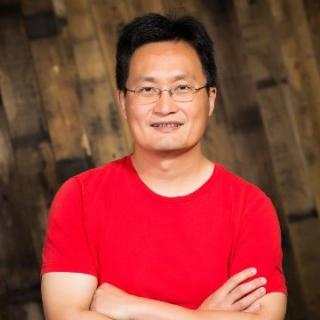

Yi Pan, lead maintainer of Apache Samza, discusses the internals of the Samza project as well as the stream processing ecosystem. Host Adam Conrad spoke with Pan about the three core aspects of the Samza framework, how it compares to other streaming systems like Spark and Flink, and how to handle stream processing for your own projects, both big and small.

Show Notes

- Yi’s Twitter

- Samza official website

- Blog

- Samza official Twitter

- Awesome Streaming

- Auto-Scaling for Stream Processing at LinkedIn

- Episode 222 – Real-time Processing with Apache Storm

- Episode 346 – Streaming Architecture

- Episode 219 – Apache Kafka

Transcript

Transcript brought to you by IEEE Software magazine and IEEE Computer Society. This transcript was automatically generated. To suggest improvements in the text, please contact [email protected] and include the episode number and URL.

Adam Conrad 00:00:21 This is Adam Conrad for Software Engineering Radio. Yi Pan has worked on distributed platforms for internet applications for over 11 years. He started at Yahoo on Yahoo’s NoSQL database project, leading the development of multiple features, such as real-time notification of database updates, secondary indexes, and live migration from legacy systems to NoSQL databases. He later joined and led the distributed cloud messaging system project, which is used heavily as a pub/sub and transaction log for distributed databases at Yahoo. In 2014, he joined LinkedIn and quickly became the lead of the Samza team at LinkedIn, as well as a committer and PMC member of Apache Samza. Yi, thanks for coming on to Software Engineering Radio.

Yi Pan 00:01:04 Thanks, Adam, for the introduction.

Adam Conrad 00:01:07 Alright, so let’s get started with Samza.

Yi Pan 00:01:10 So Samza is a stream processing platform built at LinkedIn. Before we get into a more detailed introduction to Samza itself, let’s first introduce the concept of stream processing. Data processing in the big data era started with MapReduce on top of HDFS, which processes large volumes of data in batches. The technology is optimized for throughput, not latency, so people usually need to wait for hours or a day to get an accurate result from the batch jobs. However, the emerging need for dynamic, in-session interaction with the user in internet applications demands faster iteration and shorter latency in getting analytical results from real-time data. This requires us to process the events as they come in and generate results to serve online requests in minutes or seconds, which we call stream processing.

Yi Pan 00:02:11 That’s the particular domain that Samza technology is built in, right? So Samza, as one of those stream processing platforms, started in 2011, sorry, 2013, late 2013 at LinkedIn, right after Kafka became widely adopted at LinkedIn. The first application that motivated the whole idea was an internal application that tracks the call tree in LinkedIn’s microservice stacks. We wanted to get all the invocations of microservices that belong to a single user request received at the front end, and then be able to join them all together to build a call graph tree, to understand how many calls each user request sends out and how much time we spend in each of these microservice stacks. From inception, we built the platform with the following concepts. A. It is tightly integrated with Kafka for high performance.

Yi Pan 00:03:11 B. It is a hosted execution platform to support multi-tenancy, and C. this platform needs to support high-throughput, low-latency state stores to support stateful stream processing with at-least-once semantics. That sounds like quite a lot of requirements to achieve. So Samza is designed with the following techniques to address each of them. We created an advanced wrapper of the I/O connector on Kafka to achieve per-partition flow control when consuming many topic partitions via a single consumer instance. So we are able to use a single consumer instance to consume tens or even hundreds of topic partitions, parallelize processing on all of them, and have very fine-grained flow control for each of the topic partitions. So if one of the threads becomes slow for one of the topic partitions, it will not affect the others.
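Editor’s sketch: the per-partition flow control described above can be illustrated with a toy model in which one consumer multiplexes several partitions, each with its own bounded buffer. This is not Samza’s implementation; the class name `PartitionedConsumer`, the `max_buffered` limit, and the in-memory `source` are all assumptions made for illustration.

```python
from collections import deque


class PartitionedConsumer:
    """One consumer instance multiplexing many partitions (hypothetical toy model)."""

    def __init__(self, partitions, max_buffered=2):
        self.max_buffered = max_buffered
        # Each partition gets its own bounded buffer.
        self.buffers = {p: deque() for p in partitions}

    def paused(self):
        # A partition is paused only while its own buffer is full.
        return {p for p, buf in self.buffers.items() if len(buf) >= self.max_buffered}

    def poll(self, source):
        """Fetch at most one message for every partition that is not paused."""
        paused = self.paused()
        for p, buf in self.buffers.items():
            if p not in paused and source[p]:
                buf.append(source[p].popleft())

    def drain(self, partition, n=1):
        """Simulate the task for `partition` finishing up to n buffered messages."""
        buf = self.buffers[partition]
        return [buf.popleft() for _ in range(min(n, len(buf)))]


def demo():
    source = {p: deque(f"{p}-m{i}" for i in range(5)) for p in ("p0", "p1")}
    consumer = PartitionedConsumer(source.keys(), max_buffered=2)
    processed = {"p0": [], "p1": []}
    for _ in range(5):
        consumer.poll(source)
        # The task for p0 keeps up; the task for p1 is stuck and never drains.
        processed["p0"] += consumer.drain("p0")
    return processed, consumer.paused(), len(source["p1"])
```

In this model the stuck partition stops being fetched once its buffer fills, while the healthy partition continues to make progress through the same consumer.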

Yi Pan 00:04:13 Second, to provide a hosted execution platform for multi-tenant workloads, we integrate with Yarn as the hosted execution platform to manage the hardware and also the container execution for the user application. That way, users do not need to manage their own hardware, their own monitoring, or their own process liveness; Yarn is leveraged as the distributed, hosted execution platform to run these user applications. Third, to achieve high throughput and short latency for the state stores, we also implemented a unique architecture using Kafka as the write-ahead log and RocksDB as the local state store for Samza containers. That allows us to achieve really high throughput and low latency when we read and write the state stores, each of which is effectively a RocksDB database. In addition, Samza also leverages Kafka as intermediate shuffling queues between stages in the stream processing pipeline to achieve repartitioning with fault and failure isolation. Lately we have advanced the Samza APIs a lot as well, adding SQL, Beam runners, and multi-language support on top of Beam, especially Python, to allow easier usage and to support richer windowing semantics through the Beam APIs. So that hopefully gives an overall view of what Samza is and some details about how Samza is implemented.
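Editor’s sketch: the state store design described above (a local store for fast reads and writes, backed by a replicated log for recovery) can be modeled in a few lines. A plain list stands in for the Kafka changelog topic and a dict stands in for RocksDB; the class name `ChangelogBackedStore` is hypothetical, and the write ordering and flush behavior of the real system are not modeled.

```python
class ChangelogBackedStore:
    """Local key-value store whose writes are also appended to a durable changelog."""

    def __init__(self, changelog):
        self.changelog = changelog  # stand-in for a Kafka changelog topic
        self.local = {}             # stand-in for a local RocksDB instance

    def put(self, key, value):
        self.changelog.append((key, value))  # durable record of the write
        self.local[key] = value              # fast local write

    def get(self, key):
        return self.local.get(key)           # reads never leave the host

    @classmethod
    def restore(cls, changelog):
        """Rebuild local state on a fresh host by replaying the changelog in order."""
        store = cls(changelog)
        for key, value in changelog:
            store.local[key] = value
        return store


changelog = []
store = ChangelogBackedStore(changelog)
store.put("user-1", 3)
store.put("user-2", 7)
store.put("user-1", 4)  # a later write overwrites the earlier one

# Simulate losing the container: only the changelog survives.
recovered = ChangelogBackedStore.restore(changelog)
```

The point of the design is that normal reads and writes stay local, and the log is only read back when a container has to be rebuilt elsewhere.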

Adam Conrad 00:05:48 Yes, that is a very thorough introduction. So I would love to take a higher view. Let’s pull it back to maybe a 20,000-foot view of stream processing in general. What constitutes big data in 2020? Are we talking terabytes of data, petabytes of data? Where does one start when they’re looking at stream processing?

Yi Pan 00:06:08 So that depends on whether we are looking at the collective of all the stream processing pipelines in a company or at a single one. As of today, collectively, we are processing north of 3-5 million events per second in the cluster as a whole. And some of the individual large jobs can, by themselves, process close to 1 million messages per second, with a state store close to 10 terabytes and growing.

Adam Conrad 00:06:46 And why would I need to process streams of data? What kinds of use cases are people finding for stream processing?

Yi Pan 00:06:52 Stream processing, as I explained earlier, is usually applied to cases where you want faster interaction and shorter latency in getting an aggregate result. For example, in a session, when a user opens a website and starts to read some articles or click on some interesting content, that user activity is sent back in real time to the backend serving system. And usually within the session itself, within seconds or minutes, the platform needs to understand what the current user intention is and, based on that, supply dynamic content, like further recommendations of more interesting content or some advertisement pertaining to the user’s interest. For example, if the user is looking at some job market content, he is probably interested in relevant job postings. For these kinds of interactions, you cannot afford the latency of, say, sending the user tracking activity back to my Hadoop cluster, waiting for four hours, and then getting the result back to the user. By that time, the user is already gone.

Adam Conrad 00:08:10 So anytime I see a recommendation engine where it’s recommending something else for me to watch or listen to, there’s likely stream processing going on underneath to ensure that it returns quickly.

Yi Pan 00:08:21 Yes, correct.

Adam Conrad 00:08:22 And so then when should I not consider using a stream processing framework to handle my data?

Yi Pan 00:08:26 So stream processing definitely favors latency over throughput, and because of that, it consumes a lot of resources to store and keep the partial and intermediate results in the system. And it runs 24/7. So if your analytical result does not really impact in-session dynamic engagement with the user, then you can save a lot by doing a batch process, which dynamically allocates tons and tons of resources and then shrinks down and wraps up once it’s done. So that’s the trade-off between running a stream process, which is long running and holds its resources all the time, versus a batch-based one. With batch, once the data is there, you can schedule a massively parallel job, churn through all the data as fast as you can, and then stop and release all the resources back to the system.

Adam Conrad 00:09:29 And is there a pivot point where batching just doesn’t work and it makes more sense to switch to a stream processing framework? Because I think for a lot of companies, batching is definitely one of the first ways that they think about scaling the data that they’re ingesting. And so is there a signal that people can look for when they say, okay, batching just isn’t working, something they might want to consider in advance before they start looking at stream processing frameworks?

Yi Pan 00:09:57 So I think there are no hard-set rules for when you switch to stream processing, but there are definitely some signals. If the batch process starts to have a shorter and shorter cadence, then it puts a heavier load on the scheduler. Say, instead of scheduling once every hour, now you need to schedule four times every hour, so every 15 minutes you need to schedule something. Then you are getting to the boundary where, if that’s the case, why don’t you just schedule once, run 24/7, and allocate the resources there? Because if you’re running the batch process on a 15-minute window and each batch run takes, for example, 14 minutes to finish, then you’re literally not saving any resources from the bursty batch mode of using a resource and releasing it, because now you are using that amount of resource continuously. The sentiment here is that with more and more frequently scheduled, short-latency, bursty batch jobs, you are actually using those resources continuously anyway, and you’re paying the cost of ramping up and ramping down. Why not just let it run all the time?
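Editor’s sketch: the arithmetic behind this rule of thumb is simple enough to write down. The function name `batch_utilization` is hypothetical, and the model assumes each run holds a fixed amount of resources for its whole runtime and ignores ramp-up and ramp-down costs.

```python
def batch_utilization(runtime_min, interval_min):
    """Fraction of wall-clock time a periodically scheduled batch job holds its resources."""
    return min(runtime_min / interval_min, 1.0)


# A 14-minute job scheduled once an hour holds resources under a quarter of the time.
hourly = batch_utilization(14, 60)

# The same 14-minute job scheduled every 15 minutes is busy about 93% of the time.
quarter_hourly = batch_utilization(14, 15)
```

Once this fraction approaches 1, the batch job no longer returns resources in any meaningful way, which is the signal described above.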

Adam Conrad 00:11:33 Great. And for more information on general streaming architectures, you should check out Episode 346 where we go into that in more detail. So that’s a good overview in choosing those frameworks. And you did a really great job of explaining Samza at a very high level. So talking more about those internals, I’d like to dive into each of those three parts of the core architecture. So we’ve got the streaming provided by Kafka. We’ve got the execution provided by Yarn and the processing itself done by the Samza API. So first I’d like to start with the streaming from Kafka. So are these two projects developed together?

Yi Pan 00:12:08 Very closely. As I explained a little bit earlier, Samza started right after Kafka became widely adopted at LinkedIn. The co-founders of the project were also heavily influenced by Kafka, and Jay Kreps is even a co-creator of the Samza project. So it was literally a sister team to Kafka at LinkedIn.

Adam Conrad 00:12:38 And so are they required to work together? Like, do you have to have Kafka in order to be able to use Samza?

Yi Pan 00:12:44 Not necessarily. Although Samza was almost co-developed with Kafka and leverages a lot of its architecture choices, and the implementation leverages Kafka a lot, all the interfaces are pluggable. So you can plug any pub/sub system into Samza: as long as you implement the system interfaces, the system producer and system consumer interfaces, it will work. For example, we implemented integrations of Samza with Event Hubs and Kinesis. Those are all working.
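Editor’s sketch: the pluggability described above follows a familiar pattern in which the processing loop depends only on small consumer and producer interfaces. Samza’s actual interfaces are Java; the Python classes below (`StreamConsumer`, `StreamProducer`, `InMemorySystem`) and their method names are hypothetical stand-ins used only to show the shape of the idea.

```python
from abc import ABC, abstractmethod


class StreamConsumer(ABC):
    """Hypothetical consumer-side interface a messaging system must implement."""

    @abstractmethod
    def poll(self):
        """Return a list of newly available messages."""


class StreamProducer(ABC):
    """Hypothetical producer-side interface a messaging system must implement."""

    @abstractmethod
    def send(self, stream, message):
        """Write one message to the named output stream."""


class InMemorySystem(StreamConsumer, StreamProducer):
    """Toy system used in place of Kafka, Kinesis, or Event Hubs."""

    def __init__(self, messages=()):
        self.inbox = list(messages)
        self.sent = []

    def poll(self):
        batch, self.inbox = self.inbox, []
        return batch

    def send(self, stream, message):
        self.sent.append((stream, message))


def run_once(consumer, producer, transform, out_stream):
    """Processing loop that only knows about the two interfaces."""
    for message in consumer.poll():
        producer.send(out_stream, transform(message))


system = InMemorySystem(["a", "b"])
run_once(system, system, str.upper, "out")
```

Swapping the messaging system then means supplying another implementation of the two interfaces, without touching `run_once`.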

Adam Conrad 00:13:24 So there are examples of swapping out Kafka for a different framework? Okay, and then is it just the fact that Samza came shortly after Kafka that they said, well, since we have to use streaming as a core service for this group, we might as well leverage Kafka, since it’s already part of the same organization and already part of the Apache umbrella?

Yi Pan 00:13:46 That’s definitely one of the inspirations, but also, for a large distributed pub/sub system, Kafka at that time was one of just one or two choices out there in open source.

Adam Conrad 00:14:03 So right now Samza is leveraging Kafka externally; it is its own sort of pluggable service, as you mentioned. Is there a plan where Samza can come prepackaged with some dedicated streaming layer as part of the Samza project, or will it always have some sort of plug to a different streaming layer?

Yi Pan 00:14:23 The current implementation already has Kinesis and Event Hubs implemented and packaged together.

Adam Conrad 00:14:32 Oh. So I guess my question is, those are all external solutions from different companies or different projects, right? Is there an idea to have Kafka be replaced by something that is internal to Samza? So Samza owns the streaming layer.

Yi Pan 00:14:48 Got it. So there’s no intention to replace Kafka with an internal layer, but we made the architecture open. That’s the concept.

Adam Conrad 00:15:00 So this was a deliberate choice to ensure maximum pluggability and modularity across multiple systems. Got it. Okay, and then the Yarn execution layer, is that meant to stay with just Yarn?

Yi Pan 00:15:13 No, there’s definitely an extension to that. We spent quite a bit of energy moving from a very tight integration with Yarn, where basically Samza could not run without Yarn, to a model where Samza can run with Yarn or as standalone Samza. And in the last year or two, we have also had some experiments that try to run Samza on top of Kubernetes. That’s definitely ongoing. We will integrate with more advanced, state-of-the-art distributed execution environments.

Adam Conrad 00:15:52 Now, is this the same Yarn I think of? I come from a front-end background, I do a lot of web development. Is this the same Yarn that we use for Yarn package management or is this a different Yarn system?

Yi Pan 00:16:02 Oh, that is Apache Yarn, which is part of the Hadoop ecosystem. It is a resource management system: if you submit a job asking for a set of resources to run a set of processes, it identifies the physical hosts to run the containers for you and manages their liveness.

Adam Conrad 00:16:24 Right. And so in general, for folks, this is not the Yarn you’re thinking of in the npm ecosystem. This is about, like you said, resource management, job scheduling, job execution. It’s ensuring that the jobs that get their input through the streaming layer get executed appropriately.

Yi Pan 00:16:42 Exactly.

Adam Conrad 00:16:43 Right, so when I was looking at the architecture, I noticed that Hadoop does many of these same things as well, and it also seems to be the only service that you share. Was it intentional to share Hadoop as that execution service?

Yi Pan 00:16:57 By Hadoop, you mean specifically Yarn, right? So, just to clarify, Hadoop is a big ecosystem. Yarn is just the part of the project that does the resource scheduling, and we only leverage that part. And also, as I explained, Samza leverages Yarn to do the coordination among all the processors in the same job: to have a single master that allocates and assigns the work among all the processes, and then maintains the liveness and the membership of this group. But it’s not necessarily the only implementation. The standalone Samza implementation actually replaces that model with ZooKeeper-based coordination, which allows a set of processes to do leader election to elect a single leader for the whole quorum, and then do the same workload balancing and assignment among all the live workers, in the case that you don’t have Yarn.
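Editor’s sketch: the coordination model described above has two parts, electing one leader among the live processes and having that leader spread the work across them. The toy functions below show the idea without ZooKeeper; `elect_leader` and `assign_tasks` are hypothetical names, and picking the smallest worker id and assigning round-robin are simplifying assumptions, not Samza’s actual policies.

```python
def elect_leader(live_workers):
    """Pick one leader deterministically; here, simply the smallest worker id."""
    return min(live_workers)


def assign_tasks(tasks, live_workers):
    """Round-robin the tasks over the live workers, as the elected leader would."""
    workers = sorted(live_workers)
    assignment = {worker: [] for worker in workers}
    for index, task in enumerate(sorted(tasks)):
        assignment[workers[index % len(workers)]].append(task)
    return assignment


tasks = [f"t{i}" for i in range(6)]

# All three workers are alive.
before = assign_tasks(tasks, {"w1", "w2", "w3"})

# w1 (the old leader) dies: a new leader is elected and work is rebalanced.
survivors = {"w2", "w3"}
new_leader = elect_leader(survivors)
after = assign_tasks(tasks, survivors)
```

In a real deployment, liveness and membership would come from the coordination service rather than from a set passed in by hand.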

Adam Conrad 00:18:08 Yes, that’s what I was going to ask. You mentioned a standalone Samza, so that means just like Kafka, you could also plug in a different job scheduler as well outside of Yarn. Yes. So I’m guessing the theme here is that in order to quickly accelerate, and this may just be a guess, but in order to quickly accelerate the development of Samza, in the beginning, it was so much easier to leverage Kafka or Yarn because they were already part of the Apache ecosystem than to have to build them out yourself.

Yi Pan 00:18:35 Correct, yeah.

Adam Conrad 00:18:36 Got it. But the streaming or the actual processing part of the application is done through the Samza API. So I’m guessing when we look at the entire sort of three-part core system, the Samza API itself is the most core and central to the actual Samza project, right? This is the area that has the most unique code compared to the other layers?

Yi Pan 00:18:56 Yeah. So, in my mind, when we talk about stream processing APIs in this case, that involves not only the I/O wrapper, the I/O connector that reads from and writes to the pub/sub system, but should also include the job checkpoint system and the state store system. Because in stream processing, without checkpointing and without a state store management system, you cannot really achieve failure recovery, and you cannot achieve at-least-once or even exactly-once semantics.

Adam Conrad 00:19:42 Right. And so when we think about the entire project, this was actually one part that was confusing for me, was, what do I actually call Samza? Is Samza the entirety of Kafka plus Yarn and the Samza API or is Samza just the API part to the whole stream processing architecture?

Yi Pan 00:19:59 Samza actually builds a platform or framework that hides all the system-level integrations with the pub/sub I/O system, with the scheduling system, and with the state store system behind a set of APIs. And then it allows users to plug in their user logic in the event loop exposed through this API.

Adam Conrad 00:20:28 Right, so the stitching layer happens all seamlessly through the API, and then there’s a subset of that API that is revealed to the consumer who actually wants to handle that data and send it through either a container or some sort of job to schedule for later.

Yi Pan 00:20:45 Yes, that’s right.

Adam Conrad 00:20:46 Yep. And so that’s actually a good transition point. When I started looking at the Samza API, essentially everything seems to be either a job or some sort of container. So I’m very curious to see how this integrates into databases. So when we think of databases, there are things like ACID guarantees: atomicity, consistency, isolation, durability. Are there those kinds of guarantees in a stream processing framework?

Yi Pan 00:21:12 So if you are comparing the state in a stream processing system with a database, then there’s the famous stream-table duality blog that Jay Kreps posted, I think, a few years ago, right? In streaming, the table or database is always viewed as an ever-changing thing, right? And if you are thinking about ACID control, usually it is not guaranteed in a synchronous way, right? Most of these consistency guarantees are based on eventual consistency, or on being able to replay the same sequence and achieve idempotency in the final result.

Adam Conrad 00:22:11 Yeah. And actually you saying that makes a ton of sense. If this data is constantly changing, in essence, there is no sense of consistency ever because it’s constantly changing. But like you said, I think eventual consistency is the right thing there, because at some point, the job that you’ve scheduled will end and you’ll be expecting some kind of result at the end of that job, but while it’s processing, it’s going to be constantly changing. So that makes sense. From a consistency standpoint, are the jobs themselves atomic? Could you run them again and again and get the expected action to complete?

Yi Pan 00:22:47 Yeah, atomic is also a strong word that is, I think, only applicable to a single host, or a single CPU, or a centralized locking system. With Samza as a distributed stream processing platform, as designed from the beginning, atomicity is not the design principle. The design principle is A. consistency, and B. failure recovery with replayable idempotency. So what is the difference? With atomicity, basically you have a few operations, and as you said, you have a job that has 10 different workers, right? Each of them is doing something, and you have to say that all 10 processes finish one operation atomically, with a synchronized lock, and then proceed, right?

Yi Pan 00:23:47 With eventual consistency and distributed idempotency, the way it works is that each of these 10 processes can proceed at its own pace, but you keep state, so that when you fail or shut down, you remember: oh, process one is at step three and process two is at step one, and so you have a vector of the states of the whole set of processes. Once you restart the whole job, the checkpoint may still be at step zero for all 10 processes. Then, when the input is replayed, each of these processes needs to check its own state and say: oh, this step was already applied in the previous run, so for me it’s a no-op and I will skip it. That continues up to the step that I had not executed in the previous run, and from there I continue. So that’s the way you achieve replay consistency and idempotency in a distributed system.
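Editor’s sketch: the replay-with-idempotency idea described above can be shown with a single worker that remembers the last step it applied and treats replayed steps as no-ops. The `Worker` class and `replay` function are hypothetical, and the sketch assumes the worker’s state survives the crash (for example, in a durable state store) while the job-level checkpoint lags behind at step zero.

```python
class Worker:
    """Keeps a running total plus the index of the last input step it applied."""

    def __init__(self):
        self.applied_up_to = -1  # assumed durable: survives a restart
        self.total = 0           # assumed durable: survives a restart

    def process(self, step, value):
        if step <= self.applied_up_to:
            return False         # already applied in a previous run: no-op
        self.total += value
        self.applied_up_to = step
        return True


def replay(worker, log, checkpoint=0):
    """Re-deliver the input from `checkpoint`; return how many steps were really applied."""
    applied = 0
    for step in range(checkpoint, len(log)):
        if worker.process(step, log[step]):
            applied += 1
    return applied


log = [10, 20, 30, 40, 50]
worker = Worker()

# First run: steps 0..2 are applied, then the process goes down.
for step in range(3):
    worker.process(step, log[step])

# Restart: the checkpoint is still at step 0, so the whole input is replayed.
newly_applied = replay(worker, log, checkpoint=0)
```

Replaying from an old checkpoint is safe here because each worker can tell, from its own state, which steps it has already applied.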

Adam Conrad 00:25:00 Right. So then it really doesn’t make sense to think about it in terms of atomic. Because like you said, these aren’t short-running jobs. These could run for several hours or several days, or they just run continuously, and so...

Yi Pan 00:25:11 Let me just clarify a little bit on that as well. If you want to apply atomicity, you can still do that with a centralized locking system; say you apply a centralized barrier through ZooKeeper or something like that. But that greatly affects the throughput. And a lot of stream processing applications do not really work well that way when you have to keep up with thousands or millions of incoming messages as input.

Adam Conrad 00:25:44 Right, so it almost defeats the purpose then. Like, you could technically do it, and the logging itself almost acts like a ledger then. So, like you said, if the service gets interrupted or goes down for a bit of time, the ledger acts sort of like a state machine, which says, here’s the state at a given time; when the service is back up, resume at this line at this given state. But all that extra logging is going to affect throughput. And so, like you’re saying, if you needed those kinds of requirements for that data, you probably wouldn’t even be doing stream processing in the first place.

Yi Pan 00:26:17 Yep, exactly.

Adam Conrad 00:26:19 Right. That makes sense. So that covers, I think, the job aspect. For containers, essentially it says that you can execute arbitrary code. So does that mean that security is enforced by the customer, or is it expected by the customer? How is security handled for these containers, which could execute anything?

Yi Pan 00:26:39 Right. So that’s a good point. I think for Samza applications, the word arbitrary is slightly misleading. It just refers to the fact that there’s a set of Java APIs, and using this set of system-provided APIs, you can do whatever you want in implementing your user logic. So if security means asking whether, without doing anything hacky, you are able to get beneath the API layer down to the system-level resources and objects, then the security is there. Yes, there’s no exposure of the underlying system objects to the user, except that when a message comes in, the message is there, and if you want to use the state store, the state store API is there, right?

Yi Pan 00:27:37 And if you want to write output to any system, the producer API is there. That’s the level of security that is there. But if you’re talking about a user who arbitrarily writes some Java reflection code to try to get some system-level instance through reflection, currently we don’t really prevent that. In theory, we could try to enforce this level of security with some access rules or with different class loaders for system versus user space. But that is a good question. We are still working on this, right?

Adam Conrad 00:28:19 Yes. And it’s important to point out too that because you can use Kafka right out of the box, Kafka itself provides no security for those topics, so therefore, by extension, Samza doesn’t either. So what should people be doing to ensure that their streaming code is secure? Do you have any tips or suggestions?

Yi Pan 00:28:37 So don’t do reflection, right? Don’t do reflection, and always go through the Samza system API to interact with the system, including the I/O system, the state stores, and the coordination system. Right.

Adam Conrad 00:28:54 And are there validations within that API itself? If you’re saying the Samza API is the safest way, what kind of guarantees does that API provide that help to ensure security?

Yi Pan 00:29:07 You mean, does the Samza API itself validate whether there’s invalid access to that?

Adam Conrad 00:29:13 Right. So the APIs themselves have certain type signatures for each function that you’re using. I’m assuming that there is a security layer baked into all those functions.

Yi Pan 00:29:24 I don’t think there is currently.

Adam Conrad 00:29:27 Got it. And so then what benefit are you drawing from ensuring that you properly use that API then?

Yi Pan 00:29:34 So if you properly use the set of APIs, then the code path is all controlled by the platform, which we as Samza community members maintain, and which by default does not go underneath the skin of certain objects and manipulate unauthorized system objects.

Adam Conrad 00:29:55 So it’s more for debugging or for if someone posts a bug to the Apache project, they can say, well, I use X, Y, Z APIs, and then there’s some sort of guarantees around trying to fix that because it’s part of the, you’re going through the ecosystem then, rather than going around it.

Yi Pan 00:30:12 Yeah, because underneath this set of APIs, there is no user code. It’s all platform code.

Adam Conrad 00:30:17 Got it. Okay, great. So this is a very helpful introduction to the whole ecosystem for Samza. One really interesting thing I noticed about Apache in general is it seems to own the streaming space overall, and there are a lot of tools for streaming in the Apache ecosystem. So I’d love to take a little bit of a detour and talk more about each of those and see where Samza fits into the overall landscape of streaming. So, we’ve already talked about Kafka. Kafka itself is a streaming framework that you could use on its own. So while Samza allows you to plug into Kafka, you don’t necessarily need to plug Samza into Kafka, right? If you needed to handle streaming of data, you could use Kafka standalone. Spark is another one. In what instances would I want to use Spark?

Yi Pan 00:31:08 So historically, Spark implements streaming in a micro-batch way, right? I think only after 2.4 or 2.5 did Spark Structured Streaming kind of reinvent the whole API, so that from the API point of view, you don’t see the micro-batch anymore. But underneath, the execution environment for Spark Structured Streaming is still either micro-batch or another mode called continuous execution, right? And you have to switch between them. Micro-batch is the well-adopted Spark way of doing streaming, and continuous execution is not popular yet. Right? So that’s the Spark side of things. If you compare Samza with Spark, Samza is a pure streaming engine. Comparing micro-batches with pure streaming: with pure streaming, you can do event-by-event stream processing and async commit with each of the events, right?

Yi Pan 00:32:17 And with micro-batch, you always need to synchronize on the batch boundary, and your checkpoint also needs to synchronize with the batch boundaries, right? In a lot of use cases, that’s not convenient. So that’s the short comparison between Samza and Spark. As for the comparison between Samza and Kafka Streams, it is actually an interesting story: Kafka Streams is literally a fork off from Samza. The reason is that in the early discussions in the Samza community, there were two different schools of thinking. One was that since Kafka is already a popular product, there is a ton of need from small and medium use cases that say: I do not really want to stand up my own Yarn cluster, my own ZooKeeper.

Yi Pan 00:33:12 So I want to have standalone, stream processing as a library, as my primary use case. But on the other end, for large deployments like LinkedIn and, at that time, Netflix and other big dot-com installations, having each of the different teams that develop stream processing pipelines run their own hardware, with much repeated SRE and operational effort to monitor those 24/7 jobs across the company, is definitely not the way to go. So there was also a strong need to keep hosted stream processing as a service as the primary offering in the Samza community. That is not to say that we as the Samza community did not recognize the standalone way of deploying things; it’s just that the priorities were different. So at that time, Kafka wanted to prioritize serving the small and medium use cases first, so they spun off the standalone model faster. And it took us a while to get to the coordination model that implements standalone Samza with ZooKeeper. But that’s the main difference. Even if you look at Kafka Streams as of today, it is still a library model; it doesn’t really provide stream processing as a service.

Adam Conrad 00:35:00 So if I’m understanding you correctly, you’d want to use Kafka Streams for these small to medium instances because it’s all baked in together; it’s just an easier use case. Whereas if you really want to use it to the full extent, you’d want to integrate Kafka with Samza. So these larger customers are going to be using Kafka and Samza as distinct pieces, one for the streaming portion and one for the processing portion, but for these smaller use cases, they’re going to go with Kafka Streams built into Kafka.

Yi Pan 00:35:29 Yeah, I think to me, for the small to medium cases, Kafka Streams and Samza standalone are kind of interchangeable. Kafka Streams may be a little bit more lightweight in terms of ZooKeeper setup and those things. Yeah.

Adam Conrad 00:35:47 Got it. But LinkedIn themselves is using the full

Yi Pan 00:35:50 LinkedIn uses both, actually.

Adam Conrad 00:35:52 Oh, you use both? So what instances internally are you using Kafka Streams for versus…

Yi Pan 00:35:58 No, no, no, not Kafka Streams. LinkedIn uses both Samza on YARN and Samza standalone, right? Yeah.

Adam Conrad 00:36:04 Right. Got it, okay. That makes sense. So there’s way more than just those two. So we’ve covered Spark, which is definitely more in the sort of cluster computing, big data analytics space. We’ve got Kafka Streams, which is another sort of standalone service that’s more integrated into Kafka. And it sounds like Spark, just to wrap up that piece, is probably used before you would want to use something like Samza, given that batching is definitely something you’re going to be trying out before you’re trying out a stream processing framework. So do you find a lot of people coming from Spark and then trying out Samza, or is it just different use cases in general?

Yi Pan 00:36:41 So in the early days, the Spark side and the stream side were mostly isolated silos: people who want to write batch jobs use Spark, and people who want to do real-time analytics write in Samza, right? But since 2016 or 2017, we’ve seen more and more use cases that want to do both, right? And that’s exactly our journey as well, trying to get a converged API between the batch world and the stream world. And so far, our strategy, which we actually discovered in 2016 and later in 2017, is that Beam is a good choice for us, because the Beam API is striving to put a lot of rich stream processing semantics and syntax in the API, but the whole API layer is engine agnostic.

Yi Pan 00:37:54 So Beam’s architecture is: there’s an API layer describing all the user logic, what you want to do with stream processing. But underneath, you can have different runners, which are an adapter layer to translate user logic to a particular engine. For example, Spark can be an engine there, Samza can be an engine there, Flink can be an engine there, and there are even more, like Apex and others, right? So we feel that is the best fit for our strategy, because if you compare the different engines in LinkedIn, Spark has tons of optimizations for throughput and is integrated well with the batch ecosystem. On the stream side, Samza has its own tons of optimizations in the runtime and integration with the nearline and online ecosystem in LinkedIn.

Yi Pan 00:38:51 So there’s a huge gap if you want to replace one with the other; you would probably need to spend years of effort to replicate the optimizations you’ve done on the other side. But with Beam, since the Beam layer allows the user to just focus on the application logic, the optimization, and also how you run the same logic in stream versus batch environments, becomes kind of a hidden layer from the user, right? So that allows us to have a quicker integration for the convergence use cases and allows us to advance the optimizations for each of the different engines separately.
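The split described above, one engine-agnostic description of the user logic plus a per-engine runner, can be sketched in a few lines. This is only an illustration of the idea and not the Apache Beam API: `Pipeline`, `BatchRunner`, and `StreamRunner` are hypothetical names invented for this sketch.

```python
from typing import Callable, Iterable, List

Step = Callable[[int], int]


class Pipeline:
    """Engine-agnostic description of user logic (hypothetical, not the Beam API)."""

    def __init__(self) -> None:
        self.steps: List[Step] = []

    def map(self, fn: Step) -> "Pipeline":
        self.steps.append(fn)
        return self


class BatchRunner:
    """Hypothetical runner: applies each step to the whole data set at once."""

    def run(self, pipeline: Pipeline, data: Iterable[int]) -> List[int]:
        rows = list(data)
        for step in pipeline.steps:
            rows = [step(row) for row in rows]
        return rows


class StreamRunner:
    """Hypothetical runner: pushes one event at a time through every step."""

    def run(self, pipeline: Pipeline, events: Iterable[int]) -> List[int]:
        results = []
        for event in events:
            for step in pipeline.steps:
                event = step(event)
            results.append(event)
        return results


# The user writes the logic once; the choice of engine is made separately.
pipeline = Pipeline().map(lambda x: x + 1).map(lambda x: x * 2)
batch_result = BatchRunner().run(pipeline, [1, 2, 3])
stream_result = StreamRunner().run(pipeline, iter([1, 2, 3]))
```

Both runners produce the same result from the same pipeline object, which is the property that lets each engine be optimized separately without touching application code.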

Adam Conrad 00:39:42 And this Beam layer you’re talking about, is this the same one that powers Erlang and Elixir, the concurrency languages?

Yi Pan 00:39:48 No, the Beam is Apache Beam, which I think is a project started in 2016. And right now it has already become a top-level Apache project.

Adam Conrad 00:40:00 Yeah, the more I’m sort of unpacking this, the more you can see that Apache really has so many services that power this whole streaming space. And you also mentioned Flink earlier, which is more for distributed data flow or parallelization. So that sounds like it’s another system yet again, where it’s not necessarily about replacing one thing with the other, but it’s about complementing each other through this Beam layer.

Yi Pan 00:40:23 The thing is, if I want to give a short comparison of the landscape, I usually categorize it in four different aspects, right? So, talking about all the different state-of-the-art platforms and engines out there, one aspect is whether the API is pure streaming versus micro batch. So you can see that Flink and Samza are mostly pure streaming, because usually the API is event-by-event processing, and allows async commit features and those things. And Dataflow, which is Google Dataflow, and Spark Structured Streaming mostly operate in micro-batch mode. But that’s the API-layer difference. The implementation, architectural-layer difference is whether there are RPCs versus shuffle queues in the whole pipeline.

Yi Pan 00:41:19 So if you compare all the different systems, Samza is very unique. Flink, Dataflow, and Spark Structured Streaming, in a single job pipeline, all use RPC between the stages of a single pipeline, which has to be stalled or timed out when the communication between the operators in different stages has issues, right, from the upstream to the downstream. Samza implements it a different way: it leverages a Kafka queue as the intermediate shuffling queue instead of RPC. So if you have an intermediate stage one producing something, and stage two continues processing the output of stage one, it is all communicated through a Kafka queue. So it has the advantages that you isolate the failures in the downstream from the upstream, and you guarantee the order of the sequence: once you materialize all the events you want to process, the downstream will always see the same sequence there.

Yi Pan 00:42:26 The downside, of course, is that it adds some latency and also adds some persistence costs in the whole pipeline, right? That’s the second aspect. The third aspect is large state store support. So if you compare all these, Flink and Samza actually come with very comparable feature sets: they both come with a native, free offering of a RocksDB-based local store for large states. Spark Structured Streaming by default offers an HDFS-based state store, say, if you write a map-with-state operator in Spark, right? The issue with that, as quoted from the Databricks offering on Azure, is that the free state store implementation on HDFS has some performance issues when the size of the state goes beyond a certain size. I forgot the exact number, but I think it’s in the gigabyte range. In that case, you will have to purchase the RocksDB-based state store for large state from Databricks as a proprietary offering, right?

Yi Pan 00:43:35 So that’s a difference, right? For Flink and for Samza, you get large state store support with RocksDB for free, and in Spark, you need to pay money, right? And then the last aspect I was thinking of to compare all those is cloud integration, right? Whether you have a native integration with a public cloud vendor as a service. So on that front, actually, Cloud Dataflow and Spark streaming have better integration: Cloud Dataflow is a native offering from Google, and Spark streaming is basically a first-class service offering from Azure. Flink and Samza mostly are freelancers, so you can just run your own cluster, run your own service by yourself. But as of now, there’s no cloud vendor-based offering for them yet. Hopefully that gives the overall comparison that I have. Right?
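The second aspect above, a persistent queue between stages instead of RPC, can be illustrated with a minimal in-memory sketch. It assumes a plain Python list standing in for a Kafka topic partition; `ShuffleLog` and `DownstreamStage` are hypothetical names, not Samza or Kafka classes. The point is only that the upstream can keep appending while the downstream is down, and a restarted downstream sees the same ordered sequence from its last checkpointed offset.

```python
from typing import List


class ShuffleLog:
    """Append-only log standing in for a Kafka topic partition (in-memory only)."""

    def __init__(self) -> None:
        self._records: List[str] = []

    def append(self, record: str) -> int:
        self._records.append(record)
        return len(self._records) - 1  # offset of the new record

    def read_from(self, offset: int) -> List[str]:
        return self._records[offset:]


class DownstreamStage:
    """Stage two: reads from the log and remembers how far it has processed."""

    def __init__(self, log: ShuffleLog, start_offset: int = 0) -> None:
        self.log = log
        self.offset = start_offset

    def poll(self) -> List[str]:
        records = self.log.read_from(self.offset)
        self.offset += len(records)
        return [record.upper() for record in records]


log = ShuffleLog()
stage_two = DownstreamStage(log)

log.append("a")                  # stage one produces
first_output = stage_two.poll()  # stage two processes
checkpoint = stage_two.offset    # position saved before the failure

# Stage two is down; stage one is unaffected and keeps producing.
log.append("b")
log.append("c")

# A restarted stage two resumes from the checkpoint and sees the same order.
recovered = DownstreamStage(log, start_offset=checkpoint)
recovered_output = recovered.poll()
```

With a direct call between the stages, the upstream would have had to block or time out during the outage; here the cost is the extra hop through the log, which matches the latency and persistence trade-off mentioned above.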

Adam Conrad 00:44:42 Yeah. And, you know, there’s yet another project that I think stitches some of them together. One of the topics you mentioned that’s a big differentiator between the different services is whether you’re focusing on batching versus pure streaming. Apache Apex is yet another streaming tool that’s mostly around processing either streams or batches. You’d mentioned this earlier with Kafka, that if you have Spark, you could complement Spark with Samza, where one handles the micro batching and one handles the streaming. It sounds like Apex could be that meta processor that’s allowing either streams or batches to complete through this system. How would you use Apex in that sense?

Yi Pan 00:45:27 So Apex itself is an engine; I don’t think Apex itself is an API layer. What I was mentioning earlier is that Apache Beam is an API layer, so it doesn’t really demand a specific engine to run beneath. And Apex, I think, has a Beam runner, so basically it runs the Beam program as an engine. But it is not itself designed as a convergence engine; I don’t think you can swap in any engine beneath it.

Adam Conrad 00:46:01 Yes. Okay, so in that sense, if you didn’t want to use Beam, your Apex could run the whole thing for you because it is itself the engine that’s powering this?

Yi Pan 00:46:10 Yes, you can choose to go all in on Apex, but unfortunately I don’t think the project is currently very active, and it also came way later than what we needed and what we had developed.

Adam Conrad 00:46:28 Got it, okay. So then, just sort of rounding the corner here on Apache streaming projects, you had mentioned that there’s a lot of similarity in pure streaming between Flink and Samza, but the one that actually sounded most similar to me was Storm, because that handles stream processing computation as well. So I’d imagine that there’s a lot of overlap with how Samza processes the jobs or the containers that are in those jobs, right? So it sounds like you might actually have to look at the comparisons.

Yi Pan 00:46:59 Storm was an earlier competitor of Samza that we analyzed. I think there were a few disadvantages we saw in Storm at that moment that stopped us from investigating it further. One is Storm’s large topology management: the Nimbus-based large topology management was not quite reliable at the time, which often caused stalling of the pipelines. And the other is that Storm doesn’t really come with good large state store support with short latencies, right? On that aspect, in fact, Samza was the first one integrated with RocksDB with native first-class support. Flink actually started by offering in-memory state stores first, then followed up with a RocksDB implementation. Storm doesn’t really have that at the moment.

Adam Conrad 00:47:58 So at this point then, Storm is not something that people are actually actively trying to compare. Like, if you’re going to choose something in the competition space, you’re going to be choosing Samza, you’re not going to have to worry about Storm at this point anymore.

Yi Pan 00:48:12 Yeah, so in my opinion, currently, if I am looking at the top competitors in this space, I’m going to be looking at Flink and then Spark Structured Streaming. Flink, in a lot of senses, functionality-wise and feature-wise, is very close to what Samza is offering. For Spark Structured Streaming, the main reason is that Spark has a big ecosystem on the batch side, right?

Adam Conrad 00:48:46 So then how do I know which framework to choose if there’s so many to evaluate, what am I actually looking for in particular pieces to help me choose the right system for me?

Yi Pan 00:48:54 Right. So from our point of view, we always stress performance, right? I think it’s hard to come up with a general rule or reasons for people who want to choose A versus B. I can explain several reasons why Samza is adopted as the main stream processing engine in LinkedIn, right? So from the LinkedIn perspective, the first reason is performance. The Samza-Kafka interface is thus far the most advanced I/O connector for Kafka among all the stream processing platforms that I’m seeing. If you look at most of the stream processing platforms, the integration with Kafka is, say, for each topic partition, you have to have a consumer instance, right? So then this Kafka consumer instance will feed one task, which processes the in-order sequence coming from a partition.

Yi Pan 00:50:02 But then, if you are consuming from many different topic partitions, one disadvantage we’ve seen is that some of the platforms basically need to instantiate multiple Kafka consumer instances in the same container to consume multiple different Kafka topics and topic partitions. That is in order to have a one-to-one mapping: there’s one input sequence, there’s one thread processing it. It works under two conditions: A, the set of input topic partitions is relatively static, and B, the total memory footprint of all those consumer instances in a single process is not under high pressure. But Samza works in a more advanced way: we actually instantiate a single consumer instance for the Kafka cluster, even if you are consuming from ten or even a hundred topic partitions from this cluster.

Yi Pan 00:51:10 And then internally there’s a multiplexer and also a per-partition flow control mechanism. The multiplexer will take the messages from all the topic partitions and send them out to different threads that are working on different partitions. And if one of the threads is going slow, we just pause this particular input partition, which doesn’t really pause the whole consumer instance, as a lot of other systems will do, right? So that allows us to do a very dense consumption model in a single consumer instance, yet maintain a high throughput when it needs to fan out to multiple parallel threads. That gives a lot of benefits in terms of performance for Samza processing. The other advantage, of course, is the native integration of RocksDB as a local embedded store, which yields sub-second read/write latency and strong, synchronized read-after-write consistency semantics in the state store reads and writes.
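A minimal sketch of the multiplexer with per-partition flow control described above follows. It is a single-threaded, in-memory illustration under stated assumptions, not Samza’s implementation: `SharedConsumer` and `Multiplexer` are hypothetical names, each poll returns at most one record per partition, and the worker threads are simulated by calling `take` directly.

```python
from collections import deque
from typing import Deque, Dict, List, Set, Tuple


class SharedConsumer:
    """One consumer instance covering many partitions (in-memory stand-in)."""

    def __init__(self, backlog: Dict[int, List[str]]) -> None:
        self.backlog: Dict[int, Deque[str]] = {p: deque(m) for p, m in backlog.items()}
        self.paused: Set[int] = set()

    def pause(self, partition: int) -> None:
        self.paused.add(partition)

    def resume(self, partition: int) -> None:
        self.paused.discard(partition)

    def poll(self) -> List[Tuple[int, str]]:
        """Return at most one record from every partition that is not paused."""
        batch = []
        for partition, messages in self.backlog.items():
            if partition not in self.paused and messages:
                batch.append((partition, messages.popleft()))
        return batch


class Multiplexer:
    """Routes polled records to per-partition queues and applies back-pressure."""

    def __init__(self, consumer: SharedConsumer, max_pending: int) -> None:
        self.consumer = consumer
        self.max_pending = max_pending
        self.pending: Dict[int, Deque[str]] = {p: deque() for p in consumer.backlog}

    def pump(self) -> None:
        for partition, message in self.consumer.poll():
            self.pending[partition].append(message)
            if len(self.pending[partition]) >= self.max_pending:
                # Only the slow partition is paused; the others keep flowing.
                self.consumer.pause(partition)

    def take(self, partition: int) -> str:
        message = self.pending[partition].popleft()
        self.consumer.resume(partition)  # queue is below the limit again
        return message


consumer = SharedConsumer({0: ["a0", "a1", "a2", "a3"], 1: ["b0", "b1", "b2", "b3"]})
mux = Multiplexer(consumer, max_pending=2)

fast_seen = []
for _ in range(4):
    mux.pump()
    fast_seen.append(mux.take(0))  # partition 0 has a fast worker; partition 1 never drains
```

In this run the fast partition is fully consumed while the slow partition is paused after two buffered records, so one slow task neither stalls the shared consumer nor grows memory without bound.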

Yi Pan 00:52:24 We had an earlier performance benchmark showing that Samza supports 1.2 million requests per second on a single host, a very old host with only a 1-gig NIC, right? We have no reason not to believe that it can scale up with modern hardware with 10-gig NICs and beefier CPU and memory. That’s one big aspect of LinkedIn choosing Samza, right? Another of LinkedIn’s reasons for choosing Samza is the improved APIs with rich windowing semantics. That comes from two aspects of the API improvements since 2016. A, we started supporting SQL. SQL support allows many users, such as data scientists or AI engineers, to write a stream processing pipeline without understanding the underlying system details.

Yi Pan 00:53:29 And B is, we integrated with the Beam APIs, since the Beam runner is one that we have built since 2016, which allows us to leverage the rich semantics of the Beam APIs, specifically windowing and event time. And there are also triggers, which are a very advanced API and concept in stream processing. You can read Tyler Akidau’s Streaming 101 and Streaming 102 to understand what triggers, late arrival, and early arrival mean, right? This also allows us to get multi-language support from Beam, with which we have recently successfully advanced to support Python stream processing applications in LinkedIn, right? So that’s the API side. Again, those are all built on top of a high-level API in Samza, which allows you to specify multi-stage pipelines in a single application.

Yi Pan 00:54:30 There’s much work that is still going on with SQL and Beam. The third aspect that I want to mention is that we have actually improved the operability at scale for stream processing on Samza quite a bit. The biggest challenge, of course, in stream processing is maintaining a large number of stream processing pipelines with strict SLAs 24/7. We have actually put a lot of effort into many aspects of that. I will only mention two here. One is that we added advanced auto scaling capabilities in the Samza platform. One of the biggest complaints from users, of course, is the complexity and difficulty in tuning the configuration for a running pipeline. The incoming traffic fluctuates, and when you deploy the same pipeline from data center A to data center B, the traffic is different, and the hardware may be different.

Yi Pan 00:55:30 So tons of the tuning you have done in data center A doesn’t work for data center B. This is a nuisance and creates a lot of pain for the user. This is especially true for SQL users, since SQL users mostly are not familiar with the operational details of the physical system. So we have put a lot of effort into providing tools and systems to help monitor and auto scale the jobs, so our users won’t need to carry the operational overhead. You can find our recent success in this effort in a paper at HotCloud ’20; the title is “Auto-scaling for Stream Processing Applications at LinkedIn.” We actually deployed the auto scaling system together with our large-scale operational pipelines in LinkedIn and achieved great performance and also operational cost savings.

Yi Pan 00:56:29 And we view it as an important differentiator of our technology. The second aspect I want to mention in this operability improvement is large state failure recovery and resilience. So as I explained earlier, Samza uses RocksDB for local state, and Flink follows, and even Spark Structured Streaming, when you need to have large state, also provides RocksDB as the local storage. It provides fast access in the healthy state of the job. But Samza uses a Kafka topic as a changelog to recover the state store on failure. This is heavily tuned toward healthy, high throughput at runtime, but the recovery from the Kafka topic is relatively slow. So we have actually implemented two solutions to speed up the state recovery already. One is host affinity in YARN: we allow the job to schedule the container back to the same host in YARN.

Yi Pan 00:57:32 If the host is still available, then we can leverage whatever local snapshot exists on the YARN host and speed up the recovery. In production, this actually achieves 50 times or 20 times faster recovery. And the other is to battle the case where the host is totally gone. It’s called a hot standby container. We have implemented a way that you can stand up a hot standby container on another host, which keeps the state in sync and immediately takes over the work from the failed container if the machine is critical. That allows us to operate mission-critical jobs that cannot really suffer any downtime. There is still work in progress to improve the state recovery in case of host failures.

Yi Pan 00:58:27 We try to strike a balance between the cost you pay for having many standby hosts and standby containers versus the time you take to recover. So that’s the third aspect. This is the set of reasons that we chose Samza as the runtime platform in LinkedIn, right? There are examples of other Samza users like eBay, Redfin, TripAdvisor, Optimizely, which is a marketing analysis company, and Slack, and I think in those companies most of the use cases choose Samza for the reasons above.
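The changelog-based recovery and the host-affinity shortcut described above can be sketched as follows. This is a simplified in-memory model under stated assumptions: a Python list stands in for the Kafka changelog topic, a dict stands in for the RocksDB store, and `StateStore`, `snapshot`, and `restore` are hypothetical names rather than Samza APIs.

```python
from typing import Dict, List, Optional, Tuple

Changelog = List[Tuple[str, int]]
Snapshot = Tuple[Dict[str, int], int]


class StateStore:
    """Local key-value state whose every write is also appended to a changelog."""

    def __init__(self, changelog: Changelog) -> None:
        self.changelog = changelog        # stands in for a Kafka changelog topic
        self.data: Dict[str, int] = {}    # stands in for the local RocksDB store
        self.applied = 0                  # changelog offset reflected in self.data

    def put(self, key: str, value: int) -> None:
        self.changelog.append((key, value))
        self.data[key] = value
        self.applied = len(self.changelog)

    def snapshot(self) -> Snapshot:
        """Copy of the local state plus the changelog offset it corresponds to."""
        return dict(self.data), self.applied


def restore(changelog: Changelog, snapshot: Optional[Snapshot] = None) -> Tuple[StateStore, int]:
    """Rebuild a store; returns it with the number of changelog entries replayed."""
    store = StateStore(changelog)
    if snapshot is not None:              # same host: local snapshot survived
        store.data, store.applied = dict(snapshot[0]), snapshot[1]
    replayed = 0
    for key, value in changelog[store.applied:]:
        store.data[key] = value
        replayed += 1
    store.applied = len(changelog)
    return store, replayed


changelog: Changelog = []
store = StateStore(changelog)
for i in range(1000):
    store.put(f"k{i % 10}", i)
local_snapshot = store.snapshot()         # what would remain on the original host
store.put("k0", -1)                       # one more write before the failure

cold, cold_replayed = restore(changelog)                  # new host, no snapshot
warm, warm_replayed = restore(changelog, local_snapshot)  # rescheduled on same host
```

Both paths end in the same state, but the cold restore replays the entire changelog while the warm restore only replays the tail written after the snapshot, which is the reason rescheduling onto the same host recovers faster. A hot standby would go one step further by applying the changelog continuously on a second host.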

Adam Conrad 00:59:09 Great. And we’ll link to all this documentation in the show notes. So you’ll be able to see the Samza official website, the Twitter, the link to other resources at Awesome Streaming, as well as the paper you referenced earlier, Auto-Scaling for Stream Processing at LinkedIn. So we’re basically going to wrap things up. Could you just summarize really quickly: when someone’s ready to make the dive into Samza, what sort of parting advice could you give to folks who are looking to get started with stream processing or Samza in general?

Yi Pan 00:59:40 So I think, first of all, when you are looking into a stream processing platform, you need to understand that in this domain, throughput, fast performance, and consistency are usually a big trade-off. So just keep in mind that with any distributed streaming platform, you need to look at those trade-offs. And if you’re specifically looking for a comparison between the stream processing platforms, as I mentioned earlier, there are the four aspects. First, I recommend you look at the API and see whether it’s pure streaming or micro batch, and look back at your use case: whether you can take the micro-batch way of writing your logic, or you have to go the full streaming way because there’s some logic that is hard to align with the batch boundary, for example, session windows and those things, right?
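The session window example is worth making concrete, since it shows why some logic does not align with batch boundaries. The sketch below is a toy illustration with made-up timestamps; `sessionize` is a hypothetical helper, and the fixed 10-unit batches are an assumption chosen to make the boundary problem visible.

```python
from typing import List


def sessionize(timestamps: List[int], gap: int) -> List[List[int]]:
    """Group sorted event timestamps into sessions split by more than `gap` of inactivity."""
    sessions: List[List[int]] = []
    for ts in timestamps:
        if sessions and ts - sessions[-1][-1] <= gap:
            sessions[-1].append(ts)   # still within the current session
        else:
            sessions.append([ts])     # inactivity gap exceeded: start a new session
    return sessions


events = [1, 3, 9, 11, 12, 30]

# Event-by-event processing sees the whole sequence and finds three sessions.
streaming = sessionize(events, gap=5)

# Naive micro-batching: cut the same events into fixed batches of 10 time units
# and sessionize each batch independently, with no state carried across batches.
batches = [[t for t in events if start <= t < start + 10] for start in (0, 10, 20, 30)]
per_batch = [session for batch in batches for session in sessionize(batch, gap=5)]
```

The session covering 9, 11, and 12 straddles the boundary at 10, so the per-batch version splits it in two unless extra state is carried between batches. That stitching work is the kind of cost to weigh when choosing between a micro-batch and a pure streaming API.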

Yi Pan 01:00:57 And then the other aspect is, for the whole pipeline, how much consistency you want to achieve; not atomicity, but consistent snapshots or exactly once. If you want strong exactly-once across the whole pipeline, then you may pay the additional cost of synchronizing the states in different stages, for which RPC probably gives you better options. But if you say, I do not want to sacrifice my performance because of that, I want an eventually consistent way and to be able to isolate failures in different stages without impacting the others, then a persistent shuffling queue in between the stages of a pipeline may help to solve your problem, right? The size of the state store is another big problem.

Yi Pan 01:01:59 If you’re doing stateful stream processing, then I would recommend that if the sizes are big, you look for a RocksDB solution. Yeah, mostly that. And lastly, on the cloud vendor integration side, it is up to your appetite: do you want to have your own installation and run a stream processing cluster yourself, or can you leverage some existing cloud vendor offerings? That’s just to start with; there are a lot more details to evaluate.

Adam Conrad 01:02:37 Perfect. Well Yi, we’re just about out of time. Thank you so much for coming on Software Engineering Radio today.

Yi Pan 01:02:43 No problem. Thanks a lot, Adam.

[End of Audio]

SE Radio theme: “Broken Reality” by Kevin MacLeod (incompetech.com — Licensed under Creative Commons: By Attribution 3.0)