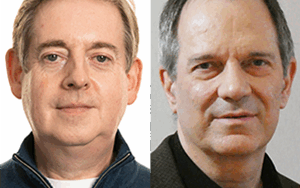

Aaron Rinehart, CTO of Verica and author, discusses security chaos engineering (SCE) and how it can be used to enhance security in modern application architectures. Host Justin Beyer spoke with Rinehart about how SCE fits into the overall chaos engineering discipline, examining how SCE compares to traditional security approaches, how it can benefit compliance, and how it can be used to improve observability of applications and identify gaps before real incidents occur. They also discussed how to develop SCE experiments, how it can be brought into an organization, and some decisions that should be made around tooling for running security chaos experiments.

Show Notes

Related Links

- Security Chaos Engineering Report – Kelly Shortridge and Aaron Rinehart

- Chaos Engineering – Casey Rosenthal and Nora Jones

- ChaoSlingr

- Security Chaos Engineering for Cloud Services

- Applied Security Presentation at Conf42 – Aaron Rinehart and Jamie Dicken

- The Human Side of Cybersecurity Seminar Series: David Woods and Adaptive Systems

- https://twitter.com/aaronrinehart

Transcript

Transcript brought to you by IEEE Software

This transcript was automatically generated. To suggest improvements in the text, please contact [email protected].

Justin Beyer 00:00:16 This is Software Engineering Radio, the podcast for professional developers, on the web. SE Radio is brought to you by the IEEE Computer Society. Hello, this is Justin Beyer for Software Engineering Radio, and today I’m speaking with Aaron Rinehart. Aaron is expanding the possibilities of chaos engineering to cybersecurity. He began pioneering security chaos engineering as chief security architect at UnitedHealth Group (UHG). While at UHG, Rinehart released ChaoSlingr. Rinehart recently founded a chaos engineering startup called Verica with Casey Rosenthal from Netflix and is a frequent author, consultant, and speaker in the space. Welcome to the show, Aaron.

Aaron Rinehart 00:00:43 Thanks for having me.

Justin Beyer 00:00:44 Of course. So just for our listeners, if you’re not familiar at all with chaos engineering, I want to refer you back to episode 325, where we discussed chaos engineering with Tammy Butow. But Aaron, just to start off the episode and as a quick refresher, can you give us a quick overview of the goals of chaos engineering as an overall discipline, and then a little bit on how security chaos engineering fits into that overall?

Aaron Rinehart 00:01:06 Sure. There’s the original Netflix definition of chaos engineering, but I’ll start with my definition; it’s just the way I like to interpret it for people. It’s the idea of proactively introducing turbulent conditions into a distributed system to try to determine the conditions by which a system or service will fail before it actually fails. So the fundamental premise of the problem is that we’re learning about how our systems actually work through surprises. If it wasn’t a surprise, meaning an outage or an incident, we would’ve fixed it, right? Because we would’ve known about the problem. Well, we didn’t know about the problem, so we couldn’t fix it. So it’s a surprise, but the surprises come at a cost, and that cost is customer pain. Chaos engineering allows you to be proactive about that and surface that failure before it manifests as customer pain. So it’s not about causing chaos; it’s about creating order.

Justin Beyer 00:02:02 Yeah. It’s almost that controlled detonation in your environment to ensure you’re not creating an unsafe environment. You already know that there are hidden things, but let’s find them before they come out and surprise you.

Aaron Rinehart 00:02:16 Precisely.

Justin Beyer 00:02:17 Can you kind of discuss what the goal of security chaos engineering is within that overall chaos engineering concept?

Aaron Rinehart 00:02:24 Well, it’s kind of the same. Throughout my career, I’ve been a builder; I was a builder for most of my career before I got into security. And so you can never really lie to me about how things are built. I know things are just, you know, barely put together. I mean, for software, it’s very much a process of “that didn’t work, that didn’t work, oh, that kind of works.” It’s a very messy thing, but that’s how it goes. I mean, the whole Kent Beck “explore, expand, extract,” right? Exploration and experimentation are a core part of building things. And so I’ve always looked at security as an engineering problem. From my perspective, it still is an engineering problem and always has been.

Aaron Rinehart 00:03:05 So when I saw chaos engineering, it made so much sense to me as a security practitioner. Security is not a separate part of the system; the system is either secure or it’s not. The fundamental problem that we’re trying to solve with chaos engineering for security is really about the builder, security, and the build process. What happens is that systems need a certain amount of flexibility for a builder to change something, right? That need to be flexible and constantly change something comes up against the fact that security needs context in order to be effective. So if you change something, and you’re constantly changing it, the security mechanism or practice has to change with it.

Aaron Rinehart 00:03:52 The problem is that that’s not happening. And you put that problem set in a world of high speed and high scale, and the problem just bifurcates, right? It just goes into different sections. What we’re doing with chaos engineering for security is really the same sort of thing we do for availability and the general brittleness of a system: we’re asking the system a question. “Hey system,” or “Hey security, I believe that when condition X occurs, you’re tasked to do Y.” Now, when X occurs, does Y actually happen? And I’ll tell you what, I have yet to ever see a chaos engineering experiment for availability, stability, or security actually succeed the first time. We’re almost always wrong about what we think the system actually does.
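
The question-asking pattern described here (when condition X occurs, does control Y respond?) can be sketched in Python. This is only a minimal illustration under stated assumptions, not code from ChaoSlingr or any tool mentioned in the episode: `SecurityExperiment`, `run`, and the in-memory `state` dictionary are hypothetical names invented for the example, and the "environment" is a plain dictionary rather than a real system.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SecurityExperiment:
    """A hypothesis of the form: when condition X occurs, control Y responds."""
    name: str
    inject: Callable[[], None]    # introduce condition X
    verify: Callable[[], bool]    # did Y actually happen?
    rollback: Callable[[], None]  # restore the known-good state


def run(experiment: SecurityExperiment) -> bool:
    """Inject the condition, check the hypothesis, and always clean up."""
    experiment.inject()
    try:
        return experiment.verify()
    finally:
        experiment.rollback()


# Hypothetical in-memory stand-in for a real environment.
state = {"port_22_open": False, "alerts": []}


def open_port() -> None:
    # Condition X: an unauthorized port is opened.
    state["port_22_open"] = True
    # A working control would append an alert here; this simulated one is silent.


def alert_raised() -> bool:
    # Expected response Y: an alert about the change exists.
    return "unauthorized port change" in state["alerts"]


def close_port() -> None:
    state["port_22_open"] = False


experiment = SecurityExperiment(
    "unauthorized port change is alerted", open_port, alert_raised, close_port
)
hypothesis_held = run(experiment)
print(f"{experiment.name}: {'held' if hypothesis_held else 'did NOT hold'}")
```

In this simulated run the hypothesis does not hold, which mirrors the point that first-time experiments tend to reveal a wrong assumption. The rollback in `finally` reflects one possible design choice: the injected condition is removed even if verification raises an error.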

Justin Beyer 00:04:44 Exactly. And that’s the whole point of actually doing the testing. As you mentioned, a lot of systems end up being put together with a little bit of duct tape and a little bit of bubblegum to stand them up. And the problem is that when you look at traditional security tools like a WAF, for example, a web application firewall, the most basic of them all is regex. You can’t keep that up and actually grow it with a rapidly developing application, because no application developer wants to write 500 WAF rules every time they roll out a new feature to some endpoint. But going down this track of where SCE and traditional security are kind of butting heads: most of our listeners are really software engineers, and they’re not necessarily in the security world; that’s not where they live. So I want to discuss what some of the traditional information security goals and methods are and why they don’t work in agile development environments. For example, when you look at something as core, concept-wise, as the CIA triad in security.

Aaron Rinehart 00:05:50 So let me see, where do I want to start with this? Just for context, I was a software engineer for most of my career. If I identify as anything, it’s a software engineer, probably just as much as a security professional or engineer, I guess, whatever. So what are the traditional contexts that security chaos engineering comes up against? Just to be clear, about the other testing methodologies or instrumentation methods for security: I’m not saying you shouldn’t do any of those. You still need to do pen testing. You still need to do scanning for static issues in code. You still need to do runtime-based assessments.

Aaron Rinehart 00:06:37 The problem that we’re dealing with is the post-deployment complexity issue. It’s not so much the build process we’re addressing; there are other methodologies that are already addressing those problems. What we’re dealing with is the sheer amount of complexity post-deployment. For example, let’s take a 10-service healthcare application. You’ve got an Rx service, you’ve got a claims service, et cetera; 10 services, right? Well, let’s keep in mind, you probably have a different set of humans for every service, and they’re probably releasing maybe on the same schedule, maybe not, but close. And you don’t just have 10; for throughput reasons, you might have five to 10 of each. So already you’re looking at 50 to a hundred, and we haven’t even started yet.

Aaron Rinehart 00:07:24 Microservices are interdependent, meaning that they’re not independent; they’re dependent upon other services for functionality and uptime. Well, sometimes you need to have older versions of a service running to support other services, so you’ll have some older version running. And you might be rolling some blue-green type of deployments, so you might have some newer versions too. So you’re already talking about two to three hundred microservices, and you haven’t started adding any weird complexity to this problem. It’s very difficult for humans to mentally model that behavior. And think about it: these humans are constantly releasing and changing things. Are we all aware of how all the context of the system is changing on a constant basis? We’re not. The system is doing what Sidney Dekker calls slowly drifting into a state of unknown.

Aaron Rinehart 00:08:13 And we don’t find out what was really happening in that system until we have an outage or an incident, and that’s painful to customers. Well, we don’t have to encounter these problems, per se. Chaos engineering is a corrective methodology for that. So, coming back to the security question: let’s say we have containers, Docker containers, and these types of container security solutions, like Aqua, Twistlock, Capsule8, whatever you want to pick. I’m not picking on anybody. But does that solution, and all the other testing that you put in place, actually work? The whole goal of what we’re trying to do is ask: did all of that mean anything? We did all that testing and put all these controls in place.

Aaron Rinehart 00:09:00 We put in all these firewalls and all these change and configuration management types of detection tools. Do those things actually function when they’re needed? Because finding out during a security incident or a breach is not a very effective way of detecting that. It’s hard, because the security incident response process is very subjective. No matter how much money you spend on security or how many people you hire, even if you have the best and brightest, you still don’t know a lot. You don’t know when it’s going to happen, why it’s happening, who’s trying to get in, or how they’re going to get in. You’re hoping that all the things you put into place actually function when they’re supposed to function. And this is something I love to say: engineers, no matter what discipline of engineering it is, don’t believe in hope or luck.

Aaron Rinehart 00:09:49 We built it; it works or it doesn’t, and instrumentation tells us which. The data we get from instrumentation is the feedback by which we build context to improve what we built, and that’s what we’re trying to do for security. So for example, I have a firewall in place. Did the firewall actually detect and block a misconfigured port change? We’ve been solving that problem for 35 years; there’s no reason why that should have been missed. Did the firewall provide good context to the incident response process? Did it throw an alert that they couldn’t even read? What really drives me nuts is when the alerts or the log data don’t make sense to a human. We’ve forgotten that was the point to begin with, right? It’s supposed to tell us what went wrong, so we have the context and awareness to fix it.

Justin Beyer 00:10:41 Exactly. Having your system spit out 500 JSON objects every 20 seconds whenever a user hits any endpoint on the service isn’t going to provide you any useful security context, unless you’re looking for very specific key activity indicators within that. And as we’ve alluded to throughout the show, you start piling on 5, 10, 15, 20 controls because you have a little bit of HIPAA over here and a little bit of PCI over here. So you went with checkbox security, went down the list, and said, “Well, I have file integrity monitoring. I have a firewall that blocks it.” If that doesn’t all come together somewhere, it doesn’t help. So how does SCE help fix some of that?

Aaron Rinehart 00:11:26 What it does is really help us instrument that ecosystem; we’re now talking about post-deployment. It asks the question. Honest to God, I started doing this years ago at UnitedHealth Group. This was after I was part of leading the DevOps transformation there, and the cloud transformation. We were very new to AWS at the time. I’d been through a cloud transformation before, so I knew the kind of problem sets I was coming up against. Then we hired our first SRE, and he started talking about this thing called chaos engineering. And I’m like, “Oh, I think this can help me with my problem.” My problem as the chief security architect at UnitedHealth Group was that people would come to me, like, you’d have a master data architect and a solutions architect for an application come to me with two different diagrams of the same system.

Aaron Rinehart 00:12:10 Each one represented their mental model, in their context, of how they viewed the system. Well, it’s hard for me to design good security if I don’t have an accurate reflection of what the system actually is. I was always concerned. I’m very passionate about my work, very passionate about the craft. I want my work to be effective. I want it to be helpful. I want to add value. And I was never sure if I actually had the right information, or if the guidance I gave them was actually correct, because the placement and configuration of security controls truly matters. Remember, security controls are context dependent. So what I needed was a way to skip through all the humans and the process and ask the computer questions. I said, “Hey, you know, when X condition occurs,” right?

Aaron Rinehart 00:12:55 When an S3 bucket is launched without encryption, or with the wrong kind of encryption, or a misconfigured port happens, are we detecting that? These are very simple things. I’ve actually thought chaos experiments are kind of like what they say about martial arts: if you get one move right, and you’re an expert at that one move, you’re almost untouchable. It’s kind of the same thing with chaos engineering, I’ve felt. You get one simple experiment, you can run it so many times in different environments, and it tells you all different kinds of things. I mean, you can look at the observability, which in my opinion is still one of the biggest unsolved problems in security. Software security observability is horrific, and there’s not a whole lot of people approaching that problem. But chaos engineering is one way to help you find out how helpful your observability is. When I first started doing this, there was a woman at Pinterest; she’s moved on since then.

Aaron Rinehart 00:13:46 Her name is Prima Virani. She actually wrote one of the use cases in the O’Reilly book. She started running security chaos experiments and started turning off logging on microservices. Their assumption was that they would immediately detect that kind of behavior and it would be a non-issue; they’d be able to fix it and respond to it. Well, they didn’t, and I’d argue most people out here probably wouldn’t either, because most people look at log volume. They don’t look at individual logging services. I thought that was interesting.
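
The gap between watching aggregate log volume and watching individual logging sources can be illustrated with a short Python sketch. Everything here is an assumption made for the example: the service names, the baseline of 100 events, and the 20% tolerance are invented, and the functions `volume_looks_normal` and `silent_services` are hypothetical rather than part of any tool discussed in the episode.

```python
from collections import Counter
from typing import Dict, List, Set


def volume_looks_normal(events: List[Dict], baseline: int, tolerance: float = 0.2) -> bool:
    """Aggregate check: is total log volume within tolerance of the baseline?"""
    return abs(len(events) - baseline) <= tolerance * baseline


def silent_services(expected: Set[str], events: List[Dict]) -> Set[str]:
    """Per-source check: which expected services emitted nothing in the window?"""
    seen = Counter(event["service"] for event in events)
    return {service for service in expected if seen[service] == 0}


# Hypothetical window of log events after logging was switched off on "billing".
expected_services = {"claims", "rx", "billing"}
window = [{"service": "claims"}] * 60 + [{"service": "rx"}] * 45

volume_ok = volume_looks_normal(window, baseline=100)
missing = silent_services(expected_services, window)

print("volume check passes:", volume_ok)
print("services with no logs:", sorted(missing))
```

In this made-up window, the other services are noisy enough that total volume still looks healthy, so only the per-service check notices that one source has gone quiet.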

Justin Beyer 00:14:16 Yeah. And we’re going to cover this a little bit later, in how you’re designing your SCE experiments and your processes. But as you mentioned early on there with the architecture conversation, you can have six architects in a room and they all might have generally the same diagram, but that’s not going to reflect reality when it actually gets deployed to AWS. And then you can use the AWS tools and think that you’re detecting every time somebody puts up an S3 bucket that’s public, but maybe you’re only detecting if they initially deployed it and made it public, but not if they changed it later, or if someone went in after the fact and put up another service that had public access and was copying data over between the S3 buckets. There are so many different rabbit holes that you can go down with that whole concept. We’re going to discuss that a little bit later when we talk about threat modeling with SCE, but I want to go back to your point there about observability in security. How does SCE provide a little bit better observability and even some better metrics around information security?

Aaron Rinehart 00:15:18 So keep in mind the context I’m giving everyone out here: I’m just at the beginning of this whole expansion of chaos engineering into security. So don’t think these are the only bounds within which you can do this. I’m still learning. Other companies have been applying it, and I’m like, “Wow, why didn’t you tell me that? I would love to learn more about that.” Anyway, on observability and metrics, here are some cool nuggets I’ve found using it for those things. Observability first: we’re proactively introducing an objective signal into the subjective process of incident response. We’re not waiting for an event to occur.

Aaron Rinehart 00:16:03 We’re proactively introducing a controlled condition into a system, and we’re watching it. We can start looking at the technology. When you’re responding to an event, there is no time to look at the quality of the log. People are probably freaking out, not sure if this is the one event. And people at most companies, I’d argue in the 90% range, are worried about the name, blame, shame game. That’s what I like to call it. It’s like, “Oh, was that me that caused it? Am I going to be blamed for it? Is my team going to take the hit? Am I going to be fired?” When an outage is going on and the war room comes on, it’s about getting things back up and running; it’s about business continuity.

Aaron Rinehart 00:16:52 It’s not about what went wrong. So we don’t do chaos engineering during that exercise. We do it when there is no fire; we do it proactively. We’re trying to introduce controlled conditions into the system to see: did the log events make sense for that misconfigured firewall, or that S3 bucket, or that vulnerable container or vulnerable image that was launched, whatever it was? Did the things work? And if they didn’t, did they give us context in the form of logs and alerts, or what Donald spot likes to call the footsteps in the sand, which are the log events, the tracing, and the observability? Did it tell us what we needed to know to do something about it? When there’s an active war room, it’s too late; you’re trying to piece together threads and figure things out.

Aaron Rinehart 00:17:45 That’s not the time to do that. What we’re able to do is figure out where our gaps are, where our visibility gaps are, and understand them proactively. So, God forbid, even if there’s an event that’s different from the condition we’re injecting, we may have better observability for that control where we found we had a gap. That’s where it can help with observability. Because what is observability? It’s the idea of understanding how your system works internally through its external outputs. So if you can’t understand the outputs, it’s not helpful. And what really drives me nuts as an engineer is this. I’m not going to pick on any security tooling or any log vendor, all you vendors out there, but there are vendors out there with the AI and ML special sauce who will say: you give me all of your alerts,

Aaron Rinehart 00:18:33 I’ll predict the next outage. That is an erosion of a fundamental premise in software. Guess who writes log events? Software engineers. What do software engineers prioritize? Features, right? What about the log events that don’t exist? Does that make sense? There’s a lot of data and context that you don’t have. So my point is, we can find out some of these things, find these gaps, and increase our visibility and, as a result, get better security. So that’s just the observability piece of this. As for some of the other metrics and measurement that I have found useful, I know the folks at Capital One and Cardinal Health have also been using it for that. Because we’re causing these events proactively, we now have a starting point.

Aaron Rinehart 00:19:19 For incident response, for example, you can start to see: did we have enough people on call for that event? Did the tools work correctly? How long did it take us to capture that? How long did it take the control to quarantine it? We can now measure all these things. The hard part before was that we were waiting for something to happen and then timing it. But just because you caught it, that doesn’t mean it wasn’t a cascading or compounding event. We rarely ever account for that in security, that it’s a compounding thing or a cascading type of failure. In a large-scale distributed system, it almost always is.
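
Because the injection time of an experiment is known, the measurements mentioned here (how long until the event was captured, how long until it was quarantined) reduce to simple timestamp arithmetic. The Python sketch below shows one way that might look. The `ExperimentTimeline` class and the timestamps are hypothetical values chosen for illustration, not data from any real experiment.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class ExperimentTimeline:
    """Timestamps recorded around one injected condition."""
    injected_at: datetime
    detected_at: Optional[datetime] = None   # None means it was never detected
    contained_at: Optional[datetime] = None  # None means it was never quarantined

    def time_to_detect(self) -> Optional[timedelta]:
        if self.detected_at is None:
            return None
        return self.detected_at - self.injected_at

    def time_to_contain(self) -> Optional[timedelta]:
        if self.contained_at is None:
            return None
        return self.contained_at - self.injected_at


# Hypothetical run: the known injection time is the starting point for the clock.
timeline = ExperimentTimeline(
    injected_at=datetime(2021, 1, 1, 10, 0, 0),
    detected_at=datetime(2021, 1, 1, 10, 4, 30),
    contained_at=datetime(2021, 1, 1, 10, 12, 0),
)

print("time to detect:", timeline.time_to_detect())
print("time to contain:", timeline.time_to_contain())
```

Returning `None` for an undetected or uncontained condition keeps a missed detection distinct from a fast one, which matters if these numbers are later averaged across runs.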

Justin Beyer 00:19:52 Yeah. We look at something as simple as a DNS issue, just from a service side of things and a service availability side of things, and that can create a cascading issue everywhere. And that can even hamper your response to it because let’s say you’re using some type of proxy to access your services. So your engineers don’t have to directly access them because you wanted to implement, you know, privileged identity management. Well, that probably relies on DNS a little bit to figure out what systems it needs to connect to. And if that’s down, you’re going to have some problems there,

Aaron Rinehart 00:20:22 DNS, certs. I mean, with those two you could go all day for chaos experiments.

Justin Beyer 00:20:28 Exactly. And just a couple more things before we start diving into designing these things, where do you think that SCE should fit into your security architecture, your security engineering processes, or should it be more melded into the software development side of the house?

Aaron Rinehart 00:20:46 I’ll tell you where I’m seeing it,

Aaron Rinehart 00:20:47 You know, because I sort of kicked off a lot of this, me and my co-author of the O’Reilly book, Kelly Shortridge. She’s been a huge advocate of it for a long time. A lot of times, where it’s originating in companies is the architecture function, and that makes sense to me; that’s where I started with it. But I’m also seeing it on the product teams. There’s a lot of growth in people using it for containers. And that has nothing to do with Aqua or Twistlock or Capsule8 or any of the other market-leading container solutions.

Aaron Rinehart 00:21:30 Their solutions can be a hundred percent perfect, but that doesn’t mean they’re always tuned correctly, that they’re always up to date, or that a port hasn’t been blocked so they can’t download the manifest to identify a new problem. That doesn’t mean the customer is always deploying it correctly, is what I’m saying, or that it’s always up to date. So those are some areas where I’m seeing it.

Justin Beyer All right, so it’s integrated more into the architecture function, which definitely makes sense if you think about it, even from the architecture concepts of designing formal proofs for an architecture or doing modeling to prove that a system works, if you look at more of the safety-critical side of software engineering. But in a normal organization, is this something that you would want to integrate into a security champions program, if you’re trying to have something like that in your organization?

Aaron Rinehart 00:22:24 I think it depends upon the security champions program, but I know Twilio is doing some work with that; I think they did some writing in the book about it. Kelly and I are actually in the process of writing a big animal book for O’Reilly, so that’s a heads up for the end of this next year, and there’ll be more writing on that in there. Architecture in particular is in a state of challenge, in my opinion, with these large-scale distributed systems and how we do security, so I’m a bit conflicted on how I see architecture anymore. But it’s useful for incident response. Incident response seems to get a lot of value from it.

Aaron Rinehart 00:23:06 I see a lot of threat hunting types of teams utilizing it, because you could chase threats all day, but it’s kind of like, what do you have the ability to control? I know what I built. Okay, does that stuff work? Does it work right? Because you can chase threats, or you can hunt for them, but there’s still the idea of deception engineering. This is a huge thing I have a conflict with. I think chaos engineering for security enables deception engineering, so that’d be honeypots and things like that. Honeypots, in my opinion, add additional overhead. As an engineer, I have to manage the security of my application or my product, and then this other added, vulnerable part of it to try to catch an attacker.

Aaron Rinehart 00:23:49 So now I have to manage X plus one instead of X. It’s about focus. A lot of the problem that chaos engineering for security addresses is that most malware, most malicious code, needs some kind of low-hanging fruit to succeed, like a misconfiguration. The idea is that we’re trying to inject those conditions into the system to ensure what we have is actually tested before an attacker can exploit that simple thing. Because if you look at most of the breaches, this is not advanced stuff that’s happening. It’s very simple things. And I don’t point the finger at anybody. The crux of the problem is the speed and scale, and that problem I described before, where a builder has to change something and security controls have to respond to it. It’s hard for humans to keep track of all those pieces at the same time. So what we need is a way to constantly check that something’s not slipping through the cracks.

Justin Beyer 00:24:53 Yeah. And that’s definitely something we’re going to cover a little bit later, after we discuss designing experiments: making sure that you’re automating these things, so it’s a continual evaluation. But one thing you mentioned in there is honeypots. Our listeners may not be super familiar with that, but the concept is that it’s a semi-vulnerable system that you’re intentionally trying to attract an attacker onto, so that you can either gather their techniques or at least detect that somebody’s on your network who shouldn’t be there or is poking around somewhere they shouldn’t be. But I used to make the joke about honeypots: how do I sell this to an executive? I’m going to put something on our network that I know is going to get hacked, or that I want to get hacked.

Justin Beyer 00:25:38 It’s a hard concept to sell to a non-security person. And as you mentioned now, you’re adding a whole other system that you have to now test for, with your security, chaos engineering, to verify that it doesn’t have some type of issue at the underlying kernel level that might allow them to gain access underneath the actual container or what have you, but kind of changing directions here. I want to move into actually designing your experiments for security, chaos engineering. And we’ve alluded to it throughout the episode, but just starting from the bare minimum, how do I pick which applications I should be doing this to? Is it only my business critical apps? Or should it be every single service that I have in my network?

Aaron Rinehart 00:26:22 I would focus on what keeps you up at night. And not only what keeps you up at night, but what do you depend upon? Because with chaos experiments in general, you only execute a chaos experiment you think is going to be successful. You do not inject a failure you know you’re not going to catch. What’s the point in that? You know it’s broken; go fix it. What we’re doing is assessing something we think is true without a doubt in our minds. It’s something we depend upon. In security, we depend heavily on firewalls, and we have for a long time. They’re the nice cozy thing in the corner, and, like, what if that thing doesn’t work? I mean, with distributed systems, come on, we’re down to the network, software, and identity as building constructs anymore, and software is still taking over those other pieces.

Aaron Rinehart 00:27:14 But anyway, that’s why I started with ChaoSlingr. That’s the open source tool that we wrote at UnitedHealth Group to assess the security of our AWS instances. I guess I’m getting a bit into design here, but we really counted on the firewall, right? So we wrote this initial experiment called PortSlingr. What it did was proactively introduce a misconfigured or unauthorized port change on a security group. It would take a port that was not already open or closed and open it or close it. Our assumption was that, without a doubt in our minds, the firewalls would detect it and block it every time. What actually opened my eyes was when we started doing this on our non-commercial and commercial software environments.

Aaron Rinehart 00:28:06 That only happened 60% of the time. And I was like, whoa, this is huge. Because we really expected this to be our saving grace, and it wasn’t. That was the first thing we learned. What was even cooler was that the cloud-native configuration management tool caught and blocked it every time. It’s like, whoa, this thing is more effective than this expensive, fancy firewall solution. And it wasn’t the firewall solution’s problem. It was a drift issue. Drift is a real problem in all aspects of software. Anyway, I can finish out the rest of that, but we just started learning that all these things we depended upon weren’t as effective as we thought, which was really cool.

Aaron Rinehart 00:28:52 Also, on this chain of events: I explain that example a lot because this simple assumption was the main starting point for me with chaos engineering for security. We learned so many different things running it on AWS environments. The third thing we expected to happen was that both of those controls in the Amazon environments would give us good log data to correlate an event. I was pretty much sure that wasn’t going to happen, because we had our own homegrown solution for doing that. We built our own security monitoring solution through logging into a big data lake. Anyway, it actually worked. I was like, that’s cool, it worked. But then the SOC got the alert.

Aaron Rinehart 00:29:37 And they were like, which environment did this come from? We were very new to AWS, remember that. As an engineer, you can think this through: you can map back that IP address and figure out where it came from. Well, there’s something called SNAT, which potentially hides the source IP address. So it could take 15 minutes, 30 minutes, three hours. That’s very expensive when downtime can cost a million dollars. But we learned all these things proactively, without incurring all that pain. All we had to do was add metadata, a pointer, to the alert, and we knew which instance it came from.
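To make the fix described above a little more concrete (attaching origin metadata to the alert so the SOC does not have to trace a translated IP address), here is a minimal Python sketch. The `Alert` class, the `enrich_alert` helper, and the field names `account_id`, `environment`, and `instance_id` are all hypothetical illustrations; the transcript does not say which fields UnitedHealth Group actually added.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Alert:
    """A simplified security alert as a SOC might receive it."""
    rule: str
    source_ip: str  # may be a translated (SNAT) address, so not reliable alone
    metadata: Dict[str, str] = field(default_factory=dict)


def enrich_alert(alert: Alert, account_id: str, environment: str, instance_id: str) -> Alert:
    """Attach origin metadata at the point where the alert is generated."""
    alert.metadata.update(
        {"account_id": account_id, "environment": environment, "instance_id": instance_id}
    )
    return alert


def can_locate_origin(alert: Alert) -> bool:
    """True if the alert itself says where it came from, without tracing NAT."""
    required = {"account_id", "environment", "instance_id"}
    return required.issubset(alert.metadata)


# Hypothetical values, for illustration only.
raw = Alert(rule="unauthorized-port-change", source_ip="10.0.0.5")
enriched = enrich_alert(
    Alert(rule="unauthorized-port-change", source_ip="10.0.0.5"),
    account_id="123456789012",
    environment="non-prod",
    instance_id="i-0example",
)
print(can_locate_origin(raw), can_locate_origin(enriched))
```

The point of the sketch is only that the origin travels with the alert, so the responder does not depend on the source IP surviving address translation.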

Justin Beyer 00:30:20 Yeah. Anyone who has any experience trying to backtrack NAT will understand how painful it is to try to backtrack a SNAT translation. But that goes into our next point here. You mentioned you were doing this in multiple environments. So is this something you should be testing in your production environment? Is this something you should only be doing in your QA environment, if you have one, or purely in your development environment?

Aaron Rinehart 00:30:47 You know, honestly, I see a lot of large companies, and there are obviously different levels of risk and different levels of context that security professionals are dealing with, like a bank versus a cloud-native company in Silicon Valley. Here’s the crux of the problem: the drift that’s happening in QA is probably happening in prod too. But here’s the thing. There is a maturity curve for all chaos engineering, and this applies to security chaos engineering as well: you really need to do the manual chaos engineering first. Anytime you do a chaos experiment for the first time, it’s important to get people from the security team, the product team, maybe the engineering manager, maybe somebody from incident response, and get them in the same room. Like, hey, do we all believe that when we introduce a misconfigured port (I’m just going to continue with this example) we’re going to catch it and block it? Because we have all been a part of the process to build that and ensure that happens.

Aaron Rinehart 00:31:45 Right? So we’re all under this assumption. And then somebody in the room will actually introduce the failure. Remember, there’s something in security called breach and attack simulation. This isn’t that. We’re not simulating a complex attack chain. This isn’t an attack. When people in chaos engineering use the word attack, it really scares me. We’re not attacking anything. It’s failure injection: we’re injecting a failure condition into the system. And when we do this manual chaos engineering exercise I’m talking about, which is called a game day, we always start in a non-prod environment, a lower environment, dev or QA. We do it where the stakes are kind of low, but with the same level of awareness that we would give prod, because there are a lot of stories of people doing chaos engineering who find out that their dev instance was connected to prod, right?

Aaron Rinehart 00:32:39 So there’s a funny story. Casey, my co-founder, is the creator of chaos engineering at Netflix. They said, well, Casey, we’re a bank, and we have real money on the line, so we can’t do chaos engineering in production. He’s like, okay, whatever, so do it in non-prod, in staging. The chaos experiment was that they were going to bring down a Kafka node. They believed that as soon as they brought down a Kafka node, another one would spin back up, ZooKeeper would do its thing, it would fail over, and the traffic and the messages would continue on. Well, they went forth and actually did that. But guess what? When they brought down the Kafka node, guess what happened? Prod went down.

Aaron Rinehart 00:33:20 Right. They didn’t realize they still had some kind of pointer that was still pointing to dev. That’s the funny thing. So yeah, that’s why you keep the awareness of, hey, we’re doing this like it’s prod. But the idea is to get to the point of what I like to call the leap of faith. I believe in the tools, the controls, the methodology by which I’m injecting failure. I’ve done it before. I’ve seen it. I trust what I’m doing. I trust that I can control the blast radius; I know where it begins and ends. I understand the observability, and the events are giving me the context I need to make sense of what happened with the experiment.

Aaron Rinehart 00:34:01 And, you know, those sorts of things, like the chaos engineering principles. I call them the rule set: principlesofchaos.org. But then the leap of faith is: I trust what I’m doing. I’ve done this before. Now it’s time to do this in production and understand how big the problem is in production. At Netflix, they had several different ways of doing this that a lot of people don’t use today. They had something called the Chaos Automation Platform, which created a margin of safety in the system to do this. When they did chaos experiments, they’d do it on like 0.01, 5% of all the traffic. They didn’t do it on all the traffic. They separated the traffic out through a reverse proxy. That way, if they were wrong about how the system worked, it only affected a small subset of users.

Justin Beyer 00:35:00 Exactly. Going back to the whole goal of chaos engineering being to reduce user pain, you obviously don’t want to kill your entire production instance because you’re still reading a message queue on a development version of Kafka, although that would probably cause other issues. But in your book, when you discuss developing your experiments, you bring up the concept of threat modeling. Back in episode 416, we talked to Adam Shostack about threat modeling as a whole and some of the different approaches. Can you give an example of the approach you found most useful when trying to develop security chaos engineering experiments?

Aaron Rinehart 00:35:38 It’s valuable. It’s a technique that I’ve used to model experiments before, because it tells you where your assets are and where your threats and actors might be. Threat modeling that out, and having a good understanding of where the trust boundaries are in the system, is a great way to prioritize which controls might be the ones we count on the most, the ones closest to the crown jewels in the system. That’s a good way to prioritize controls and what you’re going to test, because you want to identify what you depend upon, what you count on to provide you security. Another area, other than threat modeling, that I find extremely valuable is one where only a few companies in the market are really helping with the problem, like Jeli, with Nora Jones, formerly of Netflix.

Aaron Rinehart 00:36:29 She’s really trying to address this problem. There’s a statement, I forget who said it: past is prologue. What has happened in the past is likely to happen again. And we rarely look back at our incident data. I’ll tell you what, it’s a fun exercise to spend 30 minutes on. If you go back and look at prior incident data, you’ll find that barely any incidents have been documented, other than maybe Sev 1s. And even there, it’s hard to make sense of what actually happened, because people move from war room to war room to war room, and the documentation is poor. But if you go back and look, what were the trends?

Aaron Rinehart 00:37:10 What were the themes? Other than the technology failing, or the technologies we depended upon in the architecture, what particular things went wrong? Maybe we weren’t as competent as we thought at a certain capability, or maybe we’re not deploying it right. If we didn’t deploy it right on these other three applications prior, we would probably want to ensure that it’s still working a year later in these other environments. Or have we drifted back to the same problem we had before, because the application is designed a certain way where it doesn’t line up well with a security mechanism? I find it’s a good mix to use threat modeling and prior incident data to inform where you should experiment. And you should take both of those into the design of an experiment, in that human process called a game day, to make sure everyone’s on the same page.

Aaron Rinehart 00:38:03 Because it’s just like the DevOps and cloud transformation kind of trends; it’s like a movement. When everyone in the room is wrong, we’re all wrong, and we all see it. Because do you want to point the finger at the security guys? The security guy would point at the developer. I hate saying developer. I’ve always hated when people call me a developer. I prefer engineer, and I never say that word, but that’s what security people will call a software engineer: a developer. Anyway, everybody’s pointing a finger at everybody. But here you get empathy, because empathy is a two-way road. You really get that two-way transaction: we’re all wrong, and we’re all like, hey, we need to do something about this. It levels the playing field, and it’s a great way to build culture.

Justin Beyer 00:38:48 So you’re almost implementing regression testing by using that post-mortem data, or at least what exists of it, to inform and direct what experiments you’re doing. That way you’re preventing a return to issues you’ve had before and making sure that you actually addressed them, and that it wasn’t just a band-aid fix in a commit 10 years ago that someone later deleted because they didn’t think the line was important anymore.

Aaron Rinehart 00:39:16 Well, yeah. I love that comment. After you run a chaos experiment for the first time, you’ll find something that wasn’t right. Then you’ll go back, you’ll fix it, and you run the experiment again to ensure that, okay, yeah, we fixed it, and our assumption about how the system was supposed to work holds. Once you’ve proved that, then it becomes what? A regression test. You continually run it to ensure that over time, as things change, the experiment is still valid. So really, what we’re trying to do over time as we build these experiments (we call them verifications at Verica) is build a safety margin for how the system operates. Because the biggest problem with software post-deployment today is that you don’t understand where the edge of the system is until you fall off of it. And that’s not a comfortable feeling. What we’re trying to do is identify the edges and the points that lead up to failure in the system. And you can start doing that by doing chaos engineering in a managed, sort of automated, repeated way. Think of it as regression testing at that point.

Justin Beyer 00:40:25 Yes. So how do you move from that singular game day scenario into a more continuous validation way of doing things?

Aaron Rinehart 00:40:35 You know, I don’t want to get on my soapbox about Verica, but this is what Casey and I created the company for: to take people past that point. What happens is most people get an open source tool and they’ll start with the manual process of game day exercises, not realizing it may take anywhere from one month to a year and a half to get to the point where you’re producing value. And you have to be able to explain it to executives. It just depends on the scale you’re trying to do this at. What we tried to do is bring that Netflix style of sophistication to the market with our tooling. But it takes a while. I mean, you have to build that maturity, right?

Aaron Rinehart 00:41:11 So a lot of times you’ll start with product teams and SREs and sort of walk them through that game day. And then where the automation comes in is through a pipeline. The CI/CD pipeline is what a lot of people are executing the automation and the experiments through. That could be using something open source, but you don’t need to. A lot of the open source tools out there are really just a reflection of Chaos Monkey’s original tests. You can use Bash, you can use Python, just simple scripting. In security chaos engineering, that’s a lot of what people are using. Some of them are using the ChaoSlingr framework, because there were three major functions in that tool: identify the target you’re going to run the experiment on, execute the change, and track the change and report what happened. Those are the basic functions needed for a security chaos experiment. But to answer your question, it’s the pipeline where a lot of the automation is being spun off from.

Justin Beyer 00:42:11 And I do want to dive into tooling in a little bit and discuss the selection between open source and commercial, and some of the options that are out there. But can you walk us through an example of some game day scenarios that would work really well for, say, a distributed web application?

Aaron Rinehart 00:42:31 Okay. So for a security chaos experiment, let’s do that. First, just in general, from a chaos engineering perspective: you said distributed web application. Distributed systems are fun, right? The distributed factor of distributed systems is where the problem lies. Take Kafka, for example. Kafka embodies every problem in distributed systems. What is Kafka’s number one dependency? That the network be reliable. Well, what does the number one fallacy of distributed systems say? The network will never be reliable. So what’s happening is Kafka is constantly catching up and storing things to memory, and it gets split-brain issues a lot of the time as well. Anyway, with a distributed web application, you can learn a lot by manipulating latency, in terms of a general chaos experiment.

Aaron Rinehart 00:43:26 But for a web application, let’s keep it more specific, in terms of a containerized solution. Let’s say you use containers. Most people are probably using containers or AMIs. With a container, a simple experiment could be a vulnerable image: just a simple vulnerable image, launching that into a Kubernetes cluster and timing how long it takes for the security solution to detect it. It might detect it on day one. Actually, I’m at a company that has a product that does this in many different flavors and fashions, and the solutions are often not catching it. And it sounds like something so easy, so trivial: a simple vulnerable container. You can change it; you can make it a port that’s vulnerable, or a deprecated piece of software.

Aaron Rinehart 00:44:15 You can get creative with it in the container. But the solutions that are charged with detecting those things, from a static or one-time perspective, should detect it immediately. What happens if they don’t? Because I’ll tell you, it’s somewhere between 20 and 30% of the time that I’ve found that actually working. And that’s not just my own experience. That’s also the people who have reached out to me at different companies who are doing security chaos experiments with this stuff. And like I said, it’s not the security solution’s fault. It’s that in security, we build with a stateful state of mind, not with the fact that software is sort of stateless, and we don’t account for that.

Aaron Rinehart 00:45:00 We don’t account for statelessness, and drift makes that occur. But knowing about it is part of the battle, right? So, launching a vulnerable image periodically: you could do it from a pipeline. You can get creative about how you do this; it’s not that hard to figure out how you would build it. But it’ll tell you a lot about the security solution, because if it can’t detect something simple, it probably can’t detect something very complex either. So it’s a good idea to constantly keep a pulse on that, if that makes sense as an example.
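The experiment described above (launch one known-vulnerable image, then time how long the security control takes to notice) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `time_to_detect` helper, the image name, and the two fake scanners are hypothetical stand-ins, and a real version would launch the image into a cluster and query the actual security tool instead of in-memory lists.

```python
import time
from typing import Callable, Optional


def time_to_detect(
    inject: Callable[[], None],
    detected: Callable[[], bool],
    timeout_s: float,
    poll_interval_s: float = 0.01,
) -> Optional[float]:
    """Inject one failure, then poll until the control reports it or we time out.

    Returns seconds until detection, or None if nothing was detected in time.
    """
    inject()
    start = time.monotonic()
    while time.monotonic() - start < timeout_s:
        if detected():
            return time.monotonic() - start
        time.sleep(poll_interval_s)
    return None


# Stand-ins for a real cluster and scanner: in-memory records only.
launched = []
flagged = set()


def launch_vulnerable_image() -> None:
    launched.append("known-vulnerable:test")  # hypothetical image name


def scanner_that_works() -> bool:
    flagged.update(launched)  # simulates a control that picks the image up
    return "known-vulnerable:test" in flagged


def scanner_that_misses() -> bool:
    return False  # simulates a control that never fires


caught = time_to_detect(launch_vulnerable_image, scanner_that_works, timeout_s=0.5)
missed = time_to_detect(launch_vulnerable_image, scanner_that_misses, timeout_s=0.05)
print("caught:", caught is not None, "missed:", missed is None)
```

Run periodically, for example from a pipeline job, a check like this gives a simple signal: either a time-to-detect number to trend, or a `None` that says the assumption about the control no longer holds.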

Justin Beyer 00:45:33 Essentially, you want to start with what would generally be simple problems that your deployed controls should be able to solve, and move those from a manual check into a more continuous check. Then you’re validating that the verification process is actually still working correctly and that your security solution isn’t starting to drift the other way and detecting less.

Aaron Rinehart 00:46:03 You bring up a really good point, right? Simplicity. Very simple, small, repetitive experiments over time, and the kind of variations you get in response. If you do too many complex things at once, it quickly gets very hard to understand what the heck happened. It’s also a way to assess the observability in different contexts of that control. But simplicity is so key. I’m glad you said that, because that’s the distinguishing feature between this and red teaming, purple teaming, breach and attack simulation, the security color wheel. People ask me, Aaron, what color are you going to pick? And I’m like, no color. It’s failure injection. It’s not breach and attack simulation. I’m not launching a Bitcoin mining attack or a Node.js mass assignment. I’m just injecting one simple thing. I’m asking the system one simple thing. And that’s an important aspect.

Justin Beyer 00:47:04 And you bring up a really good point there. When you look at these larger security testing methodologies or frameworks, it really is about taking an attacker’s perspective: attack it, see what breaks, and then see if security detects it. But as you’re talking about this, it sounds like there’s a very specific, but also general, set of applications that are going to benefit from implementing security chaos engineering. Is it really targeted at cloud applications, large distributed systems, or service-oriented architectures with tons of microservices? Or is this something that you could do with a more monolithic application or more on-prem applications?

Aaron Rinehart 00:47:53 You know, I have people ask me that question, and it’s not the first time I’ve gotten this one. I’ll be honest: I haven’t done a lot in a lot of your traditional applications. I’d be careful, but I’m not going to say you can’t do it. People ask the same question about chaos engineering in general. I know some of the largest banks in the world do chaos engineering on the mainframe. And it’s like, whoa, what are you doing? How does that work? But I haven’t done a whole lot on your sort of monolithic, three-tier app. Stability is a key aspect that you have to take into account, meaning, could I just redeploy?

Aaron Rinehart 00:48:37 Can I redeploy? Because when I find out there’s a problem or something breaks, can I quickly fix it? That’s also accounting for the blast radius. Our more modern systems use more of a software framework or construct for the deployment of not only the application-level things, but also the infrastructure that supports them. If there’s a problem with the experiment, you’re able to quickly resolve it. But this is typically what you should be working out before you do it in a production environment. I can’t really comment on the monolithic stuff. I want to say, though, I’m not saying don’t do it; I’d just say do it with care. The same problems that I’m talking about, in terms of the need to change and be flexible in a security context and keep up with that change, still existed back in the three-tier app. I used to write applications in the three-tier app world, and not everybody knows what’s going on. Knowledge still resides with only a few people. And the system still has emergent properties that not even one.

Justin Beyer 00:49:43 Yeah. And a monolith, in my head at least, conflicts directly with that concept of being able to control blast radius. It’s very hard in a monolith to inject some type of failure and not break everything downstream, especially when you look at some of the more traditional monoliths that are heavily database reliant or heavily web server reliant. If you break the database, that app’s going down. There are no ifs, ands, buts, or questions about it.

Aaron Rinehart 00:50:10 There are no circuit breakers. There are no fancy failovers. It’s just out.

Justin Beyer 00:50:18 Exactly. And at that point you’re almost relying on the database product to fail over correctly for you to save your application. But changing directions here and moving out of the planning, let’s talk a little bit about tooling. I know you mentioned earlier where your product fits in, but I want to look at this from a very high level. How do I, as an organization, select the route I want to go for carrying these out? How do I decide whether I need to go buy a commercial solution, whether I should use Chaos Monkey from Netflix and just tweak it, or whether I should just use some Python scripts for my security chaos engineering?

Aaron Rinehart 00:50:55 Honestly, if I were to do this over again... well, when I was doing this, there wasn’t a whole lot of commercial tooling to do it, especially not for the security chaos stuff. I would try to procure or buy a solution that covered some of the basic use cases. There are so many people building the same open source tools over and over and over again. There are 10 things out there on GitHub that already do that. Great, that’s another one. There are like 10 different Kubernetes tools that kill a pod. You really don’t need to write one. You really don’t need an open source tool to do that. You could kill a pod with a simple script.

Aaron Rinehart 00:51:39 Right. I mean, truly, as engineers we gravitate towards the tooling. But it’s more important to understand what you’re doing, and to communicate to people why you’re doing it and the value in doing it. I would even advocate, honestly, simple Python, Go, or Bash scripting to start. That way you’re writing it yourself and you know what you’re doing. Honestly, you can model it off any of the open source tools. There are a lot for AWS out there. Adrian Hornsby has a great set of scripting libraries for AWS. You can use ChaoSlingr, the tool I wrote, as an example chaos experiment. Really, they only give you the framework for how to do it.

Aaron Rinehart 00:52:29 If you just read the code, I’m talking maybe a couple hundred lines of code to do this stuff. A lot of them are Lambdas, so it’s even simpler and cheaper to run. But what I want people to do is be aware of what you’re doing and why you’re doing it. That’s more important, in my opinion, than the tool you pick. These tools aren’t that sophisticated. As for the commercial tools out there, I don’t want to be biased and say go this route. I feel odd being a vendor; I’ve never been a vendor before, so I don’t want to say, oh, buy a tool. But most of those solutions are designed to get you the value quicker.

Aaron Rinehart 00:53:07 Right. Because there are certain problems that come up after you start running chaos experiments. How do you communicate the value of the results? Then how do I expand the program? How do I continue to write new experiments? And don’t just write experiments; don’t make up failure modes. That drives me nuts. Don’t just say, I’m going to kill a VM, I’m going to kill a pod. Why are you doing that? Is that a problem you’ve seen before? What are the problems we’re seeing? Try to write an experiment that replays that. That’s interesting stuff. Focus on value. Don’t focus on just breaking things, because it’s not about breaking it. It’s about fixing it. Remember, we’re trying to create order. We’re not trying to create chaos.

Aaron Rinehart 00:53:50 That’s why Casey and I, at Verica, don’t even use the words chaos engineering. People already know where our roots are; we wrote the books on it. They already know who we are. We talk about it in terms of continuous verification, because that’s what it’s about. In our opinion, it’s about continually verifying that the system works the way it’s supposed to post-deployment. We find that language is a little easier sometimes, because people get caught up in the chaos, and there are some companies out there that just talk about breaking things in production. Just don’t do that, because I promise you won’t have a job very long if you go around doing that.

Justin Beyer 00:54:21 Exactly. And it goes back to what we discussed earlier about designing your experiments: look at your previous incident data and base it off of reality. We’re going to discuss this a little bit when we talk about integrating it into the organization, but when you go in front of an executive, you want to be able to say, we did this experiment because we’ve seen this happen 500 times, and it’s had this amount of business impact and this amount of cost. So by spending the money on this, we’ve solved the problem and saved money in the future. But I do want to dive a little bit into ChaoSlingr. You described a little bit of the high-level architecture, but can you dive a little deeper into how that tool actually worked?

Aaron Rinehart 00:55:02 Sure. So ChaoSlingr is spelled C-H-A-O-S-L-I-N-G-R. People all over the internet spell it so differently. It’s on GitHub. I left UnitedHealth Group about three or three and a half years ago, so I’m not there to maintain that tool. It’s since become an internal tool for them. I think they’ve now integrated it into their pipelines; I’m not sure how they’re using it. But anyway, the basic framework for the tool is there. It’s all shown on there. There’s a flow of how the experiment actually functions for PortSlingr. When we open sourced it, we wanted a base example that everyone understood.

Aaron Rinehart 00:55:44 That’s where the PortSlingr example came from. A software engineer, a firewall engineer, a network engineer, a security engineer, a database engineer: everybody knows what a firewall is, right? So, what it did was, and I always mess this up. Amy show in was one of the lead engineers helping me write the tool, and she’d always say, “Aaron, no, there’s four functions.” I always used to say there’s three. There’s Generator, there’s Slinger, there’s Tracker, and then the fourth function is documentation, right? You have to document what the experiment’s supposed to do. So Generator does what it sounds like. Most chaos engineering tools, the open source ones out there in general, have some kind of opt-in/opt-out functionality, right?

Aaron Rinehart 00:56:35 For us, we used AWS tags. We had reference tags. So the tool, ChaoSlingr, was originally called PoopSlinger, right? I think I’ve said this on a podcast before. It was a fun project; it was off the side of our desk originally, and even grown adults talk about that stuff anyway. But what it did was it went out looking for security groups with that tag, and it would either open or close a port that was not already open or closed. It would do that right away. We didn’t open it and leave it open, right? We created the change behavior, right?

Aaron Rinehart 00:57:16 And some people use it a little bit differently, but that was the basic construct of it. The tool that made the change was actually Slinger. Slinger was the tool that actually went out and made the change, and then Tracker would track the change and report the status to Slack, because when we wrote it, that was the easiest way. We didn’t want emails or more logs. We just wanted to be able to understand what happened so we could address it. That was the basis of it, and we learned a lot from just that basic experiment. We wrote a few other experiments around S3 buckets and IAM role collisions. I think one of the most interesting areas of exploration for AWS security chaos experiments is the fabric within the fabric. IAM is the ghost in the machine in AWS. It’s one of the most difficult things to keep track of and one of the easiest things to cause a breach. So if you’re looking for areas to experiment in AWS, IAM is full of opportunity.
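
The flow described above (an opt-in tag, a Generator that picks a target and a port change, a Slinger that applies it, and a Tracker that reports it) can be sketched roughly as follows. This is a minimal in-memory illustration, not ChaoSlingr's actual code: the tag name `chaos-opt-in`, the candidate port list, and the `SecurityGroup` and `PortChange` types are all assumptions made for the example, and no AWS or Slack calls are made. Reverting the change afterwards is also left out.

```python
import random
from dataclasses import dataclass, field

OPT_IN_TAG = "chaos-opt-in"            # hypothetical tag name, not from the source
CANDIDATE_PORTS = [22, 80, 443, 3389]  # hypothetical ports to experiment with


@dataclass
class SecurityGroup:
    """In-memory stand-in for a cloud security group."""
    group_id: str
    tags: dict
    open_ports: set = field(default_factory=set)


@dataclass
class PortChange:
    group_id: str
    port: int
    action: str  # "open" or "close"


def generator(groups, rng):
    """Pick an opted-in group and a change that flips a port's current state."""
    candidates = [g for g in groups if g.tags.get(OPT_IN_TAG) == "true"]
    if not candidates:
        return None
    group = rng.choice(candidates)
    port = rng.choice(CANDIDATE_PORTS)
    action = "close" if port in group.open_ports else "open"
    return PortChange(group.group_id, port, action)


def slinger(groups, change):
    """Apply the change chosen by the generator."""
    group = next(g for g in groups if g.group_id == change.group_id)
    if change.action == "open":
        group.open_ports.add(change.port)
    else:
        group.open_ports.discard(change.port)


def tracker(change, notify):
    """Report what was changed; notify stands in for a chat webhook."""
    message = f"{change.action} port {change.port} on {change.group_id}"
    notify(message)
    return message


def run_experiment(groups, notify, seed=None):
    """One experiment run: generate, sling, track."""
    rng = random.Random(seed)
    change = generator(groups, rng)
    if change is None:
        return None  # nothing has opted in, so nothing is touched
    slinger(groups, change)
    tracker(change, notify)
    return change
```

Passing `print` or `messages.append` as `notify` is enough to try it out. Groups without the opt-in tag are never modified, which is the point of the tag-based opt-in described above.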

Justin Beyer 00:58:14 Yeah. Identity as a whole, especially with modern systems, is critical to understand. But one other question that I have for you when it comes to tooling: how should you integrate your chaos engineering tool, or your security chaos engineering tools, with your other security tools or development tooling?

Aaron Rinehart 00:58:33 You know, obviously we want to be able to integrate it. You can integrate it with your logging solutions, and with Jira or whatever ticketing solution you’re using. I see a lot of people doing that. I actually know some companies out there that require you to run experiments before you submit a ticket to the help desk for the platform, to ensure that you’ve done the basics, that everything you said you’d done is actually true, right? I’ve seen that, and people put the output into Jira. Or, if an experiment fails, it goes to Jira and goes through the process of remediation in Jira, so we can track it. Then you can put in a status of “run the experiment again,” right? And then you can track what happened. That’s a valuable way to track the lineage of your experiments, and metrics and measurement. So really it’s the ticketing and the observability type of tools. There are probably some overlaps into vulnerability management, but I don’t know how many people are doing that.

Justin Beyer 00:59:42 So essentially it’s really just around the tracking of your experiment failure or success, and then remediating that and tracking that remediation to show value.

Aaron Rinehart 00:59:51 Correct. Yeah, that’s really what we’re trying to achieve. And obviously, when the experiments fail, from that we get to that automated regression level of maturity, right? That’s also a great way to keep track of that and measure it. And it doesn’t have to be Jira. It could be any flavor of tool in that space. It just gives us a capture mechanism, a way of following that maturity progression.
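
As a rough illustration of the tracking loop discussed here (a failed experiment opens a ticket, remediation happens, the experiment is rerun, and the ticket records what happened), here is a small sketch. The `TicketTracker` class is a hypothetical in-memory stand-in, not the API of Jira or any other ticketing product, and the experiment name in the usage lines is made up.

```python
from dataclasses import dataclass, field


@dataclass
class Ticket:
    """Hypothetical ticket holding the run history of one experiment."""
    experiment: str
    status: str = "open"
    history: list = field(default_factory=list)


class TicketTracker:
    """In-memory stand-in for a ticketing system (an assumption for this sketch)."""

    def __init__(self):
        self.tickets = {}

    def record(self, experiment, passed):
        """Record one experiment run and return the ticket, if there is one.

        A failure opens (or reopens) the ticket. A later pass resolves it.
        A pass with no prior failure creates no ticket at all.
        """
        ticket = self.tickets.get(experiment)
        if ticket is None:
            if passed:
                return None
            ticket = Ticket(experiment)
            self.tickets[experiment] = ticket
        ticket.history.append("pass" if passed else "fail")
        ticket.status = "resolved" if passed else "open"
        return ticket


tracker = TicketTracker()
tracker.record("port-misconfiguration", passed=False)  # experiment fails, ticket opens
tracker.record("port-misconfiguration", passed=True)   # rerun after remediation
```

The history list is what gives the lineage mentioned above: a later failure on a resolved ticket shows up as a regression rather than as a brand new finding.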

Justin Beyer 01:00:16 Exactly. And it gives you that option. If you decide that you want to break the build for a regression or something to that effect, now you can just integrate that and handle it all in one place. Changing directions here, let’s talk about actually selling security chaos engineering to your organization. If you want to adopt it, let’s say you listen to this podcast or you read the book and you say, “This sounds like a great idea. Let’s do it.” Who do you think should lead getting that into the organization?

Aaron Rinehart 01:00:45 I can tell you from experience what I’ve seen, right? I mean, I could make up stuff all day. Kelly and I wrote a whole section on this. There’s an extra 140 pages we wrote for the report we just released, but they got cut because it could only be 90 pages. We have a whole section on this in the book that’s coming out this year, and we may do some articles on it too, because it’s a key thing: how do I sell this? But like I said before, for most people it’s SREs. Your general SREs are kind of best positioned, because that’s what they do. They’re focused on SLOs.

Aaron Rinehart 01:01:27 They’re focused on uptime, availability, performance, security, right? You’re really seeing security and SRE becoming a combined discipline, and that’s been happening over the last few years. I think that’s a trend that continues, just like the DevSecOps stuff. Security is usually five years late to a lot of that equation, but SRE and security are a perfect match. So, SREs. Your security operations folks are good, but what I’m really seeing is the architecture folks, security architects. A lot of them are very competent engineers, especially in the software security architecture space. They usually come from some software engineering background, so they understand what we’re doing, why we’re doing it, the basic problem, and building context around security. And I’m seeing those people adopting it and making the case, because a lot of times the architects are well positioned from an organizational perspective to communicate to executives why they’re doing something, the value of it, and how effective controls matter to them. And obviously sometimes they’re involved in cost measures as well, and thinking about that kind of thing.

Justin Beyer 01:02:38 Yeah. So it’s just that, as you’ve seen it, they’ve been in a position where they’re in a good communication spot with the business compared to some of the other groups within the organization. It’s not that they’re necessarily the best ones to do it. It’s just that that’s generally where it comes out of.

Aaron Rinehart 01:02:54 Well, I would also add one thing to that. Outside of the role or persona, the data will speak loud enough. If you start with a very simple case, you run the experiment on your environment, and then you demonstrate that. I’ve always found a lot of value in the idea that something built is better than something said, right? So you build it, you try it, you do it, you see what results you get from it, and you use the results to communicate what happened. I mean, that’s an obvious thing, but I just want to state that it’s also a great way to get started, because it will build momentum. Other people resonate with a problem, and if you’re having that problem, probably everybody else has the same problem.

Justin Beyer 01:03:35 Exactly. Another question that you generally hear, at least in the security space, is how does this meet some kind of compliance requirement? Is there any benefit in that space that you see with security chaos engineering?

Aaron Rinehart 01:03:48 Absolutely, right? That was one of the biggest things I brought to the chaos engineering space originally. I was like the black sheep originally with chaos engineering. So I started doing stuff with ChaoSlingr, right? Then Casey invited me out to Netflix. It’s like, “Hey, what is this thing that you’re doing? You’re doing this for security?” And I’m explaining to my friend Jason Chan at Netflix, “Hey, this is what chaos engineering does, and this is how you use it for security.” I’m at Netflix explaining to the security team how you can use chaos engineering for security, and explaining the same thing to the chaos team. It was just a hilarious experience.

Aaron Rinehart 01:04:27 But I was just kind of addressing the engineering aspects of it. Every chaos experiment has compliance value, because essentially what you’re doing is proving whether the technology worked the way you thought it did, right? Or how you had it documented. Whether it’s availability or security, that’s an auditable artifact you have right there, right? If it worked, you don’t have to go rerun it. All you have to do is map that experiment to a control framework. So use PCI, use NIST, use HIPAA, use HITRUST, use FedRAMP, whatever it is. You map it to whatever control it is, and then you’ve got a control that has audit data on a frequent basis, especially when you get to that stage where you start automating the regression type. Now you’ve constantly got an audit artifact. That’s great, because a lot of your auditors out there now are not allowing you to have screenshots and very subjective types of evidence. They want actual instrumentation and data that hasn’t been falsified or manipulated prior to giving it to them. Does that make sense? They want to know that, hey, this system is meeting its needs.

Justin Beyer 01:05:47 They want to see the evidence that you’re actually doing the continuous monitoring that you say you’re doing, and that you’re doing your disaster recovery and BCP proofs like you say you are, and that you’re continuously validating that you’re doing all of these things that you say you’re doing. Not just, “Well, I did it one day. I sent you the screenshot.”
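
One way to picture mapping an experiment to a control framework and keeping instrumented evidence instead of screenshots is the sketch below. It is an illustration under stated assumptions: the control IDs in `CONTROL_MAP` are placeholders rather than real PCI, NIST, HIPAA, HITRUST, or FedRAMP identifiers, and the record layout is invented for the example. The SHA-256 digest only lets a reviewer detect later edits when the digest is stored somewhere the record's author cannot change; it is not a signature.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical mapping from experiment name to placeholder control IDs.
CONTROL_MAP = {
    "port-misconfiguration": ["EXAMPLE-FW-1", "EXAMPLE-NET-2"],
}


def _digest(body):
    """Hash a record body in a stable key order."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def evidence_record(experiment, passed, observed_at):
    """Build one evidence record for an experiment run."""
    record = {
        "experiment": experiment,
        "controls": CONTROL_MAP.get(experiment, []),
        "result": "pass" if passed else "fail",
        "observed_at": observed_at.isoformat(),
    }
    record["sha256"] = _digest(record)  # digest covers the fields above
    return record


def verify(record):
    """Return True if the record body still matches its stored digest."""
    body = {k: v for k, v in record.items() if k != "sha256"}
    return _digest(body) == record["sha256"]


record = evidence_record(
    "port-misconfiguration",
    passed=False,
    observed_at=datetime(2021, 1, 1, tzinfo=timezone.utc),
)
```

Running the experiment on a schedule and appending one record per run gives the kind of continuous, timestamped audit data discussed above, with each record pointing at the controls it is meant to support.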

Aaron Rinehart 01:06:04 Exactly, exactly. And then

Justin Beyer 01:06:06 Just one more thing. Can you give a couple of examples of where you’ve seen a successful security chaos engineering implementation in an organization?

Aaron Rinehart 01:06:17 There are a couple of use cases actually in the book we just released, one from Capital One and one from Cardinal Health. Cardinal Health was fun for me, starting a startup. Actually, just prior to starting Verica, Rob Duhart, who’s now at Google, called me up. I was at RSA at the time. He wasn’t there, but he said, “Hey, this whole security chaos thing, how do you do it?” And then he ended up building a whole program. They call it Applied Security at Cardinal Health. He ended up hiring Jamie Dicken, and she’s the one who actually built out the program and built out the team that writes all the experiments.

Aaron Rinehart 01:06:55 And she’s been doing a lot of talks out there, so you can go on the internet, check YouTube, and check out what she’s doing. She’s remarkable. She’s done some really cool things with security chaos engineering. I think she does a lot of things around containers as well. Also Capital One: David Lavezzo at Capital One. They call it cyber chaos engineering. He started with alerts, making sure that their controls were alerting and that their alerts were effective on the controls they put in place. That’s where he started, and he branched out from there, because he actually started in the cyber test kitchen at Capital One, evaluating new technologies, right? And they were using some breach and attack simulation tools for that. But then he’s like, “Hey, why am I only evaluating the effectiveness of new tools?”