L. Peter Deutsch of Aladdin Enterprises and formerly of Sun Microsystems joined host Jeff Doolittle to discuss the fallacies of distributed computing. Peter retold the history of the fallacies themselves and corrected some frequent misattributions and misunderstandings regarding their origin. The discussion also addressed interrelationships between the fallacies and changes in the relevance of particular fallacies over the past 30 years. Specific topics referenced during the show include network reliability, network security, message security, and network topology.

This episode sponsored by Elastic and Conf42

Show Notes

Related Links

From the Show

- L Peter Deutsch

- Fallacies of Distributed Computing

- Sun Microsystems

- Xerox XNS Network Protocol

- QUIC protocol

- Butler Lampson

- James Gosling

- Principle of Least Surprise

- gRPC

- Bandwidth and Latency

- Jim Morris

Quotables

- “Single biggest design mistake is to not make an address space big enough” -attributed to Butler Lampson

- “Good open standards for software interfaces and data formats are more important than for software itself to be open source.” -L Peter Deutsch

- “The greater the adherence of a multi-network or inter-network is to standards, the less of an issue homogeneity at the hardware level or the implementation level becomes. The standards make the network not only appear homogeneous, but in operation be sufficiently homogenous.” – L Peter Deutsch

- Morris’ Dictum – “The great thing about standards is that there are so many of them to choose from” – Jim Morris

From IEEE

- Extensions of Network Reliability Analysis

- A Splitting Method for Speedy Composite Network Reliability Evaluation

- Rethinking IoT Network Reliability in the Era of Machine Learning

From SE Radio

- Episode 385: Evan Gilman and Doug Barth on Zero-Trust Networks

- SE-Radio Episode 302: Haroon Meer on Network Security

Transcript

Transcript brought to you by IEEE Software

This transcript was automatically generated. To suggest improvements in the text, please contact [email protected].

SE Radio 00:00:00 This is Software Engineering Radio, the podcast for professional developers, on the [email protected]. SE Radio is brought to you by the IEEE Computer Society and IEEE Software magazine, online at computer.org/software. SE Radio listeners, we want to hear from you. Please visit se-radio.net/survey to share a little information about your professional interests and listening habits. It takes less than two minutes to help us continue to make SE Radio even better. Your responses to the survey are completely confidential. That’s se-radio.net/survey. Thanks for your support of the show. We look forward to hearing from you soon.

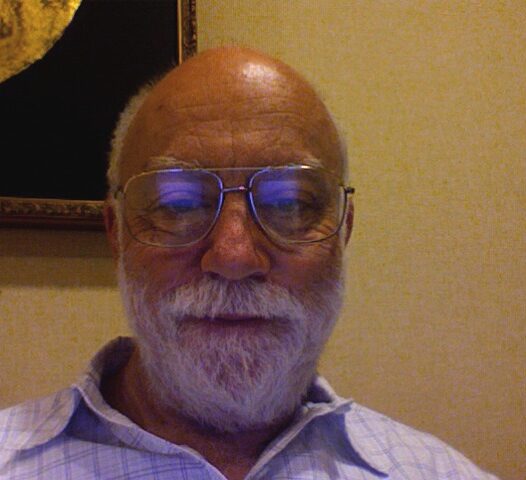

Jeff Doolittle 00:00:46 Welcome to Software Engineering Radio. I’m your host, Jeff Doolittle. I’m excited to invite L Peter Deutsch as our guest on the show today. L Peter Deutsch is best known as the original and primary author of Ghostscript, the inventor of the term “just-in-time compilation” in connection with his early JIT compiler for Smalltalk-80, and one of several independent originators of simultaneous libre and commercial software licensing. He has also contributed through documentation, as writer of the RFCs for DEFLATE, zlib, and gzip, and through conference presentations on a variety of topics, including software patents and software security. Peter, welcome to Software Engineering Radio.

Peter Deutsch 00:01:30 Thank you. I’m delighted to be here today.

Jeff Doolittle 00:01:30 So you mentioned in a previous conversation we had that you’ve been in the industry for about 60 years. I imagine you’ve seen a lot in those 60 years, and there are probably not a lot of people who can claim that they’ve had a 60-year career in the software industry.

Peter Deutsch 00:01:47 At this point, that’s probably right. It wasn’t very customary for someone to start writing software at the age of 14 when I started. These days, you know, people start coding in grade school.

Jeff Doolittle 00:01:58 Right, some do. Yeah, that’s true. In fact, my first programming class was in junior high school on a Commodore 64 back in the eighties. So I definitely started, I guess, around the same age as you, but maybe a little younger. Well, what we’re here to talk about today is something that a lot of people have probably heard of, but how much they’ve thought about them, where they came from, and how they are relevant is a great conversation for us to have. I’m referring, of course, to the fallacies of distributed computing. In the show notes, we’ll put a link to the Wikipedia article on those, which, as we might explore during the show, may not be entirely accurate. Hopefully, as a result of this show, we can help correct some of the mistakes or errors that might exist in that article. But let’s start with the background of these fallacies. Peter, how did they come about, and in what timeframe did these fallacies come into existence?

Peter Deutsch 00:02:53 So my contribution to the fallacies came about during a year and a half that I was working at what was then Sun Microsystems, which was from the latter part of 1991 to the earlier part of 1993. So at least to that extent, the dates in the Wikipedia article are incorrect. I’m not a networking guy. I’ve done very, very little with networking technology, but I think as part of the kind of new-hire hazing process at Sun, the first thing that they had me do was co-chair a working group on mobile computing strategy. My co-chair was a talented engineer named Carolyn Turbyfill, and I believe she had primarily a hardware background. So the two of us set out to talk to the engineers within Sun to find out what Sun was doing and, you know, get some ideas as to what Sun might do in the future.

Peter Deutsch 00:03:48 And it was in the course of those investigations, and learning about Sun’s work in networking, that I came up with my contribution to the list of fallacies. When I arrived at Sun, the first four fallacies were already known and established. I knew that they’d been originated by one of the two Lyon brothers, and I didn’t remember whether it was Tom or Dick. Then I added the next four, and I gave a presentation at Sun during my time there that, if I remember correctly, had all eight of them in it. So in the course of that investigation at Sun, something became pretty clear to me, which was that at the time Sun had a couple of things going for it, but the most important one was that they understood networking protocols and network architecture in a way that their competitors did not. But they were developing, or had developed, I guess, this kind of clunky, bulky mobile device, which today I guess we would call a laptop, and it seemed that they wanted some guidance on how to proceed in that arena.

Peter Deutsch 00:05:04 Well, we wrote a report. I wrote most of the report, as I recall, and what the report said was: look, Sun does not have a good competitive differentiator in the hardware arena. The Japanese and Korean companies are better set up to move forward. They were already ahead of Sun at that point, if I remember right. But the thing that Sun does have as a differentiator is engineering depth in networking and network protocols. So Sun’s strategic path forward, in order to be successful in the mobile space, is to develop and offer the non-mobile infrastructure for mobile devices. We didn’t have the terminology for it then, but in retrospect, what I was basically recommending was that Sun be a groundbreaker in what today is called cloud computing, or server-based computing. Well, this was, you know, the early 1990s. And I’ve been told by someone who was in a position to know that when this report got to the desk of Scott McNealy, who was the CEO of Sun at the time, he read the report, said, “Who are these bozos?” and tossed it in the trash. And having been, in retrospect, right,

Peter Deutsch 00:06:30 it brings me a certain amount of satisfaction.

Jeff Doolittle 00:06:32 And it does, of course, make me wonder, and possibly our listeners as well, how the world might have been different if the reaction had been different at that time. I even imagine that many of our listeners don’t even know what Sun Microsystems is or was.

Peter Deutsch 00:06:46 That’s right. They made some other, I think, perhaps not great strategic choices and wound up being gobbled up and then pretty much dismembered by Oracle.

Jeff Doolittle 00:06:56 And of course, ideas are a dime a dozen and what matters is execution. So even if the ideas had been embraced, it didn’t guarantee success, but it is interesting to think about the possibility that we could have had things differently. The nineties, from my recollection, were really more of the client-server era in many ways, but it sounds like the report was in a way leapfrogging that and saying, hey, let’s get past that and actually embrace what I think we now call network computing or, as you said, the cloud.

Peter Deutsch 00:07:26 So, you know, I don’t remember exactly what we said, and of course I didn’t take away any copies of it, but I’d like to think that we were maybe a little bit ahead of the time. You’re talking about good ideas not always coming to fruition. The one in the networking arena that I think of right away is Xerox and XNS. IPv4 was an outgrowth of what were basically the successors to the original ARPANET design, and IPv4 is a great architecture and it’s served very well, but it’s run into limitations. It’s run into a capacity limitation which nobody, I think, ever would have foreseen. And there are differences of opinion about how good IPv6 is. I’m not qualified to weigh in on that. But the thing that I did want to mention is that Xerox actually developed their own competitor.

Peter Deutsch 00:08:21 If you will, to IP. It was called XNS, and it greatly predated IPv6. It was based on a very different principle from IPv4. With IPv4, one of the problems, and one of the things that has made approaching the capacity of the IP address space difficult, is that IP addresses combine identification with routing. If you look at the way an IPv4 address is put together, part of the address basically says, or at least started out saying, something about what network that device was attached to. And if networks grow at different rates, and if different geographic areas have different needs in terms of their amount of namespace, the IPv4 model just isn’t cut out for it.

Jeff Doolittle 00:09:20 That’s a fascinating insight, right? Like the topology was coupled to the routing.
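
As a rough illustration of the coupling described here, the sketch below uses Python’s standard `ipaddress` module to split an IPv4 interface address into its network part and its host part. The helper name `split_ipv4` and the sample addresses (taken from the ranges reserved for documentation) are assumptions made for illustration and do not come from the show.

```python
import ipaddress


def split_ipv4(cidr: str) -> tuple[str, int]:
    """Split an address such as '192.0.2.77/24' into (network, host number)."""
    iface = ipaddress.ip_interface(cidr)
    # The host part is whatever is left after masking off the network bits.
    host_number = int(iface.ip) & int(iface.network.hostmask)
    return str(iface.network), host_number


# The same device (host number 77) attached to two different networks.
# Moving it changes the address, because the address encodes the network.
print(split_ipv4("192.0.2.77/24"))
print(split_ipv4("198.51.100.77/24"))
```

The point of the sketch is only that the address changes when the attachment point changes, so the address cannot serve as a stable identity for the device.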

Peter Deutsch 00:09:25 Absolutely. Or vice versa. Yeah. I’m not the first, and I’m sure I’m not the thousandth, person who’s made the observation that this had a bad effect on the evolution of the network. But it’s something that people come to realize. The interesting thing about XNS is that, as I said, it far predates IPv6, and it decoupled routing from naming completely. Devices had permanent, if I recall correctly, 48-bit names, what today we know as MAC addresses. They did not have IP addresses in the sense of IPv4. Furthermore, those unique IDs were not necessarily arranged or partitioned in any way that had anything to do with geography. That architecture puts a much greater load on routers, because they now have to keep routing information for every device. A full XNS address consisted of a 48-bit device ID and a 32-bit, again unstructured, unique ID for a network.

Peter Deutsch 00:10:35 So the idea was that routers would expect people to be supplying these 80-bit addresses and would start out by routing packets to the network specified by the 32-bit address, which might be wrong. It was only supposed to be a kind of very reliable hint. But if a device moved from one network to another, the 80-bit address would be wrong while the 48-bit address would still be fine. And so there was machinery built into the architecture for recovering from the situation where devices moved between networks. Xerox didn’t do what they should’ve done, which was to make all the specs public to encourage implementation and deployment by, you know, everybody on the planet. If I remember right, they tried to hold XNS closer to their chest as a proprietary protocol. And that was a horrendous mistake.
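
The idea of a permanent device ID paired with a network number that is only a hint can be sketched in a few lines of Python. This is a toy model under stated assumptions, not the actual XNS recovery mechanism, which the conversation does not describe in detail. The names `Address`, `Router`, `attach`, and `route` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Address:
    network_hint: int  # 32-bit network ID supplied by the sender; may be stale
    host_id: int       # 48-bit permanent device ID; never changes


class Router:
    """Toy router that tracks where each device is currently attached."""

    def __init__(self):
        self.location = {}  # host_id -> network ID the device is on right now

    def attach(self, host_id, network):
        """Record that a device has joined (or moved to) a network."""
        self.location[host_id] = network

    def route(self, addr):
        """Return (network to deliver to, whether the sender's hint was stale)."""
        actual = self.location.get(addr.host_id)
        if actual is None:
            raise LookupError(f"unknown device {addr.host_id:012x}")
        return actual, actual != addr.network_hint
```

In this sketch a sender that still holds an old 80-bit address keeps working after the device moves, because delivery is decided by the 48-bit part. The cost is the one mentioned above: the router needs an entry for every device.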

Peter Deutsch 00:11:30 And that is perhaps not the only factor, but certainly one of the factors in XNS not gaining traction. So while this isn’t directly related to the topic of the fallacies of distributed computing, it does touch on something that is related to them, which is the benefit to the developer of a standard that comes from making that standard open. The developer and promulgator of the standard, even if they don’t have full control over it, are first out of the gate, and they probably have a deeper understanding of it than anybody else. A great example of that is Adobe. I mean, I did Ghostscript, right? It’s the second implementation of PostScript. But as one of my friends at Adobe once said, my business was of about as much concern as a competitor to Adobe as a fly on an elephant, because Adobe has this entire team of developers and a deep understanding of all the issues behind raster image processing. And I didn’t. I was a software guy who started out not knowing much of anything about graphics. So they’re still the flagship, and the fact that they made those specs open, and in fact put a good bit of resources into publishing them and making them be really good specs, created this tremendous market for PostScript and later for PDF, and a tremendous amount of that expansion accrued to Adobe. If Xerox had understood that phenomenon with respect to XNS, who knows what the networking world would look like today.

Jeff Doolittle 00:13:11 Yeah. And that so far is a recurring theme in our conversation, which is what could have been, had things been different. And I think that’s a great transition to the details of the fallacies now, because the approach to these, say, 25 years ago may be different from what it is now, and might also have been different had things gone differently in relation to some of the strategic lapses, or what have you, at these companies. I’ll put links in the show notes for Sun Microsystems and Xerox, for those who don’t know who they are, although I think Xerox is most infamous, for the people who might be familiar with Xerox PARC, for the fact that they basically created the graphical user interface, which of course was commandeered, borrowed, however you might say, by others.

Peter Deutsch 00:14:07 Yes. Well, I was there when pretty much all of that was happening, but that’s a discussion for another day.

Jeff Doolittle 00:14:12 It is. Well, the fallacies, this is interesting too. We don’t have to go too deep into the whos and the whats and the whys and wherefores; you kind of reviewed it a little bit as we began. But there’s the original four, and then four that you added in your report. And then there’s a ninth that people might see from time to time around the internet, though it doesn’t show up in the Wikipedia article, attributed to James Gosling, and we can also put a link to James in the show notes. I’m going to review the fallacies real quick, and then let’s take each one at a time and talk about why it was relevant 25 years ago, what’s changed in relation to that, and either how they’ve been addressed or perhaps how they’ve become less relevant in the timeframe since you and your colleagues at Sun first created this list. So the fallacies are: the network is reliable; latency is zero; bandwidth is infinite; the network is secure; topology doesn’t change; there is one administrator; transport cost is zero; the network is homogeneous; and finally, we all trust each other. So that first fallacy, the network is reliable: that even seems to have some relevance to what you were saying before about Xerox and the XNS protocol. But let’s talk about the genesis of this idea at Sun, how it was relevant back then, and how it’s still relevant today.

Peter Deutsch 00:15:39 Well, so I don’t actually know the genesis of the first four, but what I can say about reliability is this. If you look at reliability in the context of network systems, which of course I really understand best from the software side, you have reliability enhancers potentially at all levels of what’s called the protocol stack. So down at the bottom level, you may have checksums on the packets that cause the hardware, or something very close to the hardware, to do retransmits. Then at the next level up, you have TCP windowing. And it’s at the level above that, the application level, that unreliability tends not to be addressed. Now, at the time, 25 years ago, as I recall, maybe not campus computing, but wide-area networking was not as reliable as it is today. So at the time, software correction for outright transmission errors may have been relevant. Today,

Peter Deutsch 00:16:49 I think it’s fair to say that it is not. We now have enough experience with error detection and error correction within the ubiquitous levels of the protocol stack that reliability in terms of data corruption as seen at the software level is just not a factor anymore. However, what is still tremendously relevant is outages, interruptions in service, and that is still being handled badly. So error handling on network errors, to the extent that it ever was relevant, is not particularly relevant now. Handling timeouts and handling failures to transmit is still very important, and software generally deals with that very badly. I’ll give an example that just came up in the last week. My husband uses an iPhone, and he does a lot of email on the iPhone. When he tries to send email from the iPhone, if the transmission fails, number one, he says the iPhone doesn’t tell you about it. And number two, it doesn’t try to retransmit. You have to notice that the message is still in the out queue and tell it to transmit again. So this is terrible user interface design, it’s terrible architecture, and it’s the result of, I was going to say a mistaken attitude, but I would say ignorance or lack of foresight about reliability.
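
The two complaints in this example, that the failure is silent and that nothing retries, can be made concrete with a small sketch of an outgoing queue that does both. It is a minimal illustration under stated assumptions, not a description of how any real mail client works. The class name `Outbox`, its parameters, and the choice of exponential backoff are all hypothetical.

```python
import time
from collections import deque


class Outbox:
    """Queue of outgoing messages that retries failed sends and reports them."""

    def __init__(self, send, max_attempts=3, base_delay=1.0, notify=print):
        self.send = send                  # callable; raises OSError on failure
        self.max_attempts = max_attempts
        self.base_delay = base_delay      # seconds to wait before the first retry
        self.notify = notify              # how the user hears about a failure
        self.queue = deque()

    def submit(self, message):
        self.queue.append(message)

    def flush(self):
        """Try every queued message; return the ones that were delivered."""
        delivered = []
        for _ in range(len(self.queue)):
            message = self.queue.popleft()
            for attempt in range(1, self.max_attempts + 1):
                try:
                    self.send(message)
                    delivered.append(message)
                    break
                except OSError as err:
                    # Tell the user instead of failing silently.
                    self.notify(f"send failed ({attempt}/{self.max_attempts}): {err}")
                    if attempt < self.max_attempts:
                        time.sleep(self.base_delay * 2 ** (attempt - 1))
            else:
                # Every attempt failed: keep the message for a later flush.
                self.queue.append(message)
        return delivered
```

A caller would pass in its own `send` function and call `flush()` whenever connectivity might have returned. Messages that still cannot be sent stay in the queue rather than disappearing.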

Jeff Doolittle 00:18:24 Yeah. And it violates in a way the principle of least surprise, which is that’s not what any normal user would expect an email system to do.

Peter Deutsch 00:18:32 Well, certainly not a normal user who’s used to desktop computing, but that’s also another discussion.

Jeff Doolittle 00:18:40 Sure. Well, as far as network reliability goes, you mentioned before the idea of it not being addressed at the application level. And it sounds like that’s basically what you’re saying: at the transport level and the hardware level, as long as we’re not talking about full outages, error correction and things of this nature are, in a way, solved problems. But then to say that we therefore don’t need to address it at the application level is just another reflection of this fallacy, still happening pretty much every day.

Peter Deutsch 00:19:10 Right. I agree with that. The only distinction that I wanted to make was that dealing with errors at the application level, I think, is not really necessary, while dealing with outages or interruptions at the application level is still extremely relevant. And as kind of a little postscript to that, I should say that one of the things that annoys me the most is that when there is a failure to transmit or some kind of outage, I’ll generally see that something is not happening, but I can’t get any information at all about why or where the outage is. Applications (a) generally don’t share that, and (b) the levels of the stack underneath them may not be set up to provide that information. So that is an oversight on reliability in the lower levels of the stack, if that is in fact the case.

Jeff Doolittle 00:20:06 That makes sense. And I see attempts to try to resolve this a bit more explicitly with newer protocols, such as gRPC, which flat out has a deadline within the payloads that you send by default. So there’s an example of making timeouts front and center. It’s like, you should expect this thing to be processed by a deadline, and if not, you should be ready to handle the fact that it wasn’t processed, as opposed to HTTP, which is much more loose in that regard.
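
The general idea of carrying a deadline along with a request can be sketched with the standard library alone. This is not the gRPC API; it is a hypothetical illustration in which `Deadline`, `DeadlineExceeded`, and `handle` are invented names, and the injectable `clock` parameter exists only to make the behaviour easy to test.

```python
import time


class DeadlineExceeded(Exception):
    """Raised when work is attempted after the deadline has passed."""


class Deadline:
    def __init__(self, seconds, clock=time.monotonic):
        self._clock = clock
        self._expires = clock() + seconds

    def remaining(self):
        """Seconds left before the deadline, never negative."""
        return max(0.0, self._expires - self._clock())

    def check(self):
        if self.remaining() <= 0:
            raise DeadlineExceeded("deadline passed before the work finished")


def handle(request, deadline, steps):
    """Run each step in order, giving up as soon as the deadline has passed."""
    results = []
    for step in steps:
        deadline.check()
        # Each step is told how much time is left so it can budget accordingly.
        results.append(step(request, deadline.remaining()))
    return results
```

The design choice worth noting is that the caller must be prepared for `DeadlineExceeded`, which makes "it was not processed in time" an explicit outcome rather than an indefinite wait.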

Peter Deutsch 00:20:33 It is. But you mentioned timeouts. Something that I’ve been having an ongoing wrangle with my broadband provider about: we’re out in the country and we have wireless broadband, and it is extremely annoyingly unreliable. Even when I call up their tech support, sometimes I can’t get any information from them about where they think the problem is, and it’s not clear that they even have that information. So for example, I don’t believe they have good hop-by-hop outage logs. If they did, they’d be able to say, oh yeah, the problem is in our connection to the backbone; the problem is in this intermediate link between where you are and our hub; the problem is between our antenna and your point of entry; the problem is beyond your point of entry. That’s something they ought to be able to tell me. They ought to have that information, and they don’t. So it’s not just a matter of problem recovery. I think this particular fallacy also leads to inadequate tools for problem diagnosis and communication.
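
What hop-by-hop diagnosis would offer can be shown with a very small sketch: given per-segment health information, report the first segment along the path that is down. The function name `locate_outage`, the segment labels, and the `is_up` probe are hypothetical and are not taken from any provider’s tooling.

```python
def locate_outage(segments, is_up):
    """Return the first failing segment along the path, or None if all are up.

    segments: names ordered from the subscriber outward toward the backbone.
    is_up:    callable taking a segment name and returning True or False.
    """
    for segment in segments:
        if not is_up(segment):
            return segment
    return None


# Segment names loosely follow the ones listed in the conversation.
PATH = [
    "inside the point of entry",
    "antenna to point of entry",
    "intermediate link to hub",
    "hub to backbone",
]
```

With a status table such as `{"intermediate link to hub": False}` and everything else up, `locate_outage(PATH, lambda s: status.get(s, True))` would name the intermediate link, which is the kind of answer a support desk could then relay.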

Jeff Doolittle 00:21:49 Absolutely. And of course you’re telling me this as we’re in the middle of recording a podcast hundreds of miles apart from each other, that your network is not reliable. So hopefully the fallacy will not bite us while we’re here.

Peter Deutsch 00:22:01 Well, as it happens, I have an office that’s not at my home and it has very, very reliable DSL broadband. And that’s where I am right now.

Jeff Doolittle 00:22:10 Well, that’s good to know. But back home in the country, I imagine “the network is reliable” is one fallacy that you clearly live and breathe on a daily basis. That leads very well into the second fallacy, which is that people build applications and systems assuming that latency is zero.

Peter Deutsch 00:22:29 Right. So this is something that’s actually come up in my own interactive work when I’m here at the office and interacting with a server back at the house. One of the failure modes of the wireless broadband is not a complete loss of connectivity, but multiple retries that may cause things as simple as character echoes to get delayed by many seconds. And when that happens, sometimes one isn’t sure whether something actually happened or not. You spoke about timeouts. Timeouts aren’t a bad idea, but for an application to be able to tell you whether something is in the process of happening or not would be, I think, really valuable to users. And so there are really two aspects of latency, and one of them actually relates directly to the next fallacy on the list. There can be latency in the transport of any individual packet, but there can also be response latency that’s caused by application developers having unreasonable expectations, or simply not having thought about the amount of data that has to be transported for a given interaction.

Peter Deutsch 00:23:48 So, you know, because broadband bandwidth has been steadily increasing, this is less important than it has been in the past, but even today, the difference in responsiveness between graphics-heavy web pages and more, I should say, information-oriented web pages can be quite noticeable to the user. If the user was on a hundred-megabit LAN, they might not see any difference at all, but if they’re on the other side of a broadband connection whose bandwidth is only a few hundred kilobits a second, then they are going to see that. I’m in the process of developing actually my first web application, and that actually is an issue, because the server for that application is on the other side of that wireless connection, and that wireless connection in one direction is typically only about 500 kilobits a second. So if you have a web page that’s 20 kilobytes, 20 kilobytes is what, 160 kilobits. So just transmitting that page takes a third of a second. And while that is an artifact of bandwidth and not of latency at the network level, it shows up as latency at the user level. And so the two are really connected.
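
The arithmetic in this example is easy to check. The short sketch below reproduces it, using the same convention as the speaker (1 kilobyte = 1,000 bytes = 8,000 bits). The function name `transfer_seconds` and the optional round-trip term are assumptions added for illustration.

```python
def transfer_seconds(size_bytes, link_bits_per_second, round_trip_seconds=0.0):
    """Time to move a payload over a link, plus an optional fixed round trip."""
    return round_trip_seconds + size_bytes * 8 / link_bits_per_second


# 20 kilobytes over a 500 kilobit-per-second link, as in the example above.
page_time = transfer_seconds(20_000, 500_000)
print(f"{page_time:.2f} s")  # 160,000 bits / 500,000 bits per second
```

The result is 0.32 seconds, which matches the "third of a second" quoted above and shows how a bandwidth limit surfaces to the user as delay.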

Jeff Doolittle 00:25:15 Absolutely. And when you consider that most web applications are loading more than one resource at a time, that’s going to lead to cascading effects. You often run into those situations where a team, or other people, ask, why is the web app slow? And you’ve just got to open up your developer tools and you see the chattiness of all of these individual interactions. And they can just compound.

Peter Deutsch 00:25:37 In fact, that’s a change that I actually made in my application partway through development. It used to store the common JavaScript code, the CSS, and the main page on three separate web pages, and the browser had to make three separate requests for them. Simply sending all the data in one request actually made a noticeable improvement in the responsiveness of the application. So that kind of thing

Jeff Doolittle 00:26:06 What’s funny is we may not even have to worry about that as much in the near future, because with HTTP/2, and then the QUIC protocol, which I’ll reference in the show notes, these protocols are coming out that can actually allow multiple requests to be fulfilled along the same connection. Whereas what we’ve had since the beginning of the internet is more that each resource requires its own connection, and there’s not this ability to sort of inline these requests. So some of that is hopefully going to be somewhat resolved, but it sounds like you’re not so sure.

Peter Deutsch 00:26:38 Well, the thing is that what you just described is doable now, because HTTP is a connectional protocol. So if the browser and the application in the browser are multithreaded, the browser can issue multiple HTTP requests and have them basically be a convoy going through the network in one direction, and have the responses come back in a convoy in the other direction. I suspect that part of the reason that doesn’t happen is that the rules for sequentiality versus parallelism in loading multiple components of a web page are perhaps not thought about as often or as carefully as they could be. And of course, if a page in turn has references to another page, that has to be done sequentially, because you can’t make the second request from the middle of the first page until the first one has been satisfied. So what you’re describing undoubtedly will make the situation better and will make it easier to do well. But I think at this point, the issue is not so much that you couldn’t queue HTTP requests as that it is awkward to do within the current technologies.
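
A back-of-the-envelope model shows why a convoy of requests, or a single bundled request, beats issuing requests one after another. The sketch is deliberately simplified: it assumes a fixed round-trip time per request and a fixed link speed, and it ignores connection setup, congestion control, and server processing. The function names and the sample numbers are hypothetical.

```python
def sequential_seconds(sizes_bytes, rtt_seconds, link_bits_per_second):
    """Each request waits for the previous response: one round trip per item."""
    return sum(rtt_seconds + size * 8 / link_bits_per_second for size in sizes_bytes)


def convoy_seconds(sizes_bytes, rtt_seconds, link_bits_per_second):
    """All requests go out back to back: one round trip, same total bytes."""
    return rtt_seconds + sum(sizes_bytes) * 8 / link_bits_per_second


# Hypothetical page: main HTML, shared JavaScript, and CSS.
sizes = [20_000, 5_000, 5_000]
saved = sequential_seconds(sizes, 0.1, 500_000) - convoy_seconds(sizes, 0.1, 500_000)
print(f"saved {saved:.2f} s")
```

Under these assumptions the bytes on the wire are identical in both cases, and the entire saving comes from paying the round trip once instead of three times. A request that depends on the contents of an earlier response, as noted above, cannot join the convoy and still pays its own round trip.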

Jeff Doolittle 00:27:51 And that’s a good thing to point out. A lot of it is how the browsers have implemented things. Most browsers have some kind of a limit on your connections, which is kind to the server, because if a browser could have a thousand connections, you’re going to have DoS attacks basically just by regular usage of your system. So there’s some throttling and things of that nature that help. Something I noticed in what you were saying there is the interrelationship between these fallacies as well. They can’t necessarily be taken independently, as you were just demonstrating here. With latency is zero and bandwidth is infinite, there’s some interplay between how those fallacies behave in the real world.

Peter Deutsch 00:28:34 And there are other connections on this list. So for example, I mean, we’re not there yet, but there’s the connection between number four, the network is secure, and number nine, we all trust each other. There are connections between those two, and there are connections between number five, the topology doesn’t change, and number six, there is one administrator. Those are also connected. So we’ll get there in due course.

SE Radio 00:28:59 Elastic enables the world’s leading organizations to put their data to work using the power of search. Whether it’s connecting people and teams with content that matters, keeping applications and infrastructure online, or protecting entire digital ecosystems, the Elastic Search Platform is able to surface relevant results with speed and at scale. Learn how you can get started with the Elastic Search Platform for free at elastic.co/se radio.

Jeff Doolittle 00:29:28 Sure. And that’s a great opportunity to transition to number four, which is the network is secure. As we’re diving into that, it again occurs to me that it’s interesting how many of these fallacies seem to pertain greatly to the applications that use the network, not necessarily just to the network itself. Maybe that’s something that people haven’t recognized before. Sure, they’re about networking, but it sounds like in some ways the fallacies are more about the expectations we have of the network and how we use the network, because the networks we have have resolved a lot of these problems, as you pointed out before, at the transport layer, but we ignore them at the application level at our peril.

Peter Deutsch 00:30:12 Absolutely. And in fact, for number four, which we’re just about to talk about, the network is secure, there’s a saying in the security community, which, you know, I’m not a security guy, but it’s kind of stuck with me, which is that in a layered system, security can be lost at any level. The situation with network security is actually kind of interesting, because to go along with what you just said about the fact that these issues are not just about the network infrastructure but about the applications as well, there’s a third thing that they are about, which is the environment of use. In the early 1990s, networking was very new, and an awful lot of the networking that was happening was happening within technology organizations, within research centers, probably within the military, I wouldn’t know. And there, for example, people started out with an experience of latency being essentially zero, because practically all of their networking was on campus or intra-organization networking.

Peter Deutsch 00:31:19 And now, well, it’s kind of interesting. We went through an era in which bandwidth was a big problem because consumers, end users, non-technical users, were piling into the network world faster than the telecoms or whoever could build the capacity. And now the pendulum has to some extent swung back the other way. It’s now pretty routine to do consumer-level real-time or near-real-time video conferencing. So bandwidth isn’t infinite, but the importance of that particular fallacy went way up for a while and has now gone back down again, somewhat. Whereas for number four, the network being secure, the importance of that issue has just continued to go up. First, we went from a situation where almost all the networking was done within fairly well-established trust boundaries to a situation where all of a sudden we had all these consumers piling onto the network. And we’re now in a situation where, number one, because of malware, the security of the end users is much more difficult and more complicated than it was 20 years ago. I have a whole rap on malware, which is also for another day. And also, with the use of networking, including broadband networking, within the government and within higher-security environments, the incentive for bad players to attack networking has increased greatly. So the level of sophistication of security challenges and security threats has also risen dramatically compared with 30 years ago.

Jeff Doolittle 00:33:12 Which is a great point. The more people are on the network, the more incentive there is for bad actors to try to...

Peter Deutsch 00:33:19 The more people there are on the network, and more specifically the greater the value of the information being transmitted and the greater the value of that information not being revealed, the greater the incentive. Now, you know, on the flip side, the situation has improved. It hasn’t, I believe, improved commensurate with the rise in threats, but for example, you know, the fact that with HTTPS and SMTP over TLS at least hop-to-hop encryption is now becoming kind of the norm, that is certainly an improvement in a big class of security situations.

Jeff Doolittle 00:34:02 Sure. And that’s hop to hop. Now, where would you see possibly there being other areas where maybe it hasn’t been addressed?

Peter Deutsch 00:34:09 Well, so let me give you an example. If you look at email, end-to-end email security is a known, I wouldn’t go so far as to say it’s a solved problem, but there are usable technologies for end-to-end email encryption. And there are products out there that do it. I think Signal does it, I think Telegram does it. I think there are a couple of others. But in an example of something that causes problems, I think, perhaps in all of these areas, Google will not do it because their business model depends on their being able to read people’s emails. And similarly, I think, I have no data to back this up and I’m not generally a conspiracy theorist, but I wouldn’t be surprised if there had been some behind-the-scenes discouragement, let’s say, from the NSA, which had the effect of end-to-end encryption not being built in from the start in the email applications offered by, for example, Microsoft or Apple.

Jeff Doolittle 00:35:18 Yeah. Which is so interesting because, and again, I, and I agree with you, it doesn’t really take conspiracy theories to explain a lot of things. It just takes following the incentives. And of course there are people who would have an incentive to do what you just described. And yet ironically, it leaves those same entities prone to attacks themselves. So it’s sort of, it’s sort of a doubly bad impact.

Peter Deutsch 00:35:39 That is true. Although you do have to distinguish a little bit between the entities being vulnerable and the entities’ services or customers being vulnerable.

Jeff Doolittle 00:35:47 You know, that’s true too. Right.

Peter Deutsch 00:35:49 So, I mean, I’m sure that, well, I’m not sure. I wouldn’t be surprised for example, if Google used more secure email storage and transport within the organization than they support for their customers, but that’s pure speculation.

Jeff Doolittle 00:36:05 And again, but it would be the incentives would probably bear that out, whether it’s true or not, but it seems like it’s plausible at least based on the incentives.

Peter Deutsch 00:36:13 Sure. So at any rate, so that’s kind of the capsule summary, which is that security is now much more on people’s radar. Hop-to-hop encryption is becoming used widely. A lot of the security issues now are ones that are not in the network per se. They’re issues of phishing and they’re issues of malware penetration of the end users.

Jeff Doolittle 00:36:37 Absolutely. And I also think of other things, such as mTLS, which is mutual TLS, and zero-trust networking, which are becoming more common. But again, even if you’re dealing with these things at the network layer, that doesn’t mean that the payloads that are going over that network are secure. And that’s a good point you bring up there: you know, “the network is secure” isn’t just about the transmission. It’s also about the payload within the transmission. And if we assume that that’s secure because the network is secure, assuming the network actually is secure, we still have problems that are being left unaddressed there, such as email not being secure.

Peter Deutsch 00:37:13 I mean, the payload may be secure in the sense that you can have a very, very high level of confidence that it hasn’t been corrupted in transit. The issue of what that payload does when it arrives, that is exactly part of the larger issue of network use. And that is something people don’t, I believe, have a good handle on.

Jeff Doolittle 00:37:31 Yeah. Yeah. Don’t spend enough time thinking about it and designing systems to deal with it. Well, we’ve gone through the four original fallacies from Sun that you certainly included in your report, but now we’re about to move into the four that you contributed in your time at Sun. And this fifth one is near and dear to my heart as a software architect, because I think a lot of times topology is left undiscussed or not really considered appropriately. And a lot of architectures are tightly coupled to it, in a similar way to what we described before about XNS decoupling the routing from the network. And I think this is a pretty common problem generally with the assumption that the topology doesn’t change. And also, based on that, we make assumptions about our application architectures that couple us to topology.

Peter Deutsch 00:38:21 So one of the things that has definitely changed tremendously in the last 30 years is the fact that so much of network usage is now based on mobile devices. So networking has had to deal with a situation where the topology, at least at the edge, is changing constantly. And, you know, new protocols have evolved for that, but at least to my eye, as a non-networking guy, they seem a little jury-rigged. So if you want to do TCP/IP networking, and you have a device that’s moving from one network to another, you have to have protocols, you know, to change its IP address as it’s moving. And once you have that, you know, once you recognize that that’s happening, then you have on top of that the difficulty of maintaining sessions. You know, if you’re talking on a cell phone on a long-distance trip, a long-distance road trip or train trip, your cell phone is going to be handed off from one tower to another as you travel.

Peter Deutsch 00:39:28 And the protocols for dealing with that constant change in connectivity have to take that into account. And as I said before, you know, IPv4 has required, you know, this extra layer on top of it that doesn’t play with applications very well. If the application, well, it may not play with applications or operating systems as well as a location-independent addressing scheme would. But so that’s, I mean, that’s really all that I would have to say about that. The genesis of this, by the way, was that while I was at Sun, I was hearing constant, you know, mutterings and grumblings, both from users and to a lesser extent from network administrators, that, oh, you know, so-and-so moved his office from here to there and that put him on a different network, and yeah, yeah, yeah. You know, that problem just, you know, doesn’t have to exist if you have the right addressing architecture down at the bottom level.
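
The idea of location-independent addressing described above can be illustrated with a small sketch. This is not how XNS, IPv4 mobility extensions, or any real protocol works in detail; the class names, the device ID "laptop-42", and the addresses are all hypothetical. It only shows why a session keyed by a stable ID survives an office move, while one keyed by a network address would not.

```python
from dataclasses import dataclass, field


@dataclass
class Directory:
    """Hypothetical map from a stable device ID to its current network address."""
    locations: dict[str, str] = field(default_factory=dict)

    def move(self, device_id: str, new_address: str) -> None:
        # Only this mapping changes when a device changes networks.
        self.locations[device_id] = new_address

    def resolve(self, device_id: str) -> str:
        return self.locations[device_id]


@dataclass
class Session:
    """A session identified by the peer's stable ID, not by its address."""
    peer_id: str
    messages_sent: int = 0

    def send(self, directory: Directory, payload: str) -> str:
        # The address is looked up at send time, so a move is invisible here.
        address = directory.resolve(self.peer_id)
        self.messages_sent += 1
        return f"{payload} -> {address}"


directory = Directory()
directory.move("laptop-42", "10.1.0.7")    # made-up address on the first network
session = Session(peer_id="laptop-42")
first = session.send(directory, "hello")

directory.move("laptop-42", "10.2.0.19")   # office move: new network, same ID
second = session.send(directory, "still here")

print(first)
print(second)
```

The session object is never touched by the move; only the directory entry changes, which is the property the speaker attributes to an addressing scheme that separates identity from routing.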

Jeff Doolittle 00:40:26 But we don’t.

Peter Deutsch 00:40:27 We don’t. So that problem is going to be with us. In IPv6, you know, to be perfectly honest, I don’t remember what they did about that. It’ll expose my ignorance to say that I don’t remember whether IPv6 uses location-independent IDs or not, or whether it still combines routing with addressing.

Jeff Doolittle 00:40:46 I’ll have to plead ignorance there somewhat as well. I know, because of the length of the address space, one of the intentions, from my understanding, was to enable more IoT, or Internet of Things, type scenarios. Although as you’re describing the XNS protocol, it sounds like once again Xerox was ahead of their time, but the execution was left to be done by someone else.

Peter Deutsch 00:41:06 Yeah. So, yeah, that’s right. I mean, my old colleague Butler Lampson, who, by the way, originally gave me the idea for just-in-time compilation, and I don’t think has been adequately credited for it, once said the single worst mistake that you can make in the design of a system is not to make an address space big enough.

Jeff Doolittle 00:41:26 We might need to add that to the list of the hardest things in computer science, which are, right, what is it? It’s naming things and cache invalidation. Those are the two, and then it’s off-by-one problems. But now there’s a fourth, which is failing to make a large enough address space.

Peter Deutsch 00:41:43 Butler published a great paper that talked about caching and cache invalidation also.

Jeff Doolittle 00:41:48 Oh, that’s great. That’s great. I will look for that and try to put that in the show notes.

SE Radio 00:41:55 SE Radio listeners, we want to hear from you. Please visit se-radio.net/survey to share a little information about your professional interests and listening habits. It takes less than two minutes to help us continue to make SE Radio even better. Responses to the survey are completely confidential. That’s se-radio.net/survey. Thanks for your support of the show. We look forward to hearing from you soon.

Peter Deutsch 00:42:23 The issue of changing topology is also coupled with the next issue, of administration. You know, even at Sun, which theoretically should have had a single administrator for all the network connectivity issues in the company, they sort of couldn’t, because, you know, people moved offices and moved devices, and those things, you know, really should be handled at the localized level. There shouldn’t have to be an administrator to deal with that. But that’s only administration as it relates to topology. Now, administration as it relates to policy, you know, which is on the Wikipedia page, is a different issue. And I think that one of the things that has become pretty clear in these 30 years in networking, and which I think generally has been handled well, is the realization that the potential of networking and internetworking can only be realized if there are well-established standards that people actually implement.

Peter Deutsch 00:43:26 And of course, every company has business incentives to add their own bells and whistles that only work within the company, you know, and that will always happen. But what has made the internet as successful as it has been is the fact that it has done as good a job as it has of being standards-based. So the relationship of that with this fallacy here is to say that the best administration, at least of some things, is administration that doesn’t need an administrator. It is administration on the basis of standards.

Jeff Doolittle 00:44:13 Yeah. That’s a great point.

Peter Deutsch 00:44:13 I’m an extremely strong believer in good open standards. And I think, I mean, I’m also a great supporter of open source software, but I believe that open standards for both software and data formats are more important than the software itself being open source.

Jeff Doolittle 00:44:33 Say that again?

Peter Deutsch 00:44:34 I believe that good open standards, both for software interfaces and for data formats are more important than for software itself to be open source.

Jeff Doolittle 00:44:47 I think that’s a great insight. Yeah, absolutely. And I think that’s a great point. You mentioned interfaces and formats, and maybe some clarification there: when you say interface, you could basically say, in a sense, it’s the API. I mean, a web API, it could be, but it’s what’s exposed, how you interact with this thing. And then the data flows from it.

Peter Deutsch 00:45:06 Right. So by interfaces for software, I really did mean API. And, you know, for networks, of course, it’s the protocols. But this also applies to the data formats. Too many applications are data jails. You use them to create, you know, data of great value to you, but you are then locked to that application to be able to share the data, convert the data, extract from the data, you know, do operations on that data. I won’t go into this, you know, at tremendous length, but my other career is as a musician, as a composer, and the two leading commercial musical score editing programs, Finale and Sibelius, both deliberately not only refuse to publish the formats of their data, but at least Sibelius actually goes to some trouble to encrypt it. So they do not want any third party to be able to read the data, read the information that you created using their application. And I think this is, well, we’re on the air, so I’ll just say, I think this is very unfortunate.

Jeff Doolittle 00:46:22 It is unfortunate. It sounds like, you know, Microsoft Office before they changed it to an open standard. And then that changed a lot of times, and it didn’t seem to hurt Microsoft to make this... not that, and I’m not commenting on how good the standard is. I’m just saying...

Peter Deutsch 00:46:36 It didn’t hurt Microsoft, but something that you should know is that there’s a difference between making the form of the standard open and making the semantics of the standard open. Because even with the DOCX format, even with the XML-based format, being able to render a Microsoft Word document accurately, and when I say accurately, I mean render it the same way as Microsoft software, requires knowing a tremendous amount about quirks and peculiarities of Microsoft’s implementation. And that’s why I said before that what’s important is not only that the standard be open, but that the players, the implementers, recognize that it’s to their greatest advantage to implement it faithfully. Well, this has all gone a little far afield.

Jeff Doolittle 00:47:23 Sure, it has. But back to the point about administration: I think that the idea is that standards are actually a form of administration, just like the consumer market is a form of regulation, in a sense. And so if you can get sort of inbuilt administration without an administrator, then you can have significant benefits from doing so.

Peter Deutsch 00:47:44 You can, and that leads into a whole fascinating discussion of political philosophy. And, um, I’m going to have to stop myself from going there.

Jeff Doolittle 00:47:52 Different podcast, different episode. But from that, let’s move on to number seven. And intriguingly, to me, as I look at seven, which is “transport cost is zero,” in some ways it relates to number three, which is “bandwidth is infinite.” There’s a relationship there.

Peter Deutsch 00:48:08 Yeah. So I’m trying to remember what was going through my mind when I added that one to the list. You know, I don’t know that I can really add a whole lot to that because I don’t honestly remember exactly what the charging model for networking was like 30 years ago. And one of the things that is quite striking is that today the transport cost, at least as seen by users, is generally zero.

Jeff Doolittle 00:48:34 Yes, it is. But ironically, the relevance of this, I think, has greatly grown for software engineers, because very often they will design a system, and I’ve seen this multiple times in my career, without taking into account the transport costs. And then sometimes, depending on the nature of the data and the usage metrics and things of this nature, suddenly you discover your transport cost is not zero and it can bite you.

Peter Deutsch 00:49:00 Well, so let me push on that just a little bit. If you look at a company that’s offering some kind of service through the web, let’s say, their primary usage-based cost is going to be server capacity. And it’s my impression that for, you know, high-bandwidth network connections, or whatever they’re called, I was under the impression that those are flat rate. So within a fairly broad range, the transport cost, it’s not zero, but it’s usage insensitive. Now, you know, as your traffic builds up, you know, then there are going to be times when you have to, you know, go to higher tiers of bandwidth. So my impression is that it’s not exactly transport costs that you’re seeing, but bandwidth or capacity costs.
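
The distinction drawn above, between a cost that scales with every byte and a flat-rate cost that only jumps when you outgrow a capacity tier, can be shown with a few lines of arithmetic. The tier capacities and prices below are made-up numbers chosen purely for illustration; they are not real provider pricing, and the function name is hypothetical.

```python
# Hypothetical flat-rate tiers: (capacity in Mbit/s, monthly price).
# These numbers are invented for the example only.
TIERS = [(100, 50.0), (1_000, 200.0), (10_000, 900.0)]


def monthly_cost(peak_mbps: float) -> float:
    """Return the price of the smallest tier whose capacity covers the peak demand."""
    for capacity, price in TIERS:
        if peak_mbps <= capacity:
            return price
    raise ValueError("peak demand exceeds the largest tier")


# Within a tier the cost is usage insensitive; crossing a boundary is a step.
for peak in (20, 90, 101, 950):
    print(peak, monthly_cost(peak))
```

Under this assumed model, going from 20 to 90 Mbit/s costs nothing extra, while going from 90 to 101 Mbit/s quadruples the bill, which is one way a design that ignored transport can be surprised later.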

Jeff Doolittle 00:49:54 And that’s possible. Yeah. And that’s fair, you know, if you’re crossing clouds, for example, there’s ways that you can try to reduce that by setting up, you know, mutual VPNs and things of this nature. But I think it’s in, you know, to your point, you mentioned server capacity, which, you know, compute and storage would be the primary elements of that. I still think not considering the transport costs in relation to bandwidth and things is something we still need to be careful of. And I obviously could be wrong about this, but even if it’s not the biggest issue that we face, it seems to me, in some ways it’s in ways more of an issue now maybe than it was 30 years ago. In other words, it was prescient of you to see this then when the pricing models were different than they are now.

Peter Deutsch 00:50:36 Interesting. Well, okay, this may be a blind spot on my part, because I don’t really have much of anything to add.

Jeff Doolittle 00:50:43 As well. So let’s move on to number eight, right, which, you know, somewhat has to do with topology. Intriguingly, you know, five and eight, I think there’s a bit of a relationship there.

Peter Deutsch 00:50:51 It has something to do with topology, but I think it has a lot more to do with the point that we were talking about earlier, which, you know, is not really exactly a fallacy on the list, which is the issue of standards. Because the greater the adherence of a multi-network or an internetwork is to standards, the less of an issue homogeneity at the hardware level or at the implementation level becomes.

Jeff Doolittle 00:51:17 Because the standards allow you to,

Peter Deutsch 00:51:20 Because the standards make the network not only appear homogeneous, but in operation be sufficiently homogeneous.

Jeff Doolittle 00:51:32 Right. And even if there’s multiple standards, as long as they’re adopted appropriately and consistently, it’s still a form of homogeneity. It’s just maybe more of a composed homogeneity rather than like a dictated top-down homogeneity.

Peter Deutsch 00:51:48 Perhaps. One of my former colleagues, Jim Morris, who was a great source of wry sayings, once said, and I like this so much that I call it Morris’ Dictum, that the great thing about standards is that there are so many of them to choose from.

Jeff Doolittle 00:52:07 Yes, yes. And that is a problem, of course.

Peter Deutsch 00:52:10 But the thing is that yes, I mean, heterogeneity is an issue. And the thing is that I can’t say I have, I have a lot of insight into what this observation translates into when you’re developing well, in my case, networking software, the thing is you can only go so far because one of the big issues in heterogeneous environments is that the very semantic model of what it is that’s being represented may be different. I don’t know enough about network technology in detail, to give you an example, but I have a good one from an arena that I’m more familiar with, which is musical score representations. So for example, one musical score representation may say, okay, you know, you have multiple staffs and you have notes on each staff, right? There’s a different score representation that may say, okay, you have multiple staffs, but you may have more than one voice on each staff.

Peter Deutsch 00:53:14 And so now, you know, notes get linked together because they’re all part of the same voice. And then that representation may even have the concept of a voice going from one staff to another and back again. You see this sometimes in piano music, where you have what amounts to a single voice and it has to transition from one hand to the other. You can’t do automatic conversion between those two formats because one of them has information in it that the other one just doesn’t. And you know, if I had time to think, I could probably come up with some examples of this in the networking world. So from what I can see, the best approach to heterogeneity in the networking world has simply been to get rid of it by, you know, a community-based process that develops standards that everyone is willing to sign up to.
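
The score example above can be made concrete with a toy sketch of two representations whose semantic models differ. The types and field names here are hypothetical and far simpler than any real notation format; the point is only that converting from the voice-aware model to the staff-only model and back cannot recover the voice information.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VoicedNote:
    """Richer model: each note knows its staff and the voice it belongs to."""
    staff: int
    voice: str
    pitch: str


@dataclass(frozen=True)
class StaffNote:
    """Simpler model: notes belong to a staff; there is no notion of a voice."""
    staff: int
    pitch: str


def to_staff_only(notes: list[VoicedNote]) -> list[StaffNote]:
    # The voice field has nowhere to go in the simpler model, so it is dropped.
    return [StaffNote(n.staff, n.pitch) for n in notes]


def to_voiced(notes: list[StaffNote], default_voice: str = "unknown") -> list[VoicedNote]:
    # Going back, the converter can only guess; here it uses a placeholder.
    return [VoicedNote(n.staff, default_voice, n.pitch) for n in notes]


# A melody that crosses from staff 1 to staff 2, plus a separate bass voice.
rich = [
    VoicedNote(1, "melody", "C5"),
    VoicedNote(2, "melody", "G3"),
    VoicedNote(2, "bass", "C2"),
]

restored = to_voiced(to_staff_only(rich))
print(restored == rich)                  # round trip is not lossless
print({n.voice for n in restored})       # only the placeholder voice survives
```

After the round trip, nothing distinguishes the melody note on staff 2 from the bass note on the same staff, which is the kind of loss that no automatic converter can undo.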

Peter Deutsch 00:54:04 I think there is more awareness of this as an issue than there was 30 years ago, because 30 years ago, networks and networking hardware and software weren’t kind of, you know, butting up against each other the way they do today. And I also think that, as I said before, the industry hasn’t done that bad a job of actually carrying it out. And I think that makes sense. I’ll give you an example: HTML5. There are some things about HTML5 I really don’t like, but it is a part of networking technology, in my opinion, even though, you know, it isn’t part of transport, where the industry has done a creditable job of moving a standard forward to include new areas of application and new opportunities.

Jeff Doolittle 00:54:53 I think of the power grid and the mistake that someone could make of assuming that the power network is homogeneous and failing to recognize that, you know, some power grids are on 50 Hertz, some are on 60 Hertz. And if you don’t take that into account, you’re going to have problems. And that’s one of many examples of, if you assume the power grid is homogeneous across the world, you’d have issues and there are ways to resolve it.

Peter Deutsch 00:55:17 Yeah. But that’s a great example. And it does kind of tie back to what I said a moment ago, which is that if you kind of know what the space of heterogeneous alternatives is, then, you know, not keeping them in mind is definitely a fallacy of development. It’s when, you know, you have spheres of influence or spheres of deployment that come in contact with each other for the first time, and you have entities that may have started out from quite different assumptions that now have to be able to talk to each other. That’s where the problems really come up. That makes sense. There are tons of good science fiction stories about this.

Jeff Doolittle 00:55:56 Absolutely. Well, and even the power grid, you know, what I just described is basically two different standards, right? Not a complete, you know, Wild West, we’re doing whatever we want, and now we’re trying to interface. That would be “the network is homogeneous” at its worst, you know, assuming you believe that fallacy.

Peter Deutsch 00:56:12 But the power grid is a good example. There’s 50 hertz versus 60 hertz. There’s 110 versus 220. So yeah.

Jeff Doolittle 00:56:20 Absolutely. Well, and it used to be you had to check to make sure what you were getting out of the wall was what you expected for a particular device. And we’ve resolved that problem. And in a similar way, a lot of these things have been resolved with the way that the network, the global network, works.

Peter Deutsch 00:56:34 Yes. So yeah, I mean, these issues are all still there. And as we’ve seen, you know, going through them, some of them have been resolved better than others and, you know, some of them still aren’t sufficiently on people’s radar. But, you know, with the additional 30 years of experience with small-scale and then large-scale networking, I’m temperamentally a pessimist. No, really, I am. But with respect to the issues that we’ve, you know, that we’ve just been over here, you know, I have, you know, a pretty, sort of, cautiously optimistic outlook.

Jeff Doolittle 00:57:06 Well, you might’ve said that until we get to number nine. I don’t know. Maybe you can tell me, but number nine is not originally part of the eight fallacies, but you have mentioned to me previously that it does belong on the list, and we’re not sure of the genesis, possibly James Gosling. But let’s talk about number nine and perhaps the source of it and why you think it belongs on the list.

Peter Deutsch 00:57:27 Well, so it’s really an expansion of number four and it extends beyond the boundaries of the physical network, the issue of security. And, you know, it’s stated in kind of an informal way, if I were going to restate it, I would say something like the communicating parties trust each other, or the party that you’re communicating with is trustworthy. Yeah. I think, I think that would be the way I would say it, the party that you’re communicating with is trustworthy.

Jeff Doolittle 00:57:58 Okay. And assuming that they are, is the root of this fallacy

Peter Deutsch 00:58:02 It’s a fallacy because, I mean, again, this is a much, much larger philosophical question of, you know, what’s an appropriate level of trust? What are appropriate kinds of trust? What are appropriate trust guarantee mechanisms? These are, you know, age-old questions of human relations. And they are also age-old questions of business relationships, of contract, of law. You know, they come up over and over again in the context of networking. I alluded earlier to what I think are the two big edge security problems that I don’t think we have a very good handle on yet. You know, one of them is phishing and the other one is end-user malware. And I believe that there are known, deployable, proven technological approaches that would tremendously reduce the risk of end-user malware. I don’t think those approaches are being deployed because they would have a very high commercial cost. And what we’re seeing instead is bizarre and Byzantine attempts to mitigate them. For goodness’ sake, at the CPU level, there’s junk that’s been slathered into the more recent Intel CPU designs specifically to try to do certain kinds of sandboxing and bad code detection at the CPU level. And I think that this is, you know, horrendously misguided. But I mean, those are the two sources that I see of end-to-end trust issues, namely whether the other end is either deliberately and knowingly or inadvertently and ignorantly being malicious.

SE Radio 00:59:52 Conf42 is a new series of must-watch tech conferences. It’s online and free to attend, with hybrid events coming next year. Conf42 conferences cover topics like cloud, SRE, chaos engineering, machine learning, and quantum computing, as well as programming languages, including Python, Golang, Rust, and JavaScript. Register at conf42.com/seradio. That’s C-O-N-F, number four, number two, dot com slash S-E radio. See you there for the ultimate answers.

Jeff Doolittle 01:00:24 At the end of the day, from a networking standpoint, the solutions might be quite similar, but from a human standpoint, obviously intentionality plays a big role in all of this. And I love the point you made about, you know, so much of this has to do it relates to so much more about things like political philosophy and human systems of communication and connection. And really at the end of the day, the network is about us. It’s about people and how we communicate and how we interconnect with each other.

Peter Deutsch 01:00:53 It is, and that’s been so much more true in the COVID era when so much more human interaction is being network mediated.

Jeff Doolittle 01:01:00 Absolutely. And, you know, Hey, the network certainly has improved in 30 years, because I think for those of us who are very technologically savvy and even those who might be less so COVID would have been quite different 30 years ago than it was in 2020. And we can attribute a lot of that to the technological expansion and improvements that we’ve had over the last decades.

Peter Deutsch 01:01:21 Yeah. That’s a great point. Yeah. I mean, you know, to think about what it must have been like in the great pandemic a hundred years ago, that was in the late 19 teens, the telephone was a fairly new technology radio wasn’t even widespread.

Jeff Doolittle 01:01:37 Yes. Even electricity was not universal at that point. Well, Peter, this has been a fascinating conversation and I’m so thankful that you’ve joined me on the show. If people want to find out more about what you’re up to, where would you recommend that they go?

Peter Deutsch 01:01:54 Well, so what I’m up to these days is I’m basically not doing software technology anymore. I’m a composer. So if you want to see what I’m up to, go to www.lpd.org.

Jeff Doolittle 01:02:08 Okay, lpd.org. But you did, now, true confession though, you did tell me you’re writing your first web application. So there’s some software still going on.

Peter Deutsch 01:02:19 Oh, I do all kinds of software, but you know, I think I might’ve said this to you when we spoke earlier, you know how a lot of people, mostly men, you know, like to tinker with old cars and old motorcycles? It’s sort of like that with me in software. You know, it’s in my blood. I love doing this stuff. I’m just not, like, doing it in public. This application that I’m building, it’s not a commercial application. It’s something to help our household manage the plethora of tasks and projects that we seem to be engaged in. And, you know, it might be good enough to be a commercial project someday. But as I might’ve said earlier, it’s built on an architecture that doesn’t scale up and that has other limitations. And you know, some of them relate to these fallacies. I mean, it relies on a low-latency network. And I know that, but the thing is, I knew that going in and I said, okay, you know, you have to understand what problem you’re trying to solve. The problem I’m trying to solve is local to a household. It doesn’t have to deal with some of these issues.

Jeff Doolittle 01:03:24 Know your problem. Know your use case. And don’t forget the fallacies of distributed computing while you’re at it. Absolutely. You don’t need to boil the ocean and build a cloud architecture just to manage your household.

Peter Deutsch 01:03:36 That’s true. So thank you very much for having invited me. This has been a lot of fun.

Jeff Doolittle 01:03:42 For me too, as well. Yeah. Thanks so much for being here. This is Jeff Doolittle for Software Engineering Radio. Thanks for listening.

SE Radio 01:03:51 Thanks for listening to SE Radio, an educational program brought to you by IEEE Software magazine. For more about the podcast, including other episodes, visit our [email protected]. To provide feedback, you can comment on each episode on the website or reach us on LinkedIn, Facebook, Twitter, or through our Slack [email protected]. You can also email [email protected]. This and all other episodes of SE Radio are licensed under Creative Commons license 2.5. Thanks for listening.

[End of Audio]

SE Radio theme: “Broken Reality” by Kevin MacLeod (incompetech.com — Licensed under Creative Commons: By Attribution 3.0)
