James Smith of Bugsnag discusses software bugs and quality. Host Priyanka Raghavan spoke with him on topics ranging from the causes and types of bugs to the history of bugs, and how user experience and environments cause different bugs. The show ends with parting advice on handling bugs: set up measurements and benchmarks, measure against them, and then decide on a course of action for fixes based on the data.

This episode is sponsored by BMC Software.

Show Notes

Related Links

- Technical debt quadrant by Martin Fowler

- 4 Worst bugs in history

- Predictive analysis of bugs

- Gerald Weinberg on Bugs and software quality

- Matt Lacey on Mobile usability

- Loopj

Transcript

Transcript brought to you by IEEE Software

This transcript was automatically generated. To suggest improvements in the text, please contact [email protected].

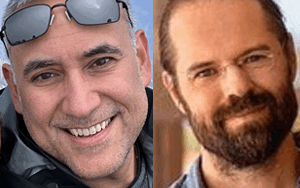

Priyanka Raghavan 00:00:48 This is Priyanka Raghavan for Software Engineering Radio. Today we are going to be talking about software bugs and why it’s okay to ship your software with bugs. My guest for today is James Smith, the CEO and co-founder of Bugsnag. James is an entrepreneur and a software engineer with a broad base of experience. He’s very passionate about building great products, growing teams, and scaling infrastructure with data. He has also developed many open source projects, such as LoopJ, which is used by companies such as Twitter, Pinterest, and Trello. So welcome to the show, James.

James Smith Thank you for having me, Priyanka.

Priyanka Raghavan So let’s jump right in. We want to talk about bugs. In our industry, as you know, we’ve moved through many different software processes, from waterfall back in the day to agile today, and we have all these continuous integration processes. We have DevOps, DevSecOps, BizDevOps, all with the intent of reducing bugs before you ship the software. So we’re curious to know why you think it’s okay to ship software with bugs.

James Smith Yeah. So to be clear, I think it’s a really, really good idea to reduce bugs as much as possible before you ship your software, especially when it comes to best practices like adding linting and having CI/CD run your tests and builds every single time. But the reality is that it’s a trade-off. If you want to be competitive in delivering your features or your product to your customer base, you have to ask: would it be more valuable to deliver those features to my customers quicker? So one part of this question is: will reducing the time we spend on human-powered QA, or on test and code coverage, get us to market quicker? But in reality, I think the big reason why it’s okay to have bugs slip through to production is that there’s really nothing you can do about it. Even if you have the absolute best, most locked-down setup, with a hundred percent code coverage and linting, and you run your tests every time you type a character on the keyboard, you cannot test every single experience that your customers are going to have. This is particularly true in client-side environments like mobile and the browser, where there are tens of thousands of different Android phones on the market, and you’re not going to be able to run your software on every single device, with every piece of data, through every flow in your software. So it’s more of a reality. You can reduce the egregious bugs and the worst-case bugs, but some will absolutely slip through. And sometimes it’s okay to have bugs slip through to production so that you can get a feature out to market quicker. One really good example of that, if I remember rightly: a few years ago, Lyft and Uber were both working on their shared rides experience. Before this launched, you had to ride on your own in a Lyft or an Uber.
It was called UberPool and Lyft Line. And it turns out that they were both working on it in parallel. Uber launched theirs first, and then Lyft launched theirs the day after, or within a week of Uber launching. Uber got all the press, all of the excitement and all the buzz about it. But Lyft were like, dammit, we were ready. We were right there. We had been building it too, with different features and different experiences. So sometimes it’s okay to trade these things off.

Priyanka Raghavan 00:04:39 I mean, yeah, because you have to get to market quicker. Okay, so you’re saying it’s good to do a lot of static code analysis, unit testing, load testing, and security testing early, and all of that is good, but you can’t be perfect. Would that be right if I were to sum it up?

James Smith 00:05:00 That’s right. And I’m also oversimplifying here, because there are environments, like building a space shuttle or medical software, where you’re going to get into big trouble: people might die or billions of dollars might be lost if you don’t catch that bug. There you want to be a lot more careful and take a different approach. But if you’re building web software that you deliver on a regular basis, you can hotfix and patch. The same goes for apps delivered on a phone or desktop that you can update. There you can get away with a lot more.

Priyanka Raghavan 00:05:33 Okay. But I was curious, in your experience, there are certain types of bugs that primarily come from, say, a developer being a little lazy and not doing enough checking, those kinds of things. What’s your take on that? I guess a lot of this early testing helps with that.

James Smith 00:05:54 Yeah. It really depends on the language you’re working with. There’s actually a really big variance on this between languages. There are modern languages like Swift that try to force you not to use uninitialized variables, and this is a really common cause of problems in other languages like JavaScript, or Ruby even, or Java. I know what you mean, but I would argue it’s probably not developer laziness. I think it’s more a lack of care or a lack of understanding. You’ll see that the junior developers, the people who’ve only been in their career for a couple of years, are going to be the ones racking up the null pointer exceptions and uninitialized variables. But there are ways around this. In engineering organizations, I feel it’s the job of the engineering managers and the leaders on the team to set up the IDE rules, the linting rules, and the checks to make sure those things get caught.
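Swift makes a possibly-missing value part of its type through optionals. For JavaScript code, TypeScript’s `strictNullChecks` compiler option (enabled by `strict`) gives a comparable compile-time check. A minimal sketch, assuming a hypothetical `User` shape: with strict null checks on, writing `user.profile.name` directly would not compile, so the missing case has to be handled explicitly.

```typescript
// Hypothetical data shape for illustration: the profile and its name may be absent.
interface Profile {
  name?: string;
}

interface User {
  id: number;
  profile?: Profile;
}

// With "strictNullChecks" enabled, `user.profile.name` would be a compile error
// here, because `user` is `User | undefined` and `profile` is `Profile | undefined`.
function displayName(user: User | undefined): string {
  // Optional chaining stops at the first null/undefined link, and `??`
  // supplies a fallback instead of throwing a TypeError at runtime.
  return user?.profile?.name ?? "Anonymous";
}

console.log(displayName({ id: 1, profile: { name: "Ada" } })); // Ada
console.log(displayName({ id: 2 })); // Anonymous
console.log(displayName(undefined)); // Anonymous
```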

James Smith 00:06:53 I think most people listening will have done this exact same thing before: sometimes you just want to get the thing to work, because you’re like, right, if I just connect this last piece of the puzzle, I can see it doing the thing I want it to do. Along that journey, you connect all the pipes together without putting in all of the perfect exception handling for the weird edge cases. That’s fine, as long as you come back in, clean it up, and make sure you handle these things. And yes, some languages handle this much better than others. There’s a whole wave of modern languages with language features that try to stop bad behavior from developers. But even outside of that, there’s IDE land. You can write Java code in Notepad if you want, but most Java developers are using some kind of IDE, and when you call a function that throws an exception, the IDE will generate code to make sure you’re capturing and handling that exception correctly. So most IDEs have features baked in to say, hey, heads up, don’t forget you need to do this. So I think it’s a lack of experience, a lack of care, or maybe getting excited about delivering these features to your customers or seeing them work yourself. But there are definitely ways around it.

Priyanka Raghavan 00:08:11 Actually, since we’re talking about languages, do you see more bugs in certain languages?

James Smith 00:08:22 Oh yeah. You know what, we should do a report on this. We have plenty of data on it, so we should definitely run a report, but I’ll tell you off the top of my head: JavaScript, a hundred percent, and there are no surprises there. JavaScript is so easy to use. It’s a lot of people’s first language, and a lot of junior developers pick it up. There’s been a lot of buzz and excitement around it for full stack. So, as I said earlier, the more junior developers are going to have more bugs, but also some of the language features seemed like a good idea at the time, and I think the world is moving away from them. Oh, and by the way, I love JavaScript. I think it’s a great language. Bugsnag’s front end is actually moving from JavaScript to TypeScript, which in my mind is just a way to make JavaScript more sensible. It’s still JavaScript, but all of the dirty things you can do with JavaScript get people in trouble big time: even just equivalence and equality relationships, and all the magic type casting that happens. I think it’s important for people to understand the fundamentals of typing, even in a language like JavaScript, where it’s magically typed behind the scenes. But yeah, it can get you in a lot of trouble.
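The equality and casting pitfalls mentioned above come from JavaScript’s loose equality (`==`), which converts operands before comparing; strict equality (`===`) does not. A short TypeScript illustration; the parameters are typed `unknown` only so the compiler accepts comparisons it would otherwise reject as having no type overlap, and the case list is illustrative, not exhaustive.

```typescript
// Loose equality applies JavaScript's coercion rules before comparing.
function looseEquals(a: unknown, b: unknown): boolean {
  return a == b;
}

// Strict equality compares without converting either operand.
function strictEquals(a: unknown, b: unknown): boolean {
  return a === b;
}

// Each pair below is loosely equal but not strictly equal.
const cases: Array<[unknown, unknown]> = [
  ["0", 0], // the string "0" is converted to the number 0
  ["", 0], // the empty string is converted to 0
  [null, undefined], // null and undefined are loosely equal to each other
  [[], false], // [] becomes "" then 0, and false becomes 0
];

for (const [a, b] of cases) {
  console.log(JSON.stringify(a), JSON.stringify(b), looseEquals(a, b), strictEquals(a, b));
}
```

Many teams also enable ESLint’s `eqeqeq` rule so that loose comparisons are flagged during linting.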

Priyanka Raghavan 00:09:35 There’s also a question I sort of have to ask, leading up from this. You talked about JavaScript being something that, looking at your data, produces a lot of bugs. Can I extend this question and ask whether a certain type of architecture causes more issues? For example, if you’re writing some IoT software, maybe it’s not as susceptible to certain bugs as web-based development. Do you see things like that? Is there some sort of analysis that really helps, so that when you start a project you already know that if you choose this particular architecture and this particular design pattern, you’re going to have more bugs?

James Smith 00:10:24 Yeah. I don’t have any data on this, but from my experience I can say that the smaller the scope of your project, and the better the contracts between your project and other projects, the less likely it is to have complicated, confusing bugs. This has really been highlighted by the rise of microservices architectures. You’re saying this app does one thing, it owns this data, and it does this one thing really, really well. When you define the one thing a service does, you can anticipate the problems that could arise a lot more easily, because you’re not trying to map a whole complex state machine in your head. I’m oversimplifying, but the more you go toward microservice land, with contracts between services and applications, the more it forces you to document things, think about the relationships between these applications, and think about the errors that are going to occur.
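One way to write down a service contract, including the errors it can return, is to put the failure cases into the return type so callers cannot ignore them. A minimal sketch, assuming a hypothetical inventory service; the `Result` type, the error codes, and the in-memory data are illustrative, not part of any particular framework.

```typescript
// A discriminated union: callers must check `ok` before they can read `value`.
type Result<T, E> = { ok: true; value: T } | { ok: false; error: E };

// Hypothetical error cases that the contract promises to report.
type StockError = "UNKNOWN_SKU" | "SERVICE_UNAVAILABLE";

// Hypothetical in-memory data standing in for the data this service owns.
const stockBySku = new Map<string, number>([
  ["sku-123", 4],
  ["sku-456", 0],
]);

function getStockLevel(sku: string, serviceUp = true): Result<number, StockError> {
  if (!serviceUp) return { ok: false, error: "SERVICE_UNAVAILABLE" };
  const level = stockBySku.get(sku);
  if (level === undefined) return { ok: false, error: "UNKNOWN_SKU" };
  return { ok: true, value: level };
}

function describeStock(sku: string): string {
  const result = getStockLevel(sku);
  if (result.ok) {
    return `${sku}: ${result.value} in stock`;
  }
  // Past this point the compiler knows `result` is the error variant.
  return result.error === "UNKNOWN_SKU"
    ? `${sku}: no such product`
    : `${sku}: stock level unavailable, try again later`;
}

console.log(describeStock("sku-123")); // sku-123: 4 in stock
console.log(describeStock("sku-999")); // sku-999: no such product
```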

James Smith 00:11:21 Errors like that could cause the contract with other services to break down. On the contrary, I think interactive user interfaces tend to be the most likely to have a lot of bugs, because you’re building something that people will interact with in a lot of different ways, maybe in ways you don’t anticipate. There’s also a ton of asynchronous code running. Most of your code in a UI application, whether a web app, desktop app, or mobile app, is running in callbacks. You’re waiting for someone to interact with your application, and callbacks can get gnarly. Maybe you were expecting some data to be present that isn’t present yet at the time someone clicked a button. JavaScript’s really funny, because if there’s an exception in a callback, it doesn’t kill execution for the rest of the application. It just causes that callback to fail. So from your customer’s perspective, the whole application keeps working, but your callback, say your click handler, might break. I’ve seen this on news websites: I try to click through to the next article and I’m like, why is nothing happening? So, from what I’ve seen, it’s about well-defined concepts of ownership.
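The callback behavior described above can be sketched with a toy click dispatcher that mimics how browsers isolate event listeners: an exception in one listener does not stop the others, so the page keeps working while one handler quietly fails. Everything here is hypothetical (`ToyButton`, `reportToMonitoring`, the article data); in a real browser, an uncaught listener error surfaces through the window `error` event instead.

```typescript
interface Article {
  url: string;
}

type ClickListener = () => void;

// Hypothetical stand-in for an error monitoring client.
const reportedErrors: string[] = [];
function reportToMonitoring(err: unknown): void {
  reportedErrors.push(err instanceof Error ? err.name : String(err));
}

// Toy dispatcher: an exception in one listener is reported and the rest still run.
class ToyButton {
  private listeners: ClickListener[] = [];

  onClick(listener: ClickListener): void {
    this.listeners.push(listener);
  }

  click(): void {
    for (const listener of this.listeners) {
      try {
        listener();
      } catch (err) {
        reportToMonitoring(err);
      }
    }
  }
}

// Filled in by an asynchronous load; undefined until that load completes.
let nextArticle: Article | undefined = undefined;
function finishLoading(article: Article): void {
  nextArticle = article;
}

const visited: string[] = [];
const nextButton = new ToyButton();

// Buggy handler: assumes the data is already there (`!` silences the compiler).
nextButton.onClick(() => {
  visited.push(nextArticle!.url);
});

// Guarded handler: handles the "not loaded yet" case explicitly.
nextButton.onClick(() => {
  if (nextArticle === undefined) {
    visited.push("(still loading)");
    return;
  }
  visited.push(nextArticle.url);
});

nextButton.click(); // the first handler throws a TypeError; the second still runs
finishLoading({ url: "/articles/2" });
nextButton.click(); // both handlers succeed now

// visited now holds "(still loading)", "/articles/2", "/articles/2"
console.log(visited, reportedErrors);
```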

Priyanka Raghavan 00:12:38 Yeah. So good design really needs a good bounded context, and that way you can reduce bugs. That makes a lot of sense. Also, in UI development, there are a lot of third-party, off-the-shelf components that we use, right? Any of the new JavaScript frameworks, and we have TypeScript. And of course we also have the different frontend frameworks like Angular and Vue, with a lot of third-party libraries. So what happens with bugs coming from third-party libraries? Oftentimes, of course, you check for vulnerabilities in terms of security, but how do you handle those kinds of bugs in the third party you’re depending on?

James Smith 00:13:26 It’s difficult. The good news is that most people are using open source third-party libraries these days. When I started my career, that wasn’t the case: you paid Oracle for some SDK and you’d never be able to see the source code. These days, most providers are making their third-party code open source. It’s annoying, because you shouldn’t have to deal with bugs in third-party code, but the reality is you will. And if it’s open source, at least you have the ability to dig into it. If you’re using an error reporting and error monitoring solution like Bugsnag, it will show you the stack trace. It will show you the line of code that caused the crash, and all of the other code paths that customer went through up until the point of the crash. So in that case you can see, hang on a second, this went through this weird file in React that I need to dig into. Or if the top of the stack trace was in React, you’re like, wait a minute, it should not be crashing inside of the React library. But to avoid that, I think you shouldn’t live life on the bleeding edge. It’s very exciting to get the hot new features of your library, but don’t immediately bump your dependencies as soon as some beta is out for a new version of React or whatever it is. I also think that the selection of third-party libraries is a really underrated part of software development. Sometimes people are like, I need, what’s a good example, MD5. I need to generate an MD5 hash, so I’m just going to go on npm and search for one, and maybe I’ll pick this one because it uses the new promise interface and that’s really cool. Well, is it maintained?
How many stars does it have on GitHub? Are pull requests, comments, and issues being addressed on GitHub? I think people jump to, oh, someone’s already written this code, let me use it, just like some developers jump to Stack Overflow and grab a code snippet. But in reality, if you’re going to be relying on something, you need to really trust it. So doing that research on third-party libraries is super critical and very underrated. My co-founder says this all the time: he looks for a safe pair of hands. In third-party SDK land, a safe pair of hands might mean the one that’s been around for three or four years, rather than the one that’s just been launched and is growing very quickly. We can refactor to use that other library in the future if it becomes more stable, but right now, who knows if it’s even going to exist in a year’s time. So I agree with that approach as well.
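The library-selection questions above can be turned into a simple checklist. A rough sketch, assuming you have already gathered the metadata by hand from the package registry and the repository; the `LibraryInfo` fields, the package names, and every threshold except the roughly three-year age mentioned above are hypothetical.

```typescript
// Hypothetical metadata gathered by hand; these field names are not a real registry API.
interface LibraryInfo {
  packageName: string;
  latestVersion: string;
  firstReleaseYear: number;
  daysSinceLastCommit: number;
  openIssuesWithoutReply: number;
}

// In Semantic Versioning, a hyphen after MAJOR.MINOR.PATCH marks a pre-release.
function isPrerelease(version: string): boolean {
  return /^\d+\.\d+\.\d+-/.test(version);
}

// Returns the reasons to hesitate; an empty list means no red flags were found.
function selectionConcerns(lib: LibraryInfo, currentYear: number): string[] {
  const concerns: string[] = [];
  if (isPrerelease(lib.latestVersion)) concerns.push("latest release is a pre-release");
  if (currentYear - lib.firstReleaseYear < 3) concerns.push("around for less than three years");
  // The two thresholds below are arbitrary examples.
  if (lib.daysSinceLastCommit > 180) concerns.push("no commits in six months");
  if (lib.openIssuesWithoutReply > 20) concerns.push("many issues without a maintainer reply");
  return concerns;
}

// Two hypothetical candidates for the MD5 example.
const establishedLib: LibraryInfo = {
  packageName: "hypothetical-md5",
  latestVersion: "2.3.1",
  firstReleaseYear: 2016,
  daysSinceLastCommit: 40,
  openIssuesWithoutReply: 2,
};
const shinyLib: LibraryInfo = {
  packageName: "hypothetical-md5-promise",
  latestVersion: "0.3.0-beta.1",
  firstReleaseYear: 2020,
  daysSinceLastCommit: 5,
  openIssuesWithoutReply: 0,
};

console.log(establishedLib.packageName, selectionConcerns(establishedLib, 2021));
console.log(shinyLib.packageName, selectionConcerns(shinyLib, 2021));
```

A checklist like this only prompts the research; it does not replace reading the code and the issue tracker.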

Priyanka Raghavan 00:16:11 I think what you’ve said has really hit the nail on the head. In fact, I also tend to just jump in and pick up the very first library, so I think that really is quite underrated, as you say. So now we’ve looked at the major causes of bugs, and we’ve compartmentalized them: we’ve got third-party bugs, errors caused by somebody being new to a particular language, and bugs caused by an unrealistic deadline because we just want to get straight out into the market. So we’ve talked about a whole class of bugs. Let’s switch gears a bit, because I found a really interesting article on your blog about the worst bugs in history. We’ll have a link in our show notes for our listeners. Would you be able to give us an example of something you’ve researched where you thought, oh my God, I really need to talk about this on the show? Is there an example that just pops up?

James Smith 00:17:18 Oh, the worst one in my mind is the Therac-25 disaster. I did a math and computer science degree, and one of the computer science courses I took was safety-critical systems. They don’t start off by talking about how to build safety-critical designs. They start off by telling horror stories, and this was one of them. The Therac-25 was a radiation therapy machine designed to treat cancer patients, so it was designed to help people. Obviously it wasn’t just a piece of machinery; it had software in it. For whatever reason, there weren’t enough safety features in the software, and it led to three people, unfortunately, dying. So a software bug actually caused people to die. It’s kind of terrifying, because they were thinking about safety criticality while building the software, but this was in the eighties, and back then this wasn’t a well understood space. They probably weren’t teaching safety-critical systems in colleges in the eighties. It’s a really weird bug as well, because it wasn’t a pure software bug. It was a multi-layered bug. They rolled out a new version of the machine, and as far as I understand it, in the new version they removed a hardware safety feature, with the intention that it would be replaced by a software safety feature. So it’s not that there was a crash, or a line of code with the wrong variable in it. It was a design bug in the gap between the hardware team’s and the software team’s understanding of how this should work. Obviously this led to the tragedy of people dying, and the machines were recalled by the FDA. But looking at the other side of things, it has led to a lot of changes in the way we develop software.
Maybe I wouldn’t have had a safety-critical course at college if it wasn’t for situations like this. After college, I actually ended up working as a software consultant, and one of the projects I worked on was a high-volume medical pump for patients who had AIDS. It delivered high-volume doses of fluid, and it’s incredibly dangerous to get that wrong: if you deliver too much of the drug, it’s potentially over for those patients. The system I was working on was a small box, about the size of a lunchbox.

James Smith 00:20:11 It had three CPUs. One of them ran the UI, which is the one I was working on as a graduate software engineer. The worst case there was that the UI could show something wrong, which is still really bad: if it says this much fluid has been delivered but under the hood less or more had been delivered, that’s a mismatch. But the other two CPUs had different layers of safety criticality, and the super graybeard experts, men and women, were working on the most safety-critical CPU. We had to go through multiple audits, both independent and external. So there are so many layers of safety these days. As a graduate software engineer who had only ever worked on web projects before, I was like, wow, it’s very slow to do anything on this; we have to send everything out for review. And then you read about things like the Therac-25 and you’re like, okay, this is why we do this. It’s very important to get it right. So, yeah, it’s a horrible story, but I feel like there was no malice. It wasn’t someone trying to do anything wrong. It was just a design problem, and I think lots of safety-critical systems have learned from that experience since then.
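The multi-CPU design described above follows a common safety principle: no single component should be the only thing standing between a fault and the patient. A heavily simplified sketch of a cross-check between two independent channels, assuming hypothetical readings and an illustrative tolerance; it is not how that pump or the Therac-25 actually worked, and real safety-critical code is developed and audited to far stricter standards.

```typescript
// Hypothetical readings from two independent channels: what the controller
// commanded, and what a separate sensor measured.
interface DeliveryReadings {
  commandedMl: number;
  measuredMl: number;
}

type PumpAction = "CONTINUE" | "HALT_AND_ALARM";

// Illustrative tolerance only; real limits come from the device's safety analysis.
const TOLERANCE_ML = 0.5;

function crossCheck(readings: DeliveryReadings, prescribedMaxMl: number): PumpAction {
  const { commandedMl, measuredMl } = readings;
  // Fail safe on corrupt data: NaN or Infinity never counts as "close enough".
  if (!Number.isFinite(commandedMl) || !Number.isFinite(measuredMl)) {
    return "HALT_AND_ALARM";
  }
  // The two channels must agree; neither one is trusted on its own.
  if (Math.abs(commandedMl - measuredMl) > TOLERANCE_ML) {
    return "HALT_AND_ALARM";
  }
  // Either channel exceeding the prescribed amount also stops delivery.
  if (Math.max(commandedMl, measuredMl) > prescribedMaxMl) {
    return "HALT_AND_ALARM";
  }
  return "CONTINUE";
}

console.log(crossCheck({ commandedMl: 10, measuredMl: 10.2 }, 50)); // CONTINUE
console.log(crossCheck({ commandedMl: 10, measuredMl: 12 }, 50)); // HALT_AND_ALARM
```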

Priyanka Raghavan 00:21:24 Right. And design, of course, plays a really important part, and so do reviews, which you brought up. I think reviews are probably underrated in reducing bugs as well. I guess we can switch gears to something a little lighter after that example. For our listeners in the microservices world, which is where we all are right now, it would be interesting to talk about the Facebook API bug that you’ve written about. Could you tell us how that unfolded and how many clients it affected? If I remember right, it was something simple, like the return type from the endpoint.

James Smith 00:22:05 Exactly right. Okay, this is one of those things. I understand that my company would not exist if bugs didn’t happen. Bugs happen, and it’s important to deal with them. I’m not sure if everyone’s familiar with this, but if you are a mobile developer, you probably know about it: a few months ago, pretty much every application that used the Facebook SDK, which is most consumer mobile applications, started crashing. Some of them crashed on boot, which meant that when you went to open your Spotify application, you couldn’t open it. It would just crash immediately on boot. This was on iOS. You asked earlier about third-party SDKs crashing, and this is exactly what happened to anyone who had embedded the Facebook SDK. The Facebook SDK powers things like Facebook authentication, but it also powers things like partner data sharing.

James Smith 00:23:06 For example, seeing which friends are listening to certain tracks, and similar features in various pieces of software. Anyway, the Facebook SDK goes off and fetches data from Facebook’s API. It gets it in JSON format, the JSON payload comes back to the SDK, and then some Cocoa iOS code unpacks that JSON and tries to do something with it. It’s a pretty common workflow; probably everyone listening has done something like it. Now, obviously, if the data that comes back is not in the format you expect, you should protect against that. In fact, that’s exactly what you asked about in your first question, Priyanka: one of the common bugs is not protecting against unexpected situations. And with this Facebook SDK, what looks like happened is that, either intentionally or unintentionally, the team behind the API that returned the JSON payload changed the structure of the data in that payload.

James Smith 00:24:04 And okay, fine, that happens. If you change the structure of the data without supporting the new format, that’s going to cause a bug. But in this case, it wasn’t just a bug where some information was missing. It caused a crash. The Cocoa code was expecting to dig into a particular variable with a particular structure. It was doing operations on a string that it expected to be an array or a list, I think that was the way round. So unprotected access of the wrong data type caused that whole piece of code to crash, and in a Cocoa application, as in most applications, that then crashed the entire application, because it was an unhandled exception that bubbled out of the SDK and was not caught by the application code. A lot of the time, bugs are introduced when you change code.
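The Facebook SDK was native iOS code, but the same failure mode is easy to reproduce in TypeScript. A minimal sketch, assuming a hypothetical payload whose `friends` field is expected to be an array: the naive parser throws a `TypeError` when the field arrives as a string, while the defensive one validates each level of the parsed JSON and returns an empty list instead of crashing its caller.

```typescript
interface Friend {
  id: string;
}

// Naive: trusts the payload's shape. If `friends` arrives as a string instead
// of an array, `.map` is undefined and calling it throws a TypeError.
function friendIdsNaive(json: string): string[] {
  const payload = JSON.parse(json);
  return payload.friends.map((f: Friend) => f.id);
}

// Defensive: treats the parsed payload as `unknown` and checks each level
// before using it, so an unexpected shape degrades to an empty result.
function friendIdsSafe(json: string): string[] {
  let payload: unknown;
  try {
    payload = JSON.parse(json);
  } catch {
    return []; // not valid JSON at all
  }
  if (typeof payload !== "object" || payload === null) return [];
  const friends = (payload as { friends?: unknown }).friends;
  if (!Array.isArray(friends)) return [];
  return friends
    .filter(
      (f): f is Friend =>
        typeof f === "object" && f !== null && typeof (f as { id?: unknown }).id === "string",
    )
    .map((f) => f.id);
}

const expectedShape = '{"friends":[{"id":"a"},{"id":"b"}]}';
const changedShape = '{"friends":"a,b"}'; // the API now returns a string

console.log(friendIdsSafe(expectedShape)); // [ 'a', 'b' ]
console.log(friendIdsSafe(changedShape)); // []
```

Returning an empty list is only one choice; depending on the feature, reporting the unexpected shape as a handled error may be more useful than silently degrading.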

James Smith 00:24:56 So if you roll out a new release or a new version, you’re going to be watching very carefully to see whether new crashes are introduced. But if you are Spotify, and it’s three in the morning, and Facebook pushes out a change to that API, you do not expect a hundred percent of your sessions to fail on iOS, which is what happened. So it’s a nightmare situation. The impact was huge: most major consumer mobile applications on iOS had blips of outages for a number of hours. The impact was also kind of insane for a product like Bugsnag. Our product detects bugs, crashes, and exceptions in people’s software, and then gives you the data you need to figure out what’s going on and how to fix it effectively.

James Smith 00:25:46 We have a lot of customers in the mobile space, especially consumer mobile, and we got tens, even hundreds of millions of exceptions reported to our servers. Our servers and systems auto-scale, so it wasn’t a problem, but suddenly my infrastructure team saw this big spike of consumer iOS applications sending exceptions. In fact, one of the leading error monitoring providers for mobile, Google’s offering, could not cope with the volume and basically shut down their servers for a while, or at least stopped processing a lot of the data. Effectively, it’s like getting a denial of service: you’ve got all these clients around the world sending in data at a much higher volume than you expect.
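A spike like this, where millions of devices hit the same crash at once, is one reason error-reporting clients often cap or deduplicate what they send. A minimal sketch of a per-error-key rate limit over a fixed time window, assuming a hypothetical key format and limits; it illustrates the idea and is not how Bugsnag’s or any other vendor’s SDK is implemented.

```typescript
// Caps how many reports with the same key are sent per fixed time window, so a
// crash that fires on every app launch cannot flood the collection servers.
class ReportThrottle {
  private readonly maxPerWindow: number;
  private readonly windowMs: number;
  private readonly windows = new Map<string, { start: number; sent: number }>();

  constructor(maxPerWindow: number, windowMs: number) {
    this.maxPerWindow = maxPerWindow;
    this.windowMs = windowMs;
  }

  // Returns true if a report for `key` may be sent at time `nowMs`.
  shouldSend(key: string, nowMs: number): boolean {
    const current = this.windows.get(key);
    if (current === undefined || nowMs - current.start >= this.windowMs) {
      // First report for this key, or the previous window has expired.
      this.windows.set(key, { start: nowMs, sent: 1 });
      return true;
    }
    if (current.sent < this.maxPerWindow) {
      current.sent += 1;
      return true;
    }
    return false; // over the cap: drop this report
  }
}

// Hypothetical usage: at most 2 reports per key per second.
const throttle = new ReportThrottle(2, 1000);
const decisions = [0, 10, 20, 1000].map((t) => throttle.shouldSend("TypeError:FBSDKParse", t));
console.log(decisions); // [ true, true, false, true ]
```

A production client would more likely count the dropped reports and send the total later, so the dashboard still shows the true volume.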

James Smith 00:26:36 So that was bad, but obviously bugs happen. What we say a lot at Bugsnag is: don’t anger the ops gods. If a competitor or someone you know in software goes down, never gloat about it. Never, ever gloat and say, ooh, I’m glad it wasn’t us, because if you anger the ops gods, it will come back to you next week. But the thing that really bugs me about this is that a few weeks later, the exact same bug happened. They changed the data format again, and the exact same bug happened. So look: make a mistake, learn from the mistake. I think Facebook’s motto used to be “move fast and break things,” and that’s kind of fine, but learn from these mistakes. The second time it happened, was there no change control? Who allowed that API change to happen? That’s the thing I think annoyed a lot of developers, because they didn’t even have time between the first incident and the second to put in more protective code paths to stop SDKs from blowing up their apps.

Priyanka Raghavan 00:27:52 Right. It also makes me wonder about things like API stability, where there are usually rules, right? I guess there’s usually a notice period where you tell your clients, we’re changing something that’s going to really break things.

James Smith 00:28:06 I’m sure it must have been unintentional. I think it was a private API, which maybe they were less careful about. What’s interesting, and again, you never know what goes on inside a company during an outage, is that our customers knew about this outage seemingly hours, or at least 20 minutes, before Facebook did. One of the things our service does is tell you what percentage of users and what percentage of application sessions are healthy. So when you’re at 99% healthy and suddenly go to 0% healthy, it’s very straightforward to see that in a product like Bugsnag. People went to the GitHub issues for the Facebook SDK and started saying, hey, this is down, and engineers were responding with, oh, really? Let me look into this for you. Who knows what was happening; maybe they were scrambling to get it fixed. They probably were, and they probably have really good monitoring in place. But one time, okay. A second time?
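The “percentage of healthy sessions” signal comes down to simple arithmetic: stability is the share of sessions that ended without an unhandled crash, and an alert fires when it drops sharply between two windows. A sketch, assuming hypothetical session counts and an illustrative five-point drop threshold.

```typescript
// Hypothetical session counts for one time window.
interface SessionCounts {
  total: number;
  crashed: number; // sessions that ended in an unhandled crash
}

// Percentage of sessions without an unhandled crash; undefined when there is no data.
function stabilityPercent(counts: SessionCounts): number | undefined {
  if (counts.total === 0) return undefined;
  return (100 * (counts.total - counts.crashed)) / counts.total;
}

// True when stability fell by more than `maxDropPoints` percentage points.
function stabilityDropped(previous: SessionCounts, current: SessionCounts, maxDropPoints = 5): boolean {
  const before = stabilityPercent(previous);
  const after = stabilityPercent(current);
  if (before === undefined || after === undefined) return false;
  return before - after > maxDropPoints;
}

const lastHour: SessionCounts = { total: 1000, crashed: 10 };
const thisHour: SessionCounts = { total: 1000, crashed: 1000 };
console.log(stabilityPercent(lastHour), stabilityPercent(thisHour)); // 99 0
console.log(stabilityDropped(lastHour, thisHour)); // true
```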

Priyanka Raghavan 00:29:15 Yeah. Right. And I guess it also shows how important it is for teams to protect themselves against their third-party SDKs. Like you say, that’s one area where you really need to focus on how your application reacts.

Speaker 0 00:29:34 Become an Autonomous Digital Enterprise, and your business can achieve A-Game status: intelligent, predictive systems, effortless automation, and technology and people working in harmony. This is the Autonomous Digital Enterprise. Visit bmc.com/agame for more information.

Priyanka Raghavan 00:29:58 Okay. So we’ve had a look at how bad bugs can be when they happen. You’ve got the really serious ones you talked about, which can be life-threatening, and then you’ve got the day-to-day ones, like your favorite app not opening because of a crash, as we just discussed. Now I’d like to move logically into another area of software quality. There was a show on SE Radio in 2017, I think it was episode 280, with Gerald Weinberg, who wrote a book, I think, called Errors: Bugs, Boo-boos, Blunders. One thing that really struck me as a listener was an anecdote he gave about being at an auto show.

Priyanka Raghavan 00:30:51 So it was an auto show, and there was a new version of a car that he was looking at. It seemed great when he was looking at the brochures, but it was only when he got into the car and started driving it that he really got a feel for it and found things out about it. And the analogy was the same with software: you only really know the major issues in your software when it’s in the hands of a real user. So I want to know, are there classes of bugs that can only be found by actual users in the field?

James Smith 00:31:25 Yeah. I kind of touched on this earlier with the fragmentation of devices. That’s one that is particularly painful. I like to think of the phases of bug remediation as before the software is in the hands of your customers and after, and there used to be a big phase in between, which was human-powered QA. When I started my career, huge QA teams would spend sometimes months going through a QA script and QA-ing your software. But the more we’ve moved to lean, agile, rapid iteration, being able to hotfix and patch things and componentizing our software, the faster we can ship. And so that doesn’t work anymore. You cannot have a team of humans do two months of QA, right?

James Smith 00:32:13 So on the left-hand side of software development, that’s been replaced with what I think Capital One calls quality engineering, which I really love as a term. It’s basically saying, look, let’s not have people going through Word document scripts, clicking around the application and typing in weird characters; let’s automate that as much as possible. And on the right-hand side, you have data-driven instrumentation, with products like Bugsnag, that tells you: yes, this is a problem, this is how many customers are affected, and this is how you go and fix it. But what’s funny is, as I said earlier, if you could get perfect vision from the left-hand side and get everything fixed, our company wouldn’t exist. So thankfully, bugs exist in the hands of customers.

James Smith 00:32:56 But I think the ones that end up happening in the hands of customers tend to be where the data representing that user has got into a strange state. Typically, when you think about pre-production and pre-customer testing, you’ve got unit tests and integration tests, and you’ve got these clean-room environments. You start with the memory of the application completely blank, then you start up the application, then you click around and do X, Y, and Z. In reality, customers are running in quite a dirty environment, because you need to do things like save state, cache things, and authenticate the customer. So most of the time you don’t start off in the clean-room environment that you have in integration and unit tests.

James Smith 00:33:48 So most of the bugs I see are not necessarily due to code paths being missed, because in theory you should have good code coverage in your testing, right? It’s about weird data structures and unclean data coming through. At one of my previous companies, we had a bug caused by a corrupted cookie format. In a migration between two types of authentication token, there was a bug in the migration, and it caused a large percentage of customers to end up with a bad authentication token. When the application tried to read in that data, it would just blow up, because it was expecting the token in a particular format and it was corrupted. You’re not going to think about that in advance. That’s a really good example of bad data, and cached data that has got into a weird state. But I’d also add anything where you’re migrating data, because it’s the same problem: when you’re running your tests, you expect the inputs to look a certain way, but if the inputs look different, hopefully your migration will fail cleanly.

James Smith 00:35:05 But if it doesn’t, it’s going to migrate bad data into worse data. I’ve said the word data about a million times there, but I think that’s what causes the problems that happen in the hands of your customers: you’re not operating in that clean-room environment, and there’s caching and authentication and cookies and local storage and all sorts of stuff stored on the device, probably not in the format you expect. There are other things as well, like the device fragmentation I mentioned. One of the funniest examples of that: I forget which vendor it was, so I won’t name and shame, but it was an Android phone manufacturer. One thing a lot of people don’t know about Android is that, yes, there’s this beautiful AOSP, the Android Open Source Project, but every single vendor forks it and messes around with it.

James Smith 00:35:55 You see that in the user interfaces, but the forking and messing around with the code actually happens at much lower levels. A major mobile phone vendor, in their modification of the Android Open Source Project, modified JSON parsing. We’re back to JSON again, just because everyone’s using it as an interchange format. In Java you’ve got a JSON utility class, or whatever it is, for reading and writing JSON, and they modified that. So if your test environments expected JSON to be deserialized in a particular way, in one rare case it would not deserialize data the way every other Android device in the world would. Maybe you got lucky: let’s say it was HTC, and maybe you had an HTC phone and would have caught it, or you ran a device farm and tested on it. But if not, you have to wait for your customers to tell you, because it only happened in that particular environment. So yeah, it’s the Wild West, especially on the client side. You don’t know what’s happening with the environments. You do not control the environment.
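James’s point that a data migration should fail cleanly when its inputs look different can be sketched as follows. This is a minimal Python illustration under stated assumptions: the legacy token format (`<user_id>:<hex secret>`) and the new JSON format are hypothetical, not the ones from his story. Malformed inputs are validated up front and set aside with a reason, rather than being migrated into worse data.

```python
import json
import string
from dataclasses import dataclass, field


@dataclass
class MigrationResult:
    migrated: dict = field(default_factory=dict)  # session id -> new-format token
    rejected: dict = field(default_factory=dict)  # session id -> reason for rejection


def parse_legacy_token(raw: str) -> tuple:
    """Parse a hypothetical legacy token of the form '<user_id>:<hex secret>'."""
    user_id, sep, secret = raw.partition(":")
    if not sep or not user_id.isdecimal():
        raise ValueError("missing or non-numeric user id")
    if not secret or any(c not in string.hexdigits for c in secret):
        raise ValueError("secret is not hexadecimal")
    return user_id, secret


def migrate_tokens(legacy: dict) -> MigrationResult:
    """Convert legacy tokens to a hypothetical JSON format, failing cleanly.

    Anything that does not parse is recorded and skipped, so downstream code
    never reads a half-converted, corrupted token.
    """
    result = MigrationResult()
    for session_id, raw in legacy.items():
        try:
            user_id, secret = parse_legacy_token(raw)
        except ValueError as exc:
            result.rejected[session_id] = str(exc)
            continue
        result.migrated[session_id] = json.dumps(
            {"version": 2, "user_id": user_id, "secret": secret}
        )
    return result


result = migrate_tokens({"s1": "42:deadbeef", "s2": "42:not-hex", "s3": "corrupted"})
```

Reporting the rejected entries, rather than dropping them silently, also tells you how many users the bad data affected.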

Priyanka Raghavan 00:37:06 Right. You can’t really control it. But that also brings me to a question, and I just want your thoughts on it, not to put you on the spot: is it okay to delegate fighting bugs to our clients, who paid money for the software?

James Smith 00:37:25 I don’t think it’s okay. There’s a phrase people use a lot that I really hate, which is testing in production. I don’t know if you’ve heard people talking about embracing testing in production. I think that’s the wrong way of thinking about things. I think you have to be very intentional about the trade-offs that mean bugs may happen: accept that sometimes bugs are going to happen, but use tooling and technology to remediate them as quickly as possible. I mentioned QA earlier; when I started my career, the QA team at one of the companies I worked at would go through scripts and do things that would fail the QA scripts, like a username that was 20,000 characters long, or something ridiculous.

James Smith 00:38:19 And these days I’m like, who cares? If a customer is typing in a 20,000-character username, are they really a customer we care about that much? It doesn’t really matter. It’s such a brutal way of describing it, but QA ranking is mostly, maybe, a P1, P2, P3 priority system, and it’s very blunt in how it categorizes and prioritizes bugs. If you are measuring, or at the bare minimum aggregating bugs by root cause, then you can at least say this bug is affecting a hundred people, and, oh, we’ve got to fix that straight away. So I see this as a way to allocate your resources more effectively. If you’ve got a small engineering team, use that information to get in quickly and fix that bug. And which bugs matter the most varies by company.

James Smith 00:39:12 I’ve given a really basic example, based on how many times a bug has happened, but the way we’ve built Bugsnag, you can attach metadata and diagnostic data to any crash report. Some key use cases for that are: show me bugs that are affecting key customers, people who are spending more than a million dollars a year with us, if you’re a big B2B company; or show me bugs that are happening in a really important flow, like login or payment flows. Because if you have scarce resources, like every engineering and development team does, you probably want to fix the bugs that matter the most first. And if people are typing 20,000-character usernames into a settings page, you can leave that one. You can put it in the backlog in JIRA for a while.
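Aggregating bugs by root cause and then prioritizing them with attached metadata can be sketched in a few lines of Python. This is not how Bugsnag groups errors; it assumes a deliberately simple root-cause key (error class plus top stack frame) and hypothetical metadata fields (`flow`, `annual_spend`) standing in for the login/payments flows and million-dollar customers James mentions.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class ErrorEvent:
    error_class: str
    top_frame: str  # e.g. "checkout.py:submit_payment"
    user_id: str
    metadata: dict = field(default_factory=dict)  # diagnostic data attached to the report


def fingerprint(event: ErrorEvent) -> tuple:
    """Approximate the root cause as error class plus top stack frame."""
    return (event.error_class, event.top_frame)


def rank_bugs(events, critical_flows=frozenset({"login", "payments"}), key_spend=1_000_000):
    """Group events by root cause and order the groups by business impact.

    Most important first: touches a critical flow, then number of key
    customers affected, then total distinct users affected.
    """
    groups = defaultdict(lambda: {"users": set(), "key_users": set(), "critical": False})
    for event in events:
        group = groups[fingerprint(event)]
        group["users"].add(event.user_id)
        if event.metadata.get("annual_spend", 0) >= key_spend:
            group["key_users"].add(event.user_id)
        if event.metadata.get("flow") in critical_flows:
            group["critical"] = True
    ranked = sorted(
        groups.items(),
        key=lambda item: (item[1]["critical"], len(item[1]["key_users"]), len(item[1]["users"])),
        reverse=True,
    )
    return [(key, len(group["users"])) for key, group in ranked]


events = [
    ErrorEvent("ValueError", "settings.py:save_username", "u1", {"flow": "settings"}),
    ErrorEvent("ValueError", "settings.py:save_username", "u2", {"flow": "settings"}),
    ErrorEvent("ValueError", "settings.py:save_username", "u2", {"flow": "settings"}),
    ErrorEvent("KeyError", "checkout.py:submit_payment", "u3",
               {"flow": "payments", "annual_spend": 2_000_000}),
]
ranking = rank_bugs(events)
```

The ordering is a design choice: a consumer app, as James notes next, might sort by distinct affected users first instead.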

Priyanka Raghavan 00:40:00 Okay, interesting. Yeah, that makes perfect sense. You would rather focus your energy on the big customers as well: the big clients, the big tickets.

James Smith 00:40:13 Or the highest pain as well. If you’re a consumer mobile application where people aren’t spending a lot of money, you probably want to focus your time on the bugs affecting the highest volume of customers. Whatever metric you use in your software, just be thoughtful about it and use data to drive it. Then I think you can deliver software that’s just as stable as if you’d done a two-month QA process, and in fact improve it faster and get features to market faster, right?

Priyanka Raghavan 00:40:42 Okay. I’d like to revisit that a little later in the show. But I have a question about something you briefly touched on: the resources that apps use, for example mobile apps. We had an episode on mobile usability a few weeks back with Matt Lacey, and he talked about six principles of usability. One of them was resources and how apps use them. He said certain apps actually lose out because of the way they manage resources: for example, you’re using an app and your phone really heats up, or your battery discharges like crazy. I’m just curious, do you have any examples where apps that weren’t good at managing their resources on the phone actually lost market share, and people just started uninstalling them and saying, “I’m not going to use this”?

James Smith 00:41:50 There’s a classic example of this, a class of examples, I think. But before I go into that, there’s something interesting in the web services world. People talk about reliability, and you’ll hear job titles like site reliability engineer. I love the Google SRE book; I actually think it’s very well written. If you look at the typical definition of reliability, it’s three layers, and I think of it as a pyramid: availability, meaning are my servers and services even running; stability, which is mainly what Bugsnag focuses on; and then performance on the top. If any of these are not working correctly and not hitting the SLAs you’ve defined, you’re going to deliver a poor customer experience.

James Smith 00:42:42 The one you’re talking about is mostly on the performance side of things. But a lot of the time, these performance issues do end up as stability issues. So here’s a really classic example, and there are plenty of great third-party open source libraries now to help you avoid this mistake. When I started my mobile development career, when mobile development, on smartphones at least, was brand new, almost every app being built that had content in it used the infinite scroll model. You’d load some data from an API, show it on the screen, and keep loading more as the user kept scrolling. Imagine Instagram: you’re scrolling through pictures on Instagram. One of the classic problems in these infinite-scroll environments was that you were great at pulling in data from the API.

James Smith 00:43:32 You were great at allocating memory for it, or maybe Java did that for you automatically. You were great at rendering it on the screen. But what happens when it goes off the screen? There were so many apps in early mobile development that didn’t think about the resource allocation of off-screen items. This is true of web apps as well, with things like React: you want to make sure you’re only rendering things that are in the viewport. But on mobile, if you’re Instagram and you’re downloading images that are half a megabyte to four megabytes in size, on a mobile device with hardly any RAM, you really needed to be good at this. Anyone who had an Android phone in 2009 or 2010 will understand exactly what I’m talking about here.

James Smith 00:44:24 Android used to feel like it was running at three frames per second, like you were in a stop-motion movie. Partly that was because the core operating system wasn’t very performant at things like scrolling, and thankfully they fixed that. But it was also because there were no really clean patterns, libraries, or SDKs to help you manage resources once they went off screen. I’m having flashbacks a little bit, because it was so awful. It was not the buttery-smooth mobile experience you wanted. You’d have an iPhone and an Android next to each other: one was a beautiful, buttery-smooth experience, and the other was a jerky experience that ended up crashing with an out-of-memory error once all of those images were in memory.
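The fix for the infinite-scroll problem James describes is to release resources for items that scroll out of view. On Android, `RecyclerView` provides view recycling for you; the Python sketch below only illustrates the underlying idea under assumptions of its own: a hypothetical `load_image` callback and a fixed buffer of items kept on either side of the viewport. Memory stays bounded however far the user scrolls.

```python
class ImageFeed:
    """Keep image data in memory only for items near the visible viewport."""

    def __init__(self, load_image, buffer=5):
        self._load_image = load_image  # hypothetical loader: index -> image bytes
        self._buffer = buffer          # extra items kept on each side of the viewport
        self._cache = {}               # item index -> image bytes

    def on_scroll(self, first_visible, last_visible):
        lo = max(0, first_visible - self._buffer)
        hi = last_visible + self._buffer
        # Release everything that has scrolled outside the retention window.
        for index in [i for i in self._cache if i < lo or i > hi]:
            del self._cache[index]
        # Load anything inside the window that is not resident yet.
        for index in range(lo, hi + 1):
            if index not in self._cache:
                self._cache[index] = self._load_image(index)

    def resident_indices(self):
        return sorted(self._cache)


# bytes(1024) is a stand-in for a decoded image.
feed = ImageFeed(load_image=lambda i: bytes(1024), buffer=2)
# Simulate scrolling through 1,000 items, five visible at a time.
for first in range(0, 1000, 5):
    feed.on_scroll(first, first + 4)
```

Without the release step, the cache would hold all 1,000 images by the end of the scroll; with it, only the five visible items plus two on each side remain.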

Priyanka Raghavan 00:45:11 Right, right. So now that we’ve looked at all of this, we’re heading towards the final part of the show, and I want to spend some time on how we should deal with buggy software. You’ve touched on it throughout our talk, but let me try to summarize. We talked about the Google SRE book, and there’s the idea they have of an error budget: if you haven’t used up that error budget, then maybe you shouldn’t be spending so much time on all the different testing you’re doing.

Priyanka Raghavan 00:45:54 And instead work on features. So, number one, should teams actually be doing that? Second, if that’s what we choose, should we double down on our QA effort if we have an error budget? And third, do you, like you said at the beginning, just live with bugs, triage and rank them, and then fix them based on whatever criteria you’ve chosen? In your mind, what are the top couple of things that teams or companies should actually be doing?

James Smith 00:46:33 Yeah, I think the flow you’ve laid out is a really sensible framework for this. You need to measure. You then need to benchmark and have rules of engagement for what happens if you’re not hitting your SLAs; I’ll talk more about that in a second. And then you need a process, and the people need to buy into the process as well. So let’s start right back at measuring. As you said, in web services and web applications, in SRE land, error budgets, which typically means the number of 500 errors, or 5xx errors, that you’re seeing, are a really good metric. What we’ve done at Bugsnag is extend that concept to any application. We have what we call a stability score.

James Smith 00:47:16 Once you’ve installed Bugsnag, our SDK will detect any unhandled exception, signal, out-of-memory error, Android Not Responding, application freeze, whatever it is. Anything that causes your customer’s session to end as a failed session, we’ll detect. And because we’ve counted the sessions as well, we’ve got a fraction. It’s the same thing as an error budget: we can say that out of a hundred sessions, 99 were problem-free. So you get a nice percentage: 99% of my sessions were good, so my stability score is 99%. But you can’t just stop there and say, okay, great. You need to do something about it if it changes.
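The arithmetic behind a stability score, and its relationship to an error budget, can be sketched as follows. This is a hedged Python illustration, not Bugsnag’s implementation: it assumes each session has already been flagged as failed or not (by whatever crash, OOM, ANR, or freeze detection the platform provides), and `error_budget_remaining` is a hypothetical helper that expresses the same target as a count of allowed failed sessions.

```python
import math
from dataclasses import dataclass
from fractions import Fraction


@dataclass
class Session:
    session_id: str
    failed: bool  # True if an unhandled error, OOM, ANR, freeze, etc. ended the session


def stability_score(sessions) -> float:
    """Percentage of sessions that ended without a failure."""
    if not sessions:
        raise ValueError("no sessions recorded")
    healthy = sum(1 for s in sessions if not s.failed)
    return 100.0 * healthy / len(sessions)


def error_budget_remaining(sessions, target_percent: float) -> int:
    """Failed sessions still allowed by the target, in the style of an SRE error budget.

    Fraction(str(...)) keeps a target such as 99.9 exact, avoiding
    floating-point truncation. A negative result means the budget is spent.
    """
    allowed_fraction = (100 - Fraction(str(target_percent))) / 100
    allowed_failures = math.floor(len(sessions) * allowed_fraction)
    actual_failures = sum(1 for s in sessions if s.failed)
    return allowed_failures - actual_failures


# 1,000 sessions, one in every hundred ending in a failure.
sessions = [Session(f"s{i}", failed=(i % 100 == 0)) for i in range(1000)]
```

With these sessions, the score is 99.0%, which exactly uses up a 99% target’s budget of 10 failed sessions, while a 99.9% target allows only one and is overspent by nine.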

James Smith 00:48:02 So the next thing I think you need to do is decide on an acceptable range of stability scores, in other words what your error budgets are. At Bugsnag we take the same approach as the Google SRE book, letting our customers select not just one target but two: an SLA goal and an SLO goal. The SLA, the service level agreement goal, says: if your stability score drops below this number, treat it as if the world’s on fire. You stop everything, drop everything you’re doing, and get stability back up to where it needs to be. For example, if Facebook’s API returns the wrong data format, you need to figure out how to get your application working again. But typically, for most people, stability will be above that SLA. The next goal is the SLO, the service level objective.

James Smith 00:48:57 Now, the service level objective, the way we see it, we call a stability target. Your target stability is more aspirational. It’s more like: what are our peers in the market doing, and where do we want to get to over time? So the sweet spot, in theory, if you set those SLAs and SLOs correctly, is to be somewhere in between the two, and to have those lines get closer and closer together over time as you understand more about the quality of your application. Setting those SLAs and SLOs is not something you do as an engineering team alone; you need to talk to your business or product team counterparts. Once you’ve done that, you end up with a really great symbiotic relationship forming between the product team and the engineering team. I’ll give you a great example.
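The two-threshold scheme described here, a critical SLA floor plus an aspirational stability target, amounts to a simple classification of the current score. The Python sketch below uses hypothetical threshold values in its usage; the status descriptions paraphrase the conversation and are not Bugsnag’s terminology.

```python
from enum import Enum


class StabilityStatus(Enum):
    CRITICAL = "below the SLA: stop everything and restore stability"
    WITHIN_RANGE = "between the SLA and the target: the expected sweet spot"
    AT_TARGET = "at or above the aspirational stability target"


def classify(score: float, sla: float, target: float) -> StabilityStatus:
    """Place a stability score (percent) relative to the SLA floor and SLO target."""
    if sla > target:
        raise ValueError("the SLA floor must not be above the stability target")
    if score < sla:
        return StabilityStatus.CRITICAL
    if score < target:
        return StabilityStatus.WITHIN_RANGE
    return StabilityStatus.AT_TARGET


# Hypothetical thresholds: critical below 98.5%, aspirational target 99.5%.
SLA, TARGET = 98.5, 99.5
```

Narrowing the gap between `SLA` and `TARGET` over time corresponds to the lines getting “closer and closer together” as the team learns more about the application’s quality.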

James Smith 00:49:45 One of our customers, in the hospitality space, was launching a premium hotels feature. During the launch, they dropped below their critical stability threshold. They told me they actually discussed it at a board meeting: “Hey, board of directors, I know you’re excited about this premium hotels feature, but we’re not going to ship it on time, and this is why.” And it wasn’t the engineering team saying that; it was the product team. So they had buy-in between the two parts of the R&D department, for lack of a better word: we agree that this is going to be a negative customer experience if we drop below this number, and this is what we’ll do. That’s really important. And what you do about it is the final piece of the puzzle.

James Smith 00:50:33 As you alluded to, once you’re measuring and you have targets you’ve agreed upon with the product team, what do you do next? There are a bunch of approaches here. The old way of doing things, as with QA, would be to toss problems over the fence, and some companies do this, and that’s fine. What I mean is having a team of developers that just fixes bugs. Now, I don’t know how many developers you’ve met who are super excited about spending all day fixing bugs. They definitely exist; I have met them before.

Priyanka Raghavan 00:51:07 Okay. Not every developer.

James Smith 00:51:16 I think that builds the wrong attitude in an engineering team. This is subjective, it’s my opinion, but I think the trend, more and more, is that you own the code you write as a software engineer for a long time. You don’t just build it and move on. Back in the day, when you shipped Microsoft Office, they printed it on a CD, shipped it to Best Buy, and someone picked it up from the store. That’s not how it works anymore. When you’ve written code, you’re going to see that code again, probably tomorrow. So having ownership after things go to production is a critical mindset for software developers. It should be obvious to a lot of developers, but I think it’s a critical mindset to have.

James Smith 00:52:01 So having a separate team that fixes bugs is, I think, a bad idea. In terms of positive approaches, I’ve seen two that work really well. One is ownership based on who touched the code last. Say an error comes into production and it’s been prioritized, using Bugsnag’s rankings or whatever you use, so someone in JIRA has ranked it at the right level. Ideally, the person who touched that code last is going to be the expert in that area. That’s not always so straightforward, because someone might have made a commit that changed tabs to spaces across the entire codebase, and now their name is all over it. But if you use git blame, it will tell you who last touched the code, and maybe that’s a good way to assign things.

James Smith 00:52:45 A better, less granular way of doing it is by which team owns that part of the codebase. A lot of teams are migrating to GitHub’s CODEOWNERS file format. I’m not sure if you’ve seen this, but you can now define, in a file in your repo, who owns each part of the codebase. So it’s less finger-pointing. It’s not, “Oh, James changed tabs to spaces, and now he caused this bug.” It’s more, this team is responsible for this area. So I think that’s a really good approach. An alternative, if you haven’t yet adopted those techniques, is what I think Airbnb calls a bug sheriff, or what we used to call a bug warrior at my previous company. The idea is that you’re on a rotation: you and probably an engineering buddy are responsible for triaging, prioritizing, and fixing bugs on a rotating basis.

James Smith 00:53:40 And really, this shouldn’t come around too frequently. You should set up the rotation so that you’re only bug warrior one week out of every five or six, at the shortest. It might not be code you’ve written, but it gives you empathy for other parts of the codebase, so it still builds empathy and ownership around the software you’re delivering to your customers. It really depends on how sophisticated you want to be, but I think you can’t triage and fix bugs effectively unless you commit to a process for doing so. Those are two really good techniques I like for doing that.
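Both ownership approaches can be sketched in Python. GitHub’s CODEOWNERS file maps path patterns to owners, and when several patterns match a file, the last matching one takes precedence; the matcher below is a simplified stand-in for the real `.gitignore`-style pattern rules. Falling back to the current bug-warrior pair when no rule matches is an assumption of this sketch, as is the pairing scheme: with five or six pairs, each pair is on duty roughly one week in five or six.

```python
from fnmatch import fnmatchcase


def parse_owners(text: str) -> list:
    """Parse CODEOWNERS-style lines of the form '<pattern> <owner> [<owner> ...]'."""
    rules = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        pattern, *owners = line.split()
        rules.append((pattern, owners))
    return rules


def _matches(pattern: str, path: str) -> bool:
    # Simplified: a trailing '/' means "anything under this directory";
    # otherwise use shell-style globbing. Real CODEOWNERS patterns follow
    # .gitignore rules, which cover more cases than this.
    pattern = pattern.lstrip("/")
    if pattern.endswith("/"):
        return path.startswith(pattern)
    return fnmatchcase(path, pattern)


def owners_for(path: str, rules: list) -> list:
    """Owners from the last matching rule, mirroring CODEOWNERS precedence."""
    owners = []
    for pattern, rule_owners in rules:
        if _matches(pattern, path):
            owners = rule_owners
    return owners


def bug_warrior_pair(engineers: list, week: int) -> list:
    """Hypothetical rotation: split engineers into pairs, one pair on duty per week."""
    pairs = [engineers[i:i + 2] for i in range(0, len(engineers), 2)]
    return pairs[week % len(pairs)]


def assign(path: str, rules: list, engineers: list, week: int) -> list:
    """Route a bug to the owning team, else to this week's bug warriors."""
    return owners_for(path, rules) or bug_warrior_pair(engineers, week)


RULES = parse_owners("""
# Hypothetical ownership map
/web/           @example-org/frontend
/web/payments/  @example-org/payments
""")
```

Because the later `/web/payments/` rule wins for files under that directory, the payments team is assigned those bugs even though `/web/` also matches them.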

Priyanka Raghavan 00:54:17 Right. So to summarize: first, you set up benchmarks, measure, and look at the data. Then you rank the bugs, either with your tooling or however you prefer, with someone going in and ranking them. Then there’s the part about who actually fixes them: instead of blaming, you assign the bug to a team member or to an entire team, using approaches like the bug warrior you mentioned, and they go and fix it. And one important thing is that the team who wrote the code should be the ones who fix it, so don’t just toss it over the fence. I think that’s what a lot of companies are doing, but it’s good to reiterate it.

James Smith 00:55:10 Well, I’ll tell you the one thing to definitely not do: wait for customers to complain. Whether you’re using Bugsnag, you’ve got a bug warrior, you’ve got great JIRA triage, or whatever you’re doing, it’s not that hard to adopt a data-driven approach to prioritizing, fixing, and remediating bugs. Yes, there are great tools out there, but even if you want to build your own stuff, make your own algorithms, or rank bugs your own way, don’t wait for customers to complain, because by the time customers complain, probably 50 other customers have already left your product. That’s the thing I still see a lot of companies doing, and it scares me when I see it.

Priyanka Raghavan 00:55:52 Okay. This is great, James. I think that’s probably all I have. But before we end, could you tell me a little more about the stability score? That’s one thing that really piqued my interest. How do you calculate it? Could you just reiterate that?

James Smith 00:56:14 Oh, okay. Yeah. So effectively, we want to help people understand what percentage of user interactions with their product fail. To break it down a little more specifically, say you’re in a JavaScript application, a React application. We’ll detect unhandled exceptions, we’ll detect unhandled promise rejections, and we’ll detect exceptions thrown in a callback, like I was talking about earlier. If you’re using a framework like React, we’ll also detect errors that bubble up to a React error boundary. So depending on the platform, we’ve basically built hooks into the failure states in your product. Sure, these are still going to give your customers a bad experience. Our product doesn’t magically stop bugs from happening, but it will detect when they happen.
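A Python analogue of these failure hooks can be built on `sys.excepthook`, which the interpreter calls for uncaught exceptions. The sketch below is illustrative only and is not Bugsnag’s SDK: `SessionTracker` is a hypothetical class that counts sessions, marks the current one failed when the hook fires, and then delegates to the previous hook so the error is still reported normally. Uncaught exceptions in other threads go to `threading.excepthook` instead, which this sketch does not cover. In the usage below, the hook is invoked directly to simulate an unhandled exception.

```python
import sys


class SessionTracker:
    """Hypothetical tracker: counts sessions and marks one failed on an unhandled exception."""

    def __init__(self):
        self.total = 0
        self.failed = 0
        self._current_failed = False
        self._previous_hook = None

    def install(self):
        self._previous_hook = sys.excepthook
        sys.excepthook = self._hook

    def uninstall(self):
        if self._previous_hook is not None:
            sys.excepthook = self._previous_hook
            self._previous_hook = None

    def start_session(self):
        self.total += 1
        self._current_failed = False

    def _hook(self, exc_type, exc_value, exc_tb):
        # Count each session as failed at most once, however many errors it hits.
        if self.total and not self._current_failed:
            self._current_failed = True
            self.failed += 1
        # Observe only: pass the error on so it is still reported as usual.
        (self._previous_hook or sys.__excepthook__)(exc_type, exc_value, exc_tb)

    def stability_score(self):
        if self.total == 0:
            return 100.0
        return 100.0 * (self.total - self.failed) / self.total


tracker = SessionTracker()
tracker.install()
for i in range(4):
    tracker.start_session()
    if i == 2:  # simulate an unhandled exception in the third session
        sys.excepthook(RuntimeError, RuntimeError("boom"), None)
tracker.uninstall()
```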

James Smith 00:57:07 And then that all feeds into the stability score. If any of those scenarios happened in your session, it counts as a failed session. There are different techniques on different platforms, but it’s always the same underlying concept: I want to know what percentage of my customer base had a positive experience. That’s how we calculate it. We’ve also got an article on our blog that talks about it in more depth.

Priyanka Raghavan Okay, that’s really interesting. Again, it was a great conversation. Thanks, James. I think that probably ends our session, but if listeners want to reach out to you, is there a Twitter handle they can use?

James Smith Yeah. I’m not super active on Twitter, but I do check my messages. I’m @loopj, L-O-O-P-J. I’m also on LinkedIn. James Smith is a very common name, but if you type “James Smith Bugsnag” into LinkedIn, you’ll find me pretty quickly. And bugsnag.com is our website, where you’ll find plenty of blog posts and more.

Priyanka Raghavan Okay. Thank you very much. This is Priyanka Raghavan, signing off for Software Engineering Radio. Thanks for listening.

[End of Audio]

SE Radio theme: “Broken Reality” by Kevin MacLeod (incompetech.com — Licensed under Creative Commons: By Attribution 3.0)